US Buyer’s Guide: How to Choose a Custom Software Development Partner (2026)

Selecting a custom software development partner is not a procurement formality. It is a delivery and risk decision that can affect uptime, security posture, timelines, and total cost long after launch.

If you are a US-based product or engineering leader accountable for outcomes, this guide gives you a repeatable selection process, the criteria to evaluate, and ways to verify vendor claims before you sign.

For the broader journey and supporting resources, start with the custom software vendor selection hub.

Why this decision matters in 2026

In 2026, a weak partner choice can show up as more than missed milestones. It can mean rework from low engineering quality, operational drag from constant steering, and compliance remediation because security and data handling expectations were never made explicit. It can also create a governance gap where everyone is working hard, but no one can explain progress, risk, or trade-offs in a way that stands up to executive scrutiny.

Modern third-party risk programs also raise the bar. If a partner touches production systems or sensitive data, buyers are increasingly expected to run a structured selection process and retain evidence of due diligence.

Action cue: Treat partner selection as a delivery risk decision, and plan to document your rationale as you would for any critical third party.

What “custom software development partner” means

A custom software development partner designs, builds, and often maintains software tailored to your business. In practice, that can include discovery and design, architecture, implementation, testing, DevOps engineering, and post-launch support. Unlike off-the-shelf software vendors, they are not selling you a product license. Unlike pure staff augmentation, they are typically accountable for outcomes.

It also helps to be explicit about boundaries. Most partners will not assume responsibility for your broader enterprise architecture, business change management, or go-to-market planning unless you contract for it. Likewise, services such as 24/7 operations, penetration testing, or formal risk assessments may be separate line items or handled by specialized firms. Even with a strong partner, accountability stays shared: you remain responsible for defining requirements and validating that delivered controls meet your obligations.

Practical way to frame this internally: it is a managed third-party engagement with defined delivery responsibilities, evidence expectations, and governance routines.

Action cue: Before you evaluate anyone, write down what you expect the partner to own across the lifecycle, and what stays on your side.

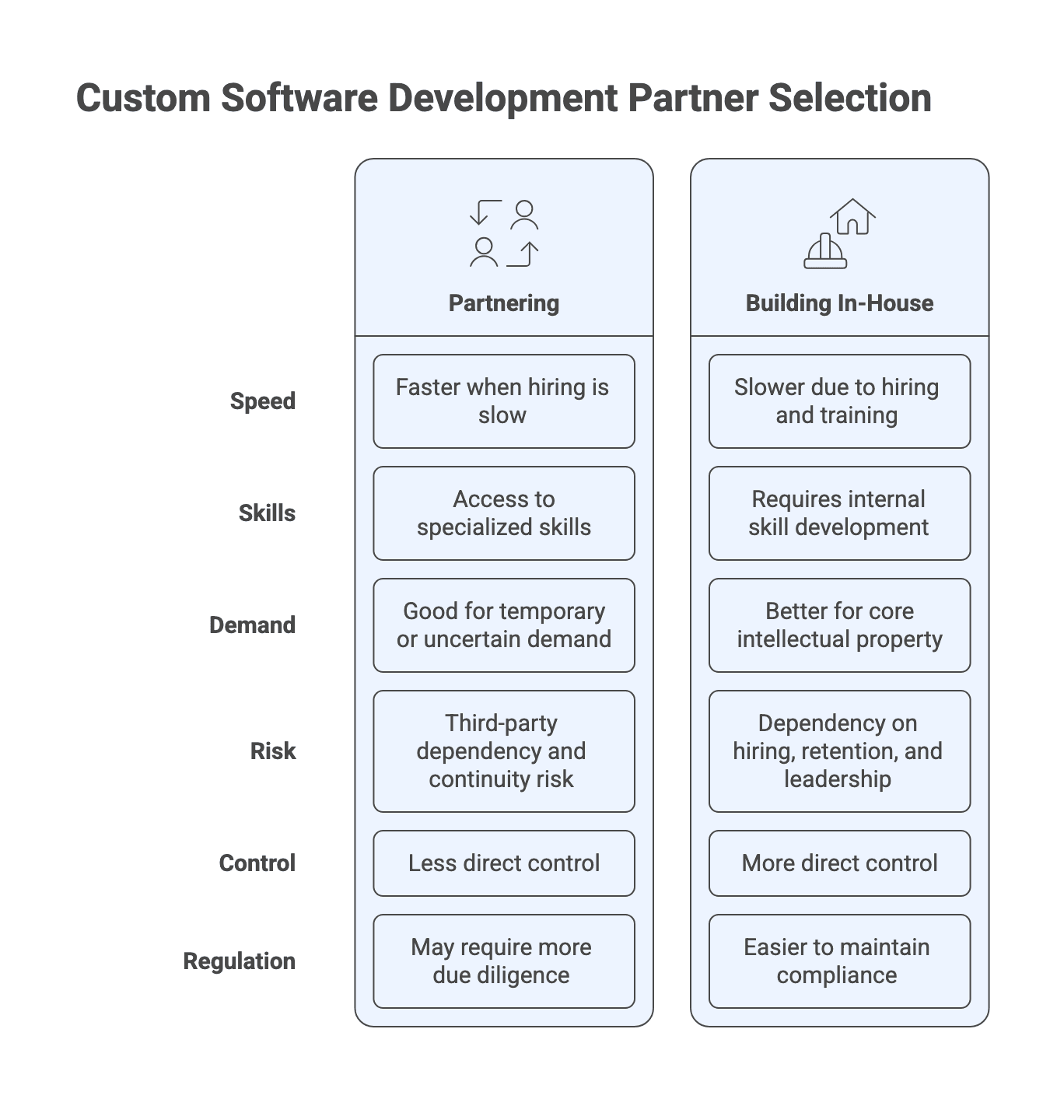

When partnering beats building in-house (and when it doesn’t)

Partnering is often the right call when speed matters and hiring will not keep up, when you need specialized skills you do not have, or when demand is temporary or uncertain. For US buyers, global delivery can also widen the talent pool and improve coverage, especially if you design time-zone overlap and collaboration expectations upfront.

Building in-house tends to win when the software is core intellectual property, strategic differentiation, or deeply embedded domain knowledge. It can also be preferable in highly regulated contexts where direct control, staffing continuity, and operational accountability are easier to maintain with an internal team.

Partnering introduces third-party dependency and continuity risk. Building in-house reduces vendor lock-in but increases dependency on hiring, retention, and internal leadership capacity. Neither option eliminates risk. The point is to choose where you want to carry it, then design governance and contracts accordingly.

Action cue: Classify your initiative by strategic criticality and scope certainty, then choose a partner-heavy, hybrid, or in-house approach that matches that profile.

Step-by-step selection process

This workflow is designed to be defensible. Each step produces concrete outputs you can use to compare options and reduce surprises.

Action cue: Run this as a gated process with explicit outputs, rather than an open-ended set of vendor conversations.

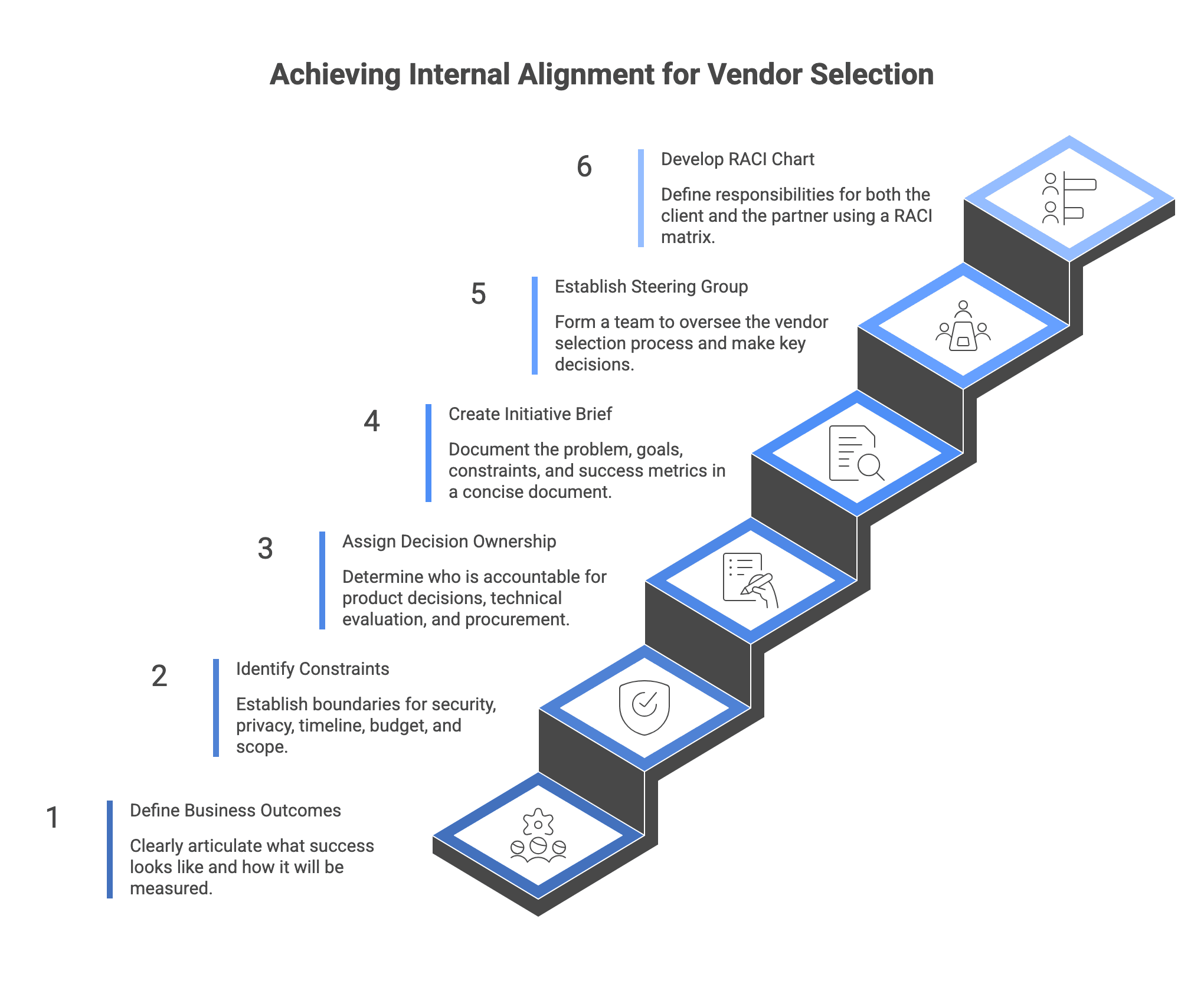

Step 1: Align internally on outcomes, constraints, and decision owners

Most vendor selection friction starts inside your company. When goals are vague or ownership is unclear, vendors receive conflicting signals, proposals become incomparable, and decisions stall.

Start with internal alignment on:

- Business outcomes and success metrics: what success means and how it will be measured.

- Constraints: security and privacy expectations, timeline drivers, budget envelope, and scope boundaries.

- Decision ownership: who is accountable for product decisions, technical evaluation, security and legal sign-off, and procurement mechanics.

Expected outcomes:

- A 1 to 2 page initiative brief (problem, goals, constraints, success metrics).

- A named decision owner and a small steering group.

- A RACI-style view of responsibilities across client and partner.

Action cue: Do not contact vendors until the brief and owners are agreed and signaled consistently.

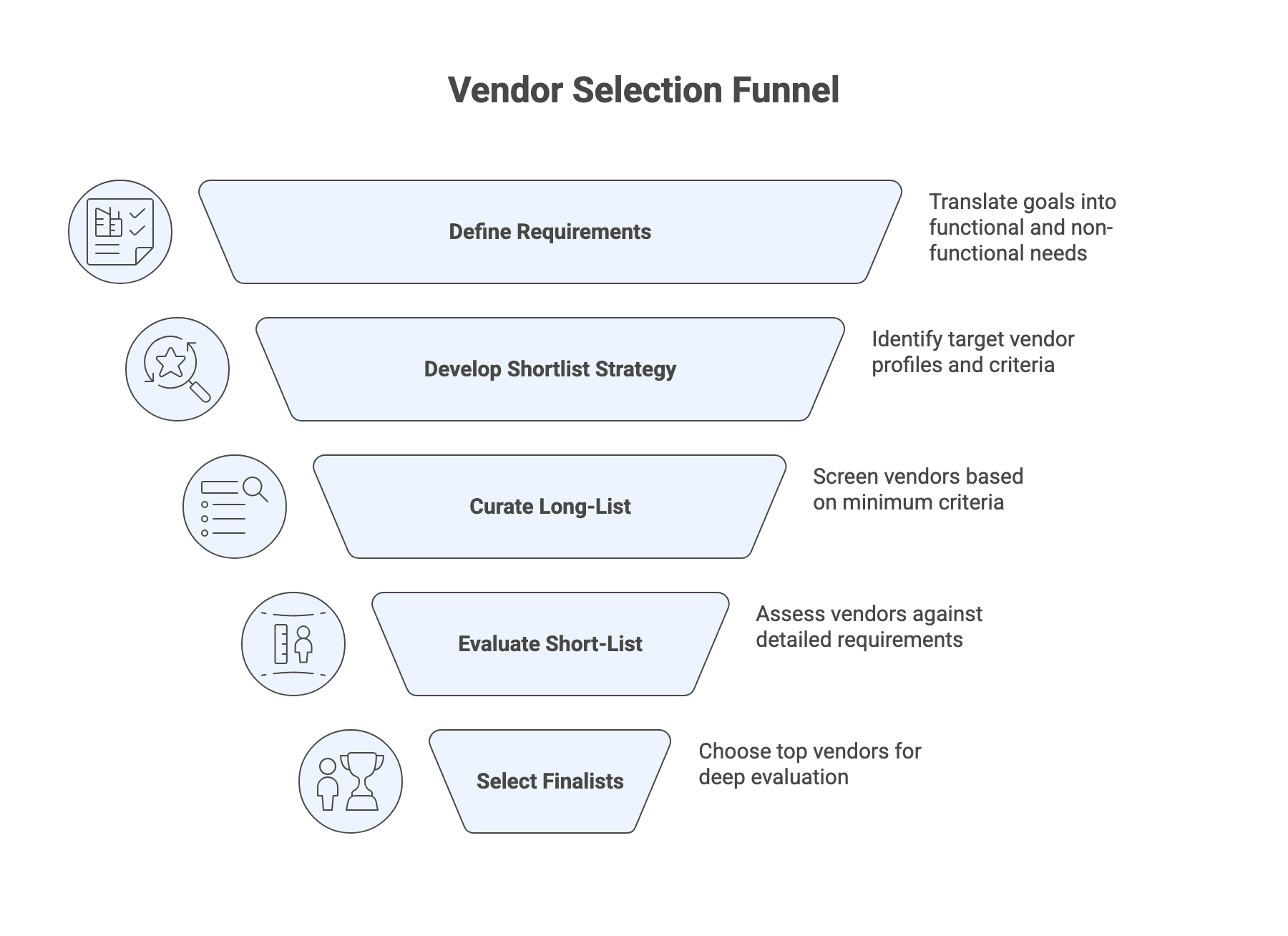

Step 2: Translate needs into requirements and a shortlist strategy

Convert goals into requirements that vendors can respond to concretely. If you are evaluating providers from our list of the top custom software development companies in 2026 or building your own shortlist, clarity at this stage determines how comparable proposals will be.

- Functional requirements: what the system must do, including key user flows and integrations.

- Non-functional requirements: performance, reliability, security, scalability, usability, support, and operational expectations.

In 2026, non-functional requirements often drive hidden cost. If you do not define expectations for authentication, encryption, logging, monitoring, and data handling early, you are likely to pay for retrofits later.

Shortlist strategy should define:

- Target vendor profiles (tech focus, domain experience, geography, team scale).

- Minimum criteria (stack alignment, similar project size, proof of delivery maturity).

- A manageable funnel: long-list to short-list to finalists.

Expected outcomes:

- Requirements list labeled must-have, should-have, nice-to-have.

- Screening checklist for long-list curation.

- A list of finalists you can evaluate deeply without burning cycles.

Action cue: Prioritize clarity and comparability over completeness, and label requirements to avoid treating every item as critical.

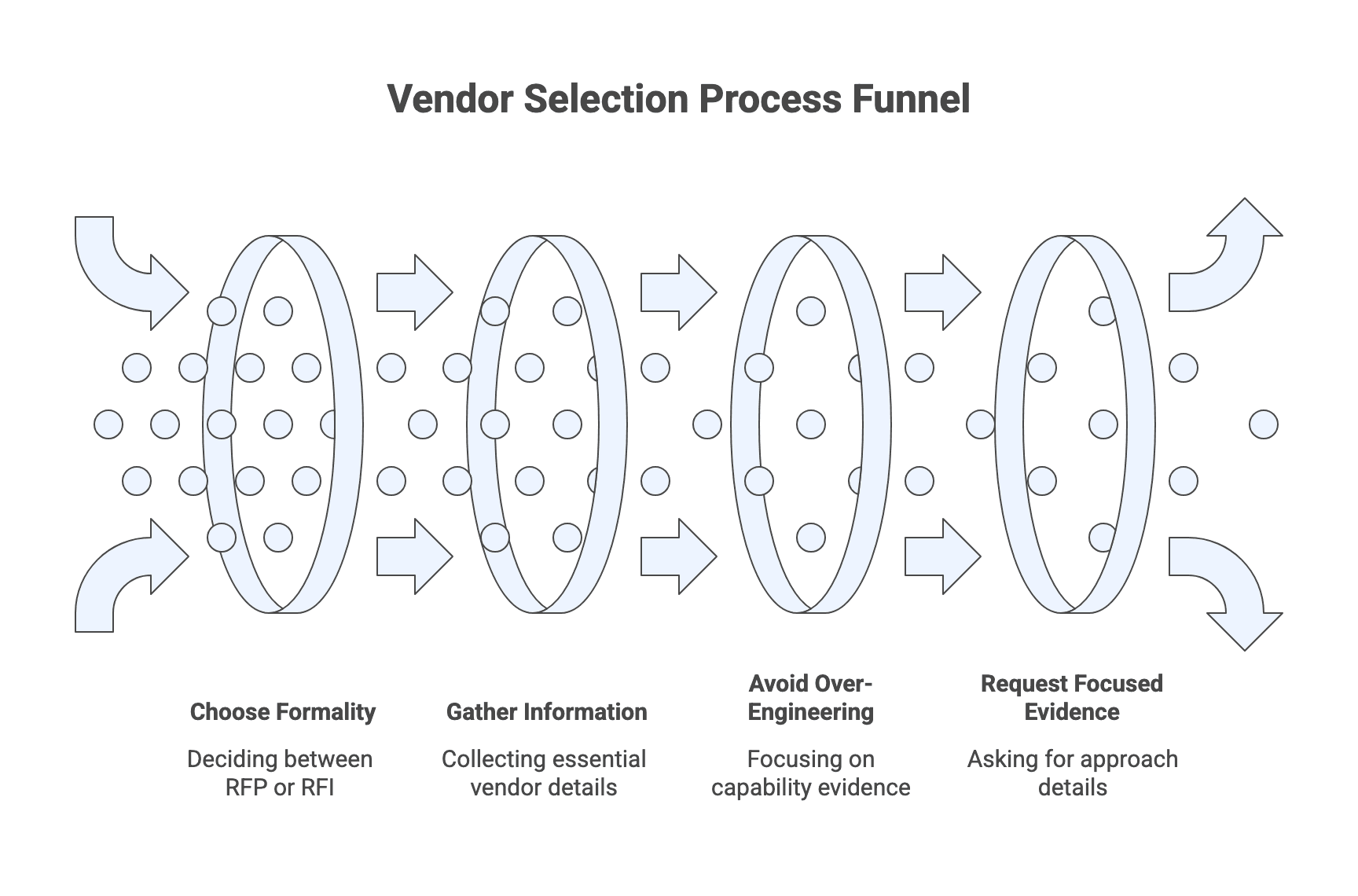

Step 3: Run an RFP or structured discovery without over-engineering it

Choose formality based on value and risk. Large, regulated, or high-stakes initiatives often warrant a formal RFP with structured scoring. Smaller or exploratory work can be better served by a lighter request for information plus discovery workshops.

Common elements that consistently improve proposal quality:

- Clear overview and problem statement.

- Scope boundaries and what is out of scope.

- Requirements, including non-functional and security constraints.

- Timeline expectations and decision dates.

- Vendor response requirements, including requested artifacts.

Avoid over-engineering. Asking for extensive speculative design or free proofs of concept can deter strong vendors and produce low-signal material. A better pattern is to ask for focused evidence of approach and then reserve deep solution design for a later, potentially paid discovery phase.

If you need a structured starting point, use the custom software development RFP template.

Action cue: Ask for the smallest set of information that reveals capability, and keep deep design work for the shortlist stage.

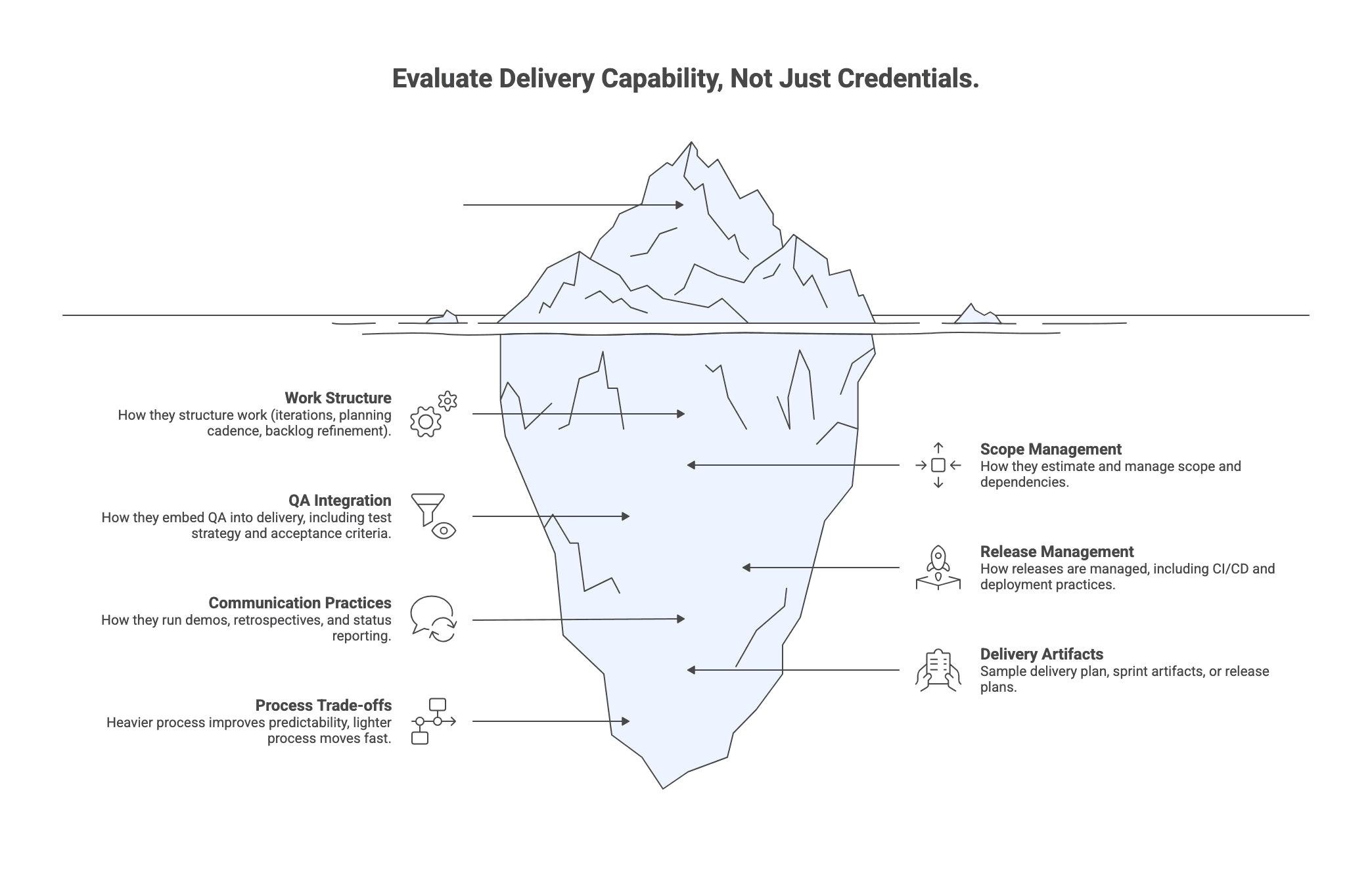

Step 4: Evaluate delivery capability instead of just credentials

Logos and marketing claims do not ship software. Delivery capability shows up in how a partner plans, executes, tests, and communicates.

What to probe:

- How they structure work (iterations, planning cadence, backlog refinement).

- How they estimate and manage scope and dependencies.

- How they embed QA into delivery, including test strategy and acceptance criteria.

- How releases are managed, including CI/CD and deployment practices.

- How they run demos, retrospectives, and status reporting.

Artifacts that often separate mature delivery from sales narratives:

- Sample delivery plan, sprint artifacts, or release plans.

- QA and testing strategy.

- Status report templates and risk registers (RAID logs).

- A walkthrough of a recent engagement including setbacks and course corrections.

Trade-offs to recognize:

- Heavier process can improve predictability and auditability, but adds overhead.

- Lighter process can move fast in early discovery, but requires stronger client engagement and clearer decision-making.

Action cue: Make vendors show how they deliver, rather than only listing what they have delivered.

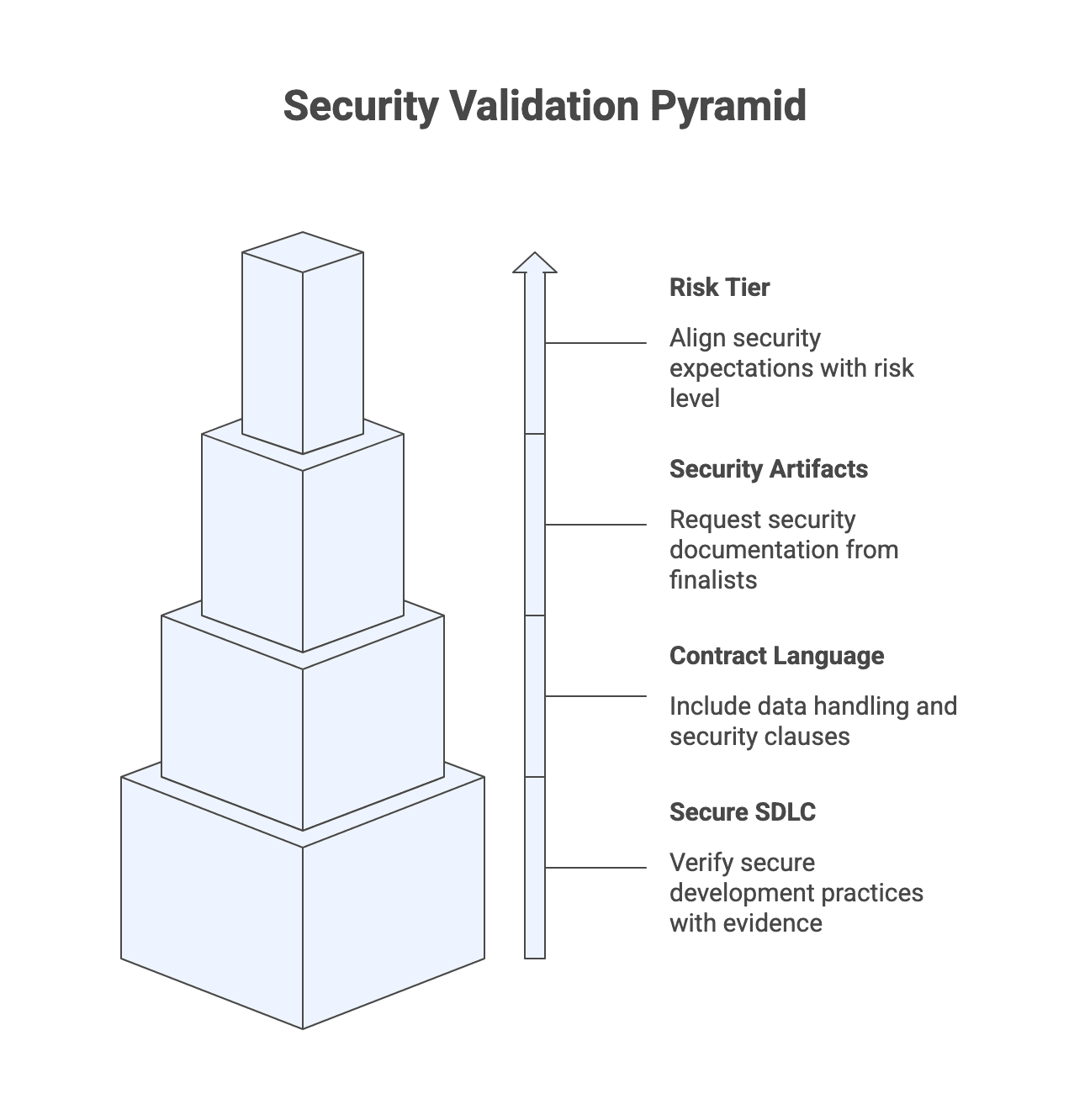

Step 5: Validate security, privacy, and compliance fit (right-sized to your risk)

Security should be evaluated as an operational practice. Mature partners can explain how secure development works throughout the lifecycle, including:

- Threat modeling and secure design thinking.

- Secure coding standards and code review practices.

- Dependency management and software supply chain controls.

- CI/CD hardening and secrets management.

- Vulnerability management and incident readiness.

Due diligence depth should match risk tier. If a partner will handle sensitive data or critical processes, buyers commonly request security questionnaires, audit reports or attestations where available, penetration test summaries, and incident response and business continuity basics. Certifications can be useful signals, but they do not guarantee that your system will be secure. You still need project-specific security requirements and validation.
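To make tiering concrete, here is a minimal Python sketch of mapping a risk tier to a required artifact list. The three tier names, the rules in `risk_tier`, and the contents of the lower tiers are illustrative assumptions, not a standard. Only the "high" list mirrors the artifacts named above. Adapt the tiers to your own third-party risk program.

```python
# Hypothetical three-tier scheme. Only the "high" list mirrors the artifacts
# named in the text; the lower tiers are illustrative assumptions.
REQUIRED_ARTIFACTS = {
    "low": ["security questionnaire"],
    "medium": [
        "security questionnaire",
        "incident response and business continuity basics",
    ],
    "high": [
        "security questionnaire",
        "audit report or attestation (where available)",
        "penetration test summary",
        "incident response and business continuity basics",
    ],
}


def risk_tier(handles_sensitive_data_or_critical_process, touches_production):
    """Assign a tier using simple, assumed rules; replace with your own policy."""
    if handles_sensitive_data_or_critical_process:
        return "high"
    if touches_production:
        return "medium"
    return "low"


def missing_artifacts(tier, received):
    """List required artifacts for the tier that the vendor has not yet supplied."""
    return [item for item in REQUIRED_ARTIFACTS[tier] if item not in received]


tier = risk_tier(handles_sensitive_data_or_critical_process=True,
                 touches_production=True)
print(tier, missing_artifacts(tier, {"security questionnaire"}))
```

A gap in this list is a prompt for follow-up rather than an automatic disqualifier. The sketch only tracks what has been received, not whether the evidence is adequate.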

Expected outcomes:

- Risk tier and security expectations aligned with your internal program.

- Required security artifacts list for finalists.

- Contract language requirements for data handling, security testing responsibilities, and incident processes.

Action cue: Tier the diligence effort by criticality and data sensitivity, then verify secure SDLC practices with evidence.

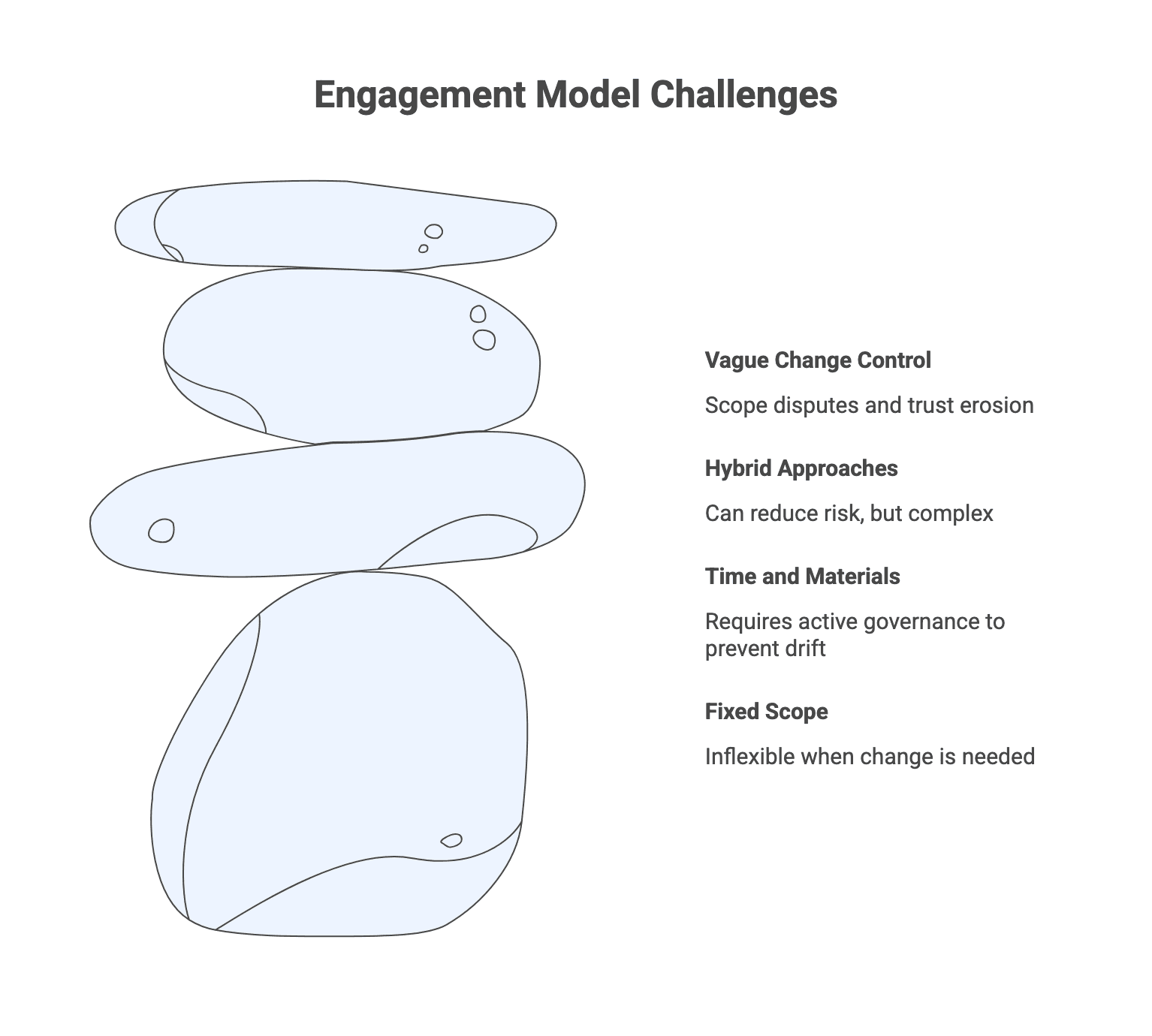

Step 6: Choose an engagement model and commercial structure that matches uncertainty

The engagement model is where many partnerships either stay healthy or break down.

High-level guidance:

- Fixed scope / fixed price works best when requirements are stable and deliverables can be defined precisely. It offers budget predictability, but can be inflexible when change is needed.

- Time and materials supports evolving scope and iterative prioritization, but requires active governance to prevent budget drift.

- Hybrid approaches can reduce risk, for example a fixed-scope discovery phase followed by iterative build, or time and materials with caps or milestone structures.

Regardless of model, change control must be explicit: how changes are proposed, estimated, approved, and tracked. If change control is vague, scope disputes and trust erosion are likely.
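One way to picture explicit change control is as a small state machine in which a change cannot be approved until it has been estimated. The Python sketch below is illustrative only. The status names, the `ChangeRequest` class, and the actor labels are assumptions for the example, not a prescribed workflow or tool.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Status(Enum):
    PROPOSED = "proposed"
    ESTIMATED = "estimated"
    APPROVED = "approved"
    REJECTED = "rejected"


# Allowed transitions: a change must be estimated before it can be approved.
TRANSITIONS = {
    Status.PROPOSED: {Status.ESTIMATED, Status.REJECTED},
    Status.ESTIMATED: {Status.APPROVED, Status.REJECTED},
    Status.APPROVED: set(),
    Status.REJECTED: set(),
}


@dataclass
class ChangeRequest:
    title: str
    status: Status = Status.PROPOSED
    estimate_hours: Optional[float] = None
    history: list = field(default_factory=list)  # the tracking trail

    def move_to(self, new_status, actor):
        """Apply a transition if it is allowed, and record who made it."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(
                f"cannot go from {self.status.value} to {new_status.value}"
            )
        self.history.append((self.status.value, new_status.value, actor))
        self.status = new_status


# Illustrative usage with made-up actors.
cr = ChangeRequest("Add SSO login")
cr.estimate_hours = 40
cr.move_to(Status.ESTIMATED, actor="vendor delivery lead")
cr.move_to(Status.APPROVED, actor="client product owner")
print(cr.status.value, cr.history)
```

The `history` list is the part that matters in a dispute: it records who moved each change through which step.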

For deeper trade-offs and when to use which approach, see: custom software development engagement models guide.

Action cue: Pick the model that matches how uncertain your scope really is, and define change control before kickoff.

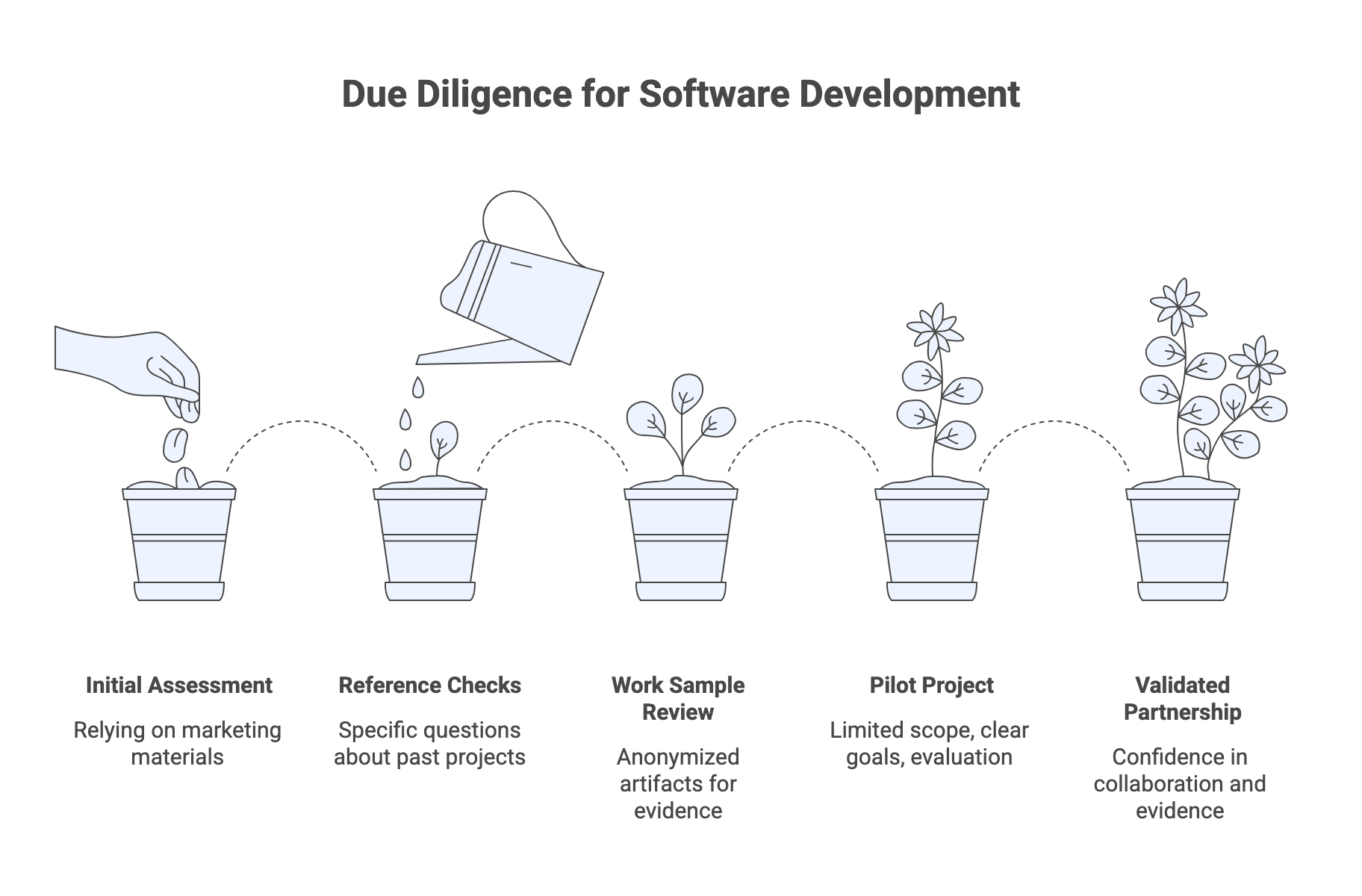

Step 7: Run structured due diligence: references, work samples, and pilot options

Due diligence is where you verify claims under real conditions.

Reference checks work best when they are specific:

- How did the vendor handle setbacks and slippage?

- How did they manage change requests?

- How did they communicate risk and bad news?

- How stable was the team and how was continuity handled?

- What was the quality of documentation and handover?

Work samples can raise confidence quickly, especially anonymized artifacts like plans, status reports, test strategies, and retrospectives.

When you have close contenders or higher uncertainty, a paid pilot or discovery sprint can be the highest-signal step. It should have clear goals, limited scope, and explicit evaluation criteria, and it should produce useful outputs even if you choose not to continue.

Expected outcomes:

- Reference call script and scoring notes.

- Artifact review checklist for delivery and security evidence.

- Pilot brief with success criteria if you choose to run one.

Action cue: Validate the partnership by testing collaboration and evidence quality, rather than reading more marketing material.

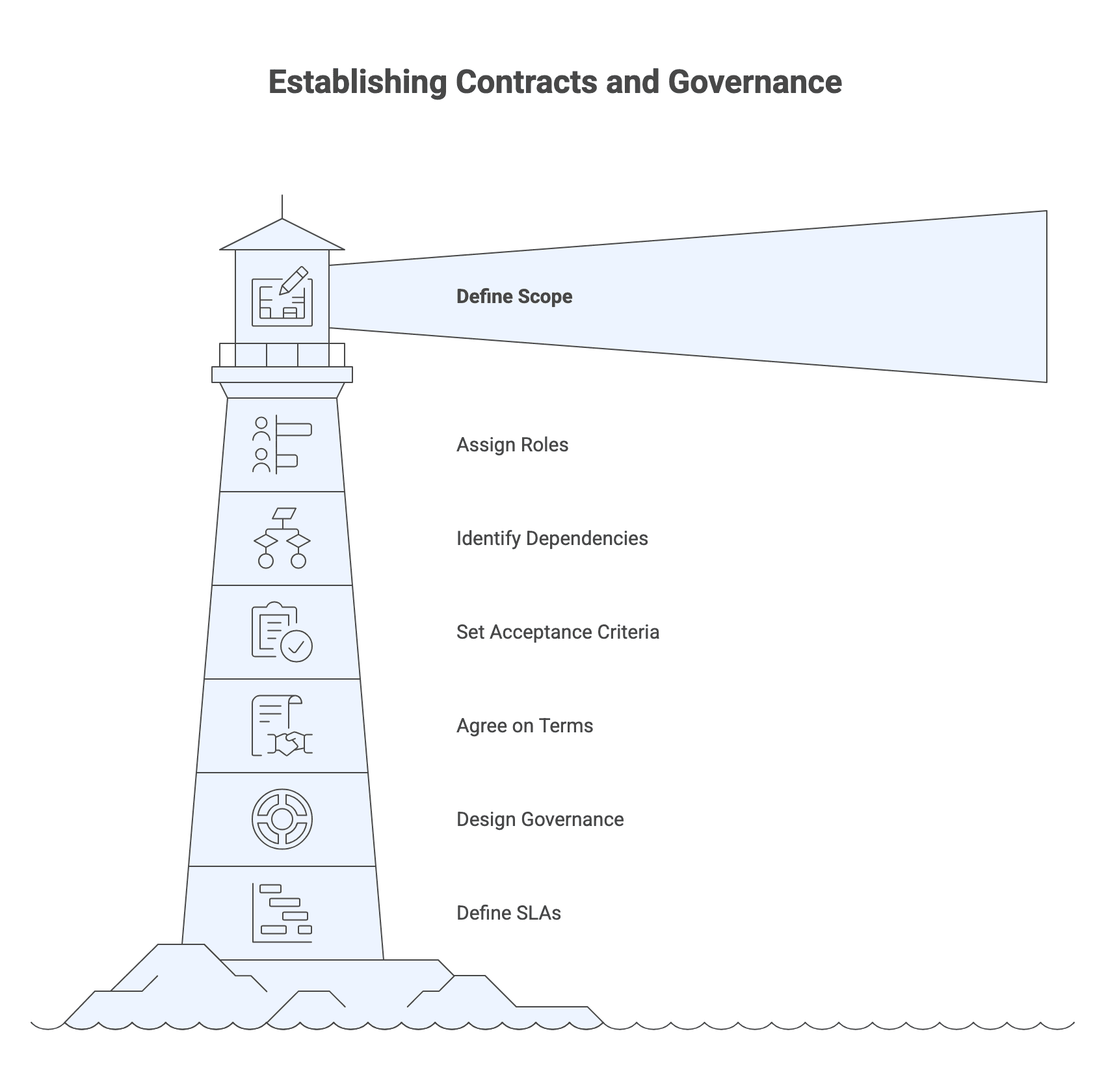

Step 8: Contracting and governance setup before kickoff

Contracts and governance are not administrative. They shape incentives and execution.

A strong Statement of Work (SOW) should define:

- Scope and objectives, deliverables, and milestones.

- Roles and responsibilities, including who owns decisions.

- Assumptions and dependencies.

- Acceptance criteria and how acceptance will be measured.

- Commercial terms, billing mechanics, and change control.

Governance should be designed before day one:

- Meeting cadence, reporting format, and tooling.

- Escalation paths and decision forums.

- How risks and issues will be tracked and resolved.

- When and how performance will be reviewed.

If relevant, define Service Level Agreements (SLAs) and Service Level Objectives (SLOs) for post-launch support, including availability, incident response and resolution targets, and defect handling. Make sure measurement mechanics are clear and realistic.
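When checking whether an availability target is realistic, it helps to convert the percentage into a downtime budget. The Python sketch below does that arithmetic. The function name and the 30-day measurement window are assumptions for illustration. Your contract should state the actual window and what counts as downtime.

```python
def downtime_budget_minutes(availability_pct, period_days=30):
    """Minutes of downtime allowed over the period at a given availability target."""
    if not 0 < availability_pct <= 100:
        raise ValueError("availability_pct must be greater than 0 and at most 100")
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)


# Compare a few common targets over an assumed 30-day window.
for target in (99.0, 99.9, 99.99):
    budget = downtime_budget_minutes(target)
    print(f"{target}% availability allows about {budget:.1f} minutes of downtime")
```

Over 30 days, a 99.9% target leaves roughly 43 minutes of downtime. Whether that is achievable depends on details such as planned maintenance exclusions and support hours, which the sketch does not model.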

Action cue: Document delivery governance as part of the product system, and encode critical expectations in the SOW and contract.

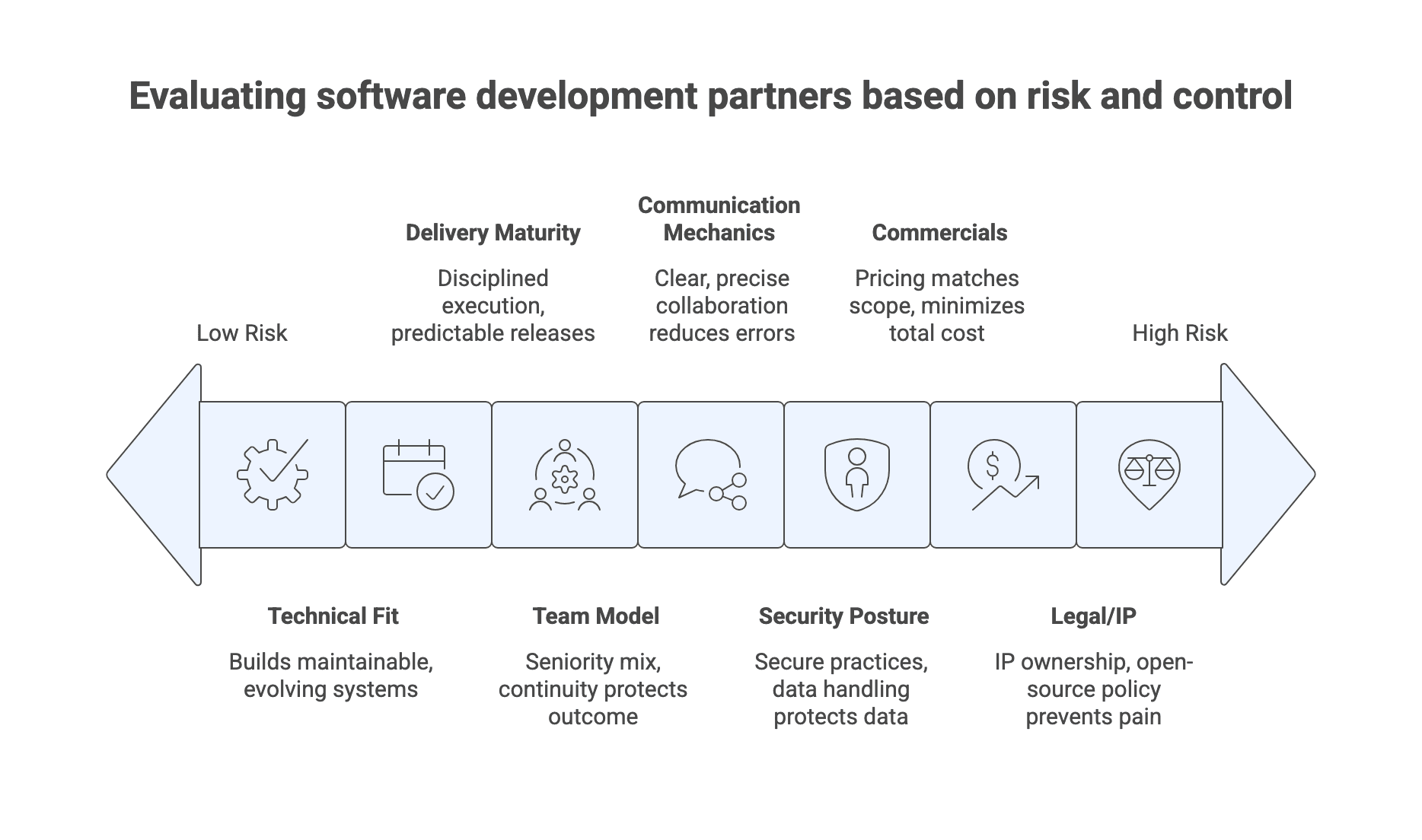

What to evaluate (criteria & trade-offs)

Once you have a workable shortlist, evaluate using consistent criteria. The goal is to understand fit and trade-offs.

Action cue: Use a small set of criteria that reflect your real risks, then weight them based on criticality.

Technical and architectural fit

Technical fit is not just stack familiarity. It is whether the partner can build in a way your organization can operate and evolve.

What to evaluate:

- Experience with your languages, frameworks, cloud platform, and toolchain.

- Integration capabilities with your critical systems and APIs.

- Architectural thinking and ability to explain trade-offs.

- Maintainability signals: modular design, documentation habits, testing discipline, and technical debt management.

Key trade-off: a partner may propose technologies that are new to your team. That can bring speed or specialized capability, but it can also increase long-term dependency and reduce internal ownership unless you plan for knowledge transfer.

Action cue: Require rationale for major technology choices, and confirm you can maintain what is built without permanent dependency.

Delivery maturity and predictability

Delivery predictability comes from disciplined execution, not from a claim of being “agile.”

What to evaluate:

- Planning cadence and backlog management practices.

- QA integration: automated testing where appropriate, and clear acceptance criteria.

- Release practices and CI/CD maturity.

- How progress and risk are reported, including what happens when work is off-track.

Key trade-off: more formal delivery practices can improve predictability, but may feel slower during exploration. Match maturity to project phase: discovery often needs flexibility, while build and launch need discipline.

Action cue: Ask how the partner measures delivery health, and what they do when milestones slip.

Team model, seniority mix, and continuity

Team composition often determines your outcome more than the company brand.

What to evaluate:

- Named roles and responsibilities: engineering lead, architect, QA, DevOps, product or delivery manager.

- Seniority mix that fits complexity.

- Continuity mechanisms: documentation, shared code ownership, onboarding practices, and backup coverage.

- Substitution rules: under what conditions people can be swapped, and how you approve changes.

Common failure mode: the A-team sells, the B-team builds. Avoid this with explicit staffing commitments and by meeting the delivery leads before signing.

Action cue: Confirm who will actually work on your product, and how continuity will be protected over time.

Communication and collaboration mechanics

Communication failures are often misdiagnosed as technical failures.

What to evaluate:

- Proposed cadence: standups, sprint reviews, planning, steering committee.

- Artifacts: status reports, decision logs, shared boards, and documentation norms.

- Time-zone overlap, escalation handling outside core hours, and responsiveness expectations.

- Clarity and precision during the sales cycle as an indicator of future behavior.

Trade-off: more synchronous collaboration reduces misunderstanding but costs time. More asynchronous collaboration can scale but requires discipline and strong writing.

Action cue: Define the working rhythm and artifacts early, and make them part of the governance design.

Security posture and data handling practices

Security posture is both organizational and project-specific.

What to evaluate:

- Secure development practices across the lifecycle.

- Access controls, least privilege, and joiner-mover-leaver processes.

- Secrets management, logging and monitoring expectations, and incident procedures.

- Data handling rules: use of production data in lower environments, encryption in transit and at rest, retention and deletion.

Trade-off: strict controls can slow development if not designed well. The right balance depends on your risk tier.

Action cue: Provide your data classification requirements, then ask the vendor to explain how they will implement them in practice.

Commercials: pricing model signals and total cost risk

Pricing structure affects incentives, and incentives affect outcomes.

What to evaluate:

- Whether the model matches scope certainty.

- Rate cards, billing increments, and expense policies.

- Change control mechanics and how estimates are produced.

- How the vendor talks about rework, quality, and risk.

Hidden costs commonly come from rework, heavy client oversight, and compliance remediation when expectations were unclear. Total cost includes internal management time, operational overhead, and transition costs.

Action cue: Model total cost across the system lifecycle, including governance effort and transition risk.

Legal/IP and operational risk

Legal clarity prevents operational pain later.

What to evaluate:

- IP ownership and licensing for custom deliverables.

- Treatment of reusable components and frameworks.

- Open-source usage policy, tracking, and compliance habits.

- Subcontracting policy and how obligations flow down to fourth parties.

- Termination and transition support: access to code, documentation, and reasonable assistance.

Operational risk rises when critical knowledge is concentrated in a few people, or when subcontracting is opaque. These can be manageable, but only if you design for visibility and transition.

Action cue: Get explicit about IP, open-source policy, and transition support before you lock into an engagement.

How to Verify If a Vendor Can Actually Deliver

Verification is where you make the decision real. This is also what makes your final choice defensible to stakeholders, auditors, and leadership.

Action cue: Standardize what you request across vendors, so your evaluation stays comparable.

The artifact request list (minimum set)

A pragmatic minimum set for substantial custom software engagements:

Delivery evidence:

- Sample SOW from a similar engagement.

- Sample delivery plan or release plan, including how progress is reported.

- QA strategy document, including test approach and how acceptance criteria are applied.

- Examples of status reports and a risk log (RAID).

Technical evidence:

- Architecture overview from a comparable system, including rationale and key trade-offs.

- Documentation samples that show maintainability habits.

Security and risk evidence (scaled to your risk tier):

- Security questionnaire responses.

- Supporting evidence proportionate to criticality, such as audit report excerpts or summaries where available.

- Penetration test summary and vulnerability management approach.

- Incident response and business continuity basics.

Team evidence:

- Bios for key roles and confirmation of staffing continuity expectations.

How to use artifacts well:

- Review them cross-functionally. Delivery, security, legal, and engineering should see the same core materials.

- Use gaps as prompts for follow-up. Not every missing artifact is a disqualifier, but it is always a signal.

- Store final artifacts centrally as part of vendor management records.

Action cue: Ask for evidence that shows how work is actually executed, rather than what the vendor claims to value.

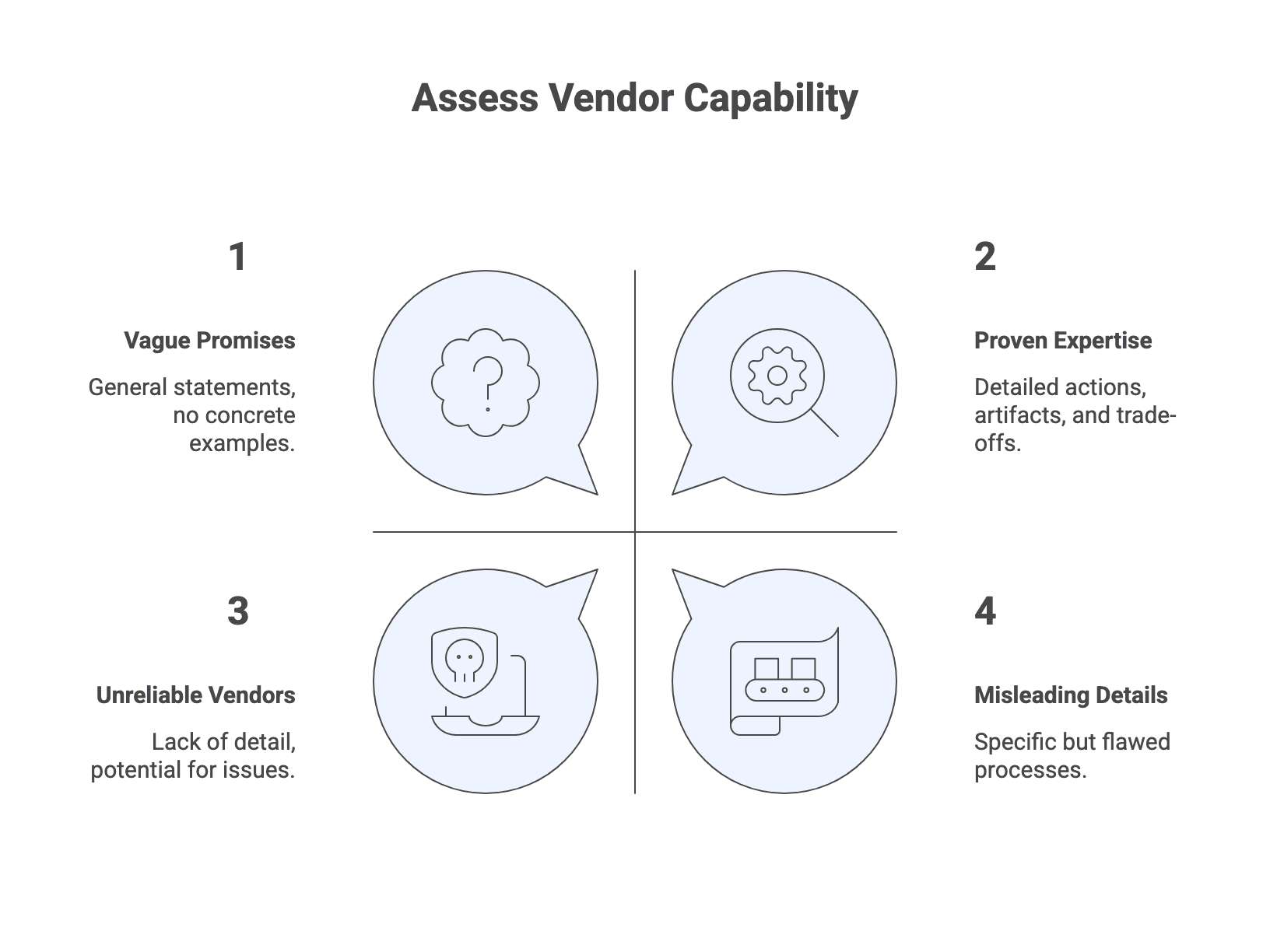

Interview questions that reveal real capability

Use scenario-based prompts that force specificity. Examples:

Delivery and governance:

- “Describe a project that went significantly off track. What changed, what did you do, and what artifacts did you produce to manage the recovery?”

- “How do you handle scope changes without derailing delivery? Walk through the change control steps.”

Quality and engineering practices:

- “Show how acceptance criteria are defined and validated. Where does QA fit in the iteration?”

- “What does code review look like in practice, and how do you prevent critical knowledge from concentrating in one person?”

Security posture:

- “A widely used dependency gets a serious vulnerability. How do you detect impact, triage, patch, and communicate?”

- “How do you manage access to client environments and secrets across the team lifecycle?”

Collaboration:

- “How do you communicate bad news, and what cadence and artifacts do you use so there are no surprises?”

How to evaluate answers:

- Look for concrete steps, artifacts, and trade-offs.

- Ask to meet the people who will actually own delivery roles, instead of only sales leadership.

- Map answers back to your criteria rather than relying on overall rapport.

Action cue: Require specificity. If a vendor cannot describe real behaviors and artifacts, you should assume they will not appear later.

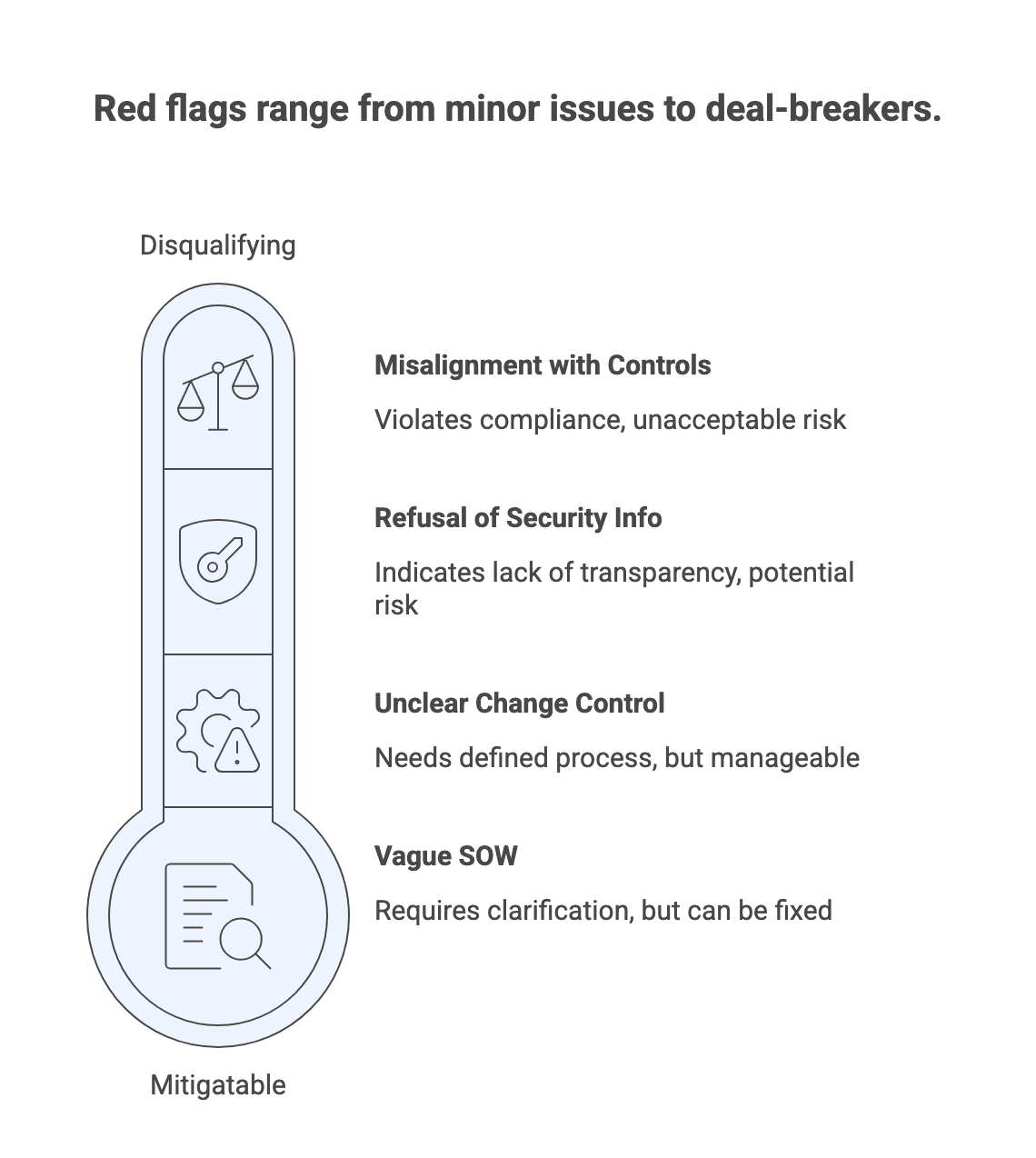

Red flags and disqualifiers

Red flags that often correlate with delivery pain:

Delivery and quality:

- No clear delivery methodology, or “we are agile” without specifics.

- No defined QA strategy or testing discipline.

- Vague SOW language with ambiguous deliverables or acceptance criteria.

- Inability to explain how they handle slippage and risk.

Security and risk:

- Refusal to provide basic security information for a risk-tier-appropriate engagement.

- Misalignment with mandatory controls for your data and systems.

- Over-reliance on certifications as the only security answer.

Commercials and governance:

- One-sided contract terms that undermine collaboration.

- Change control that is unclear or absent.

- Billing practices that are hard to reconcile or audit.

How to use a red-flag list:

- Separate “mitigatable risks” from “disqualifiers.”

- Document what you found and how you handled it. This is what makes the decision defensible.

- Do not let branding or rapport override a critical control gap.

Action cue: Decide upfront which red flags are deal-breakers for your risk tier, then apply that bar consistently.

Lightweight scoring: how to compare vendors consistently

A lightweight scoring model helps you avoid gut-feel decisions without creating bureaucracy.

Keep it simple:

- Choose 6 to 10 criteria that map to your risks, such as technical fit, delivery maturity, security posture, communication, commercials, and references.

- Use a consistent scale, for example 1 to 5.

- Weight criteria based on risk. For high-risk projects, delivery maturity and security should outweigh price.

- Have multiple evaluators score independently, then reconcile differences and record rationale.

If you want a more structured template and weighting guidance, use a custom software development vendor evaluation scorecard.
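As a rough illustration of the steps above, the Python sketch below averages independent evaluator ratings per criterion, applies weights, and flags criteria where evaluators disagree enough to need reconciliation. The weights, the disagreement threshold, and the sample ratings are all hypothetical. They are not recommended values.

```python
from statistics import mean

# Hypothetical weights that sum to 1.0; tune them to your own risk profile.
WEIGHTS = {
    "technical_fit": 0.20,
    "delivery_maturity": 0.25,
    "security_posture": 0.25,
    "communication": 0.10,
    "commercials": 0.10,
    "references": 0.10,
}


def weighted_score(scores_by_evaluator):
    """Average each criterion across evaluators (1 to 5 scale), then apply weights."""
    total = 0.0
    for criterion, weight in WEIGHTS.items():
        ratings = [scores[criterion] for scores in scores_by_evaluator]
        if any(not 1 <= rating <= 5 for rating in ratings):
            raise ValueError(f"{criterion}: ratings must be between 1 and 5")
        total += weight * mean(ratings)
    return round(total, 2)


def disagreements(scores_by_evaluator, threshold=2):
    """Return criteria where evaluators differ by `threshold` points or more."""
    flagged = []
    for criterion in WEIGHTS:
        ratings = [scores[criterion] for scores in scores_by_evaluator]
        if max(ratings) - min(ratings) >= threshold:
            flagged.append(criterion)
    return flagged


# Illustrative data only: two evaluators scoring one fictional vendor.
vendor_a = [
    {"technical_fit": 4, "delivery_maturity": 4, "security_posture": 3,
     "communication": 5, "commercials": 3, "references": 4},
    {"technical_fit": 4, "delivery_maturity": 2, "security_posture": 3,
     "communication": 4, "commercials": 3, "references": 4},
]

print("weighted score:", weighted_score(vendor_a))
print("reconcile:", disagreements(vendor_a))
```

In this made-up example the two evaluators differ by two points on delivery maturity, so that criterion is flagged for discussion. The flagged list is where the recorded rationale matters most.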

Action cue: Use scoring to structure the discussion and documentation.

Conclusion: make the decision defensible

A good partner choice in 2026 is not the one with the best pitch. It is the one you can verify, govern, and explain.

Make the decision defensible by:

- Defining outcomes, constraints, and owners before you engage vendors.

- Evaluating delivery maturity, security posture, and collaboration mechanics with evidence.

- Choosing an engagement model that matches how uncertain your scope really is.

- Running structured due diligence with references, artifacts, and pilots when needed.

- Capturing the final rationale in a short decision record and storing it with your vendor management documentation.

Action cue: Create a one-page decision memo that links requirements, evaluation outcomes, and the reason the selected partner best fits your risk profile.