Custom Software Development Vendor Evaluation Scorecard (How to Use + Download)

If you are selecting a custom software development vendor, the hardest part is rarely finding options. It is comparing them consistently across delivery, technical fit, and risk, with enough evidence to defend the decision later.

This guide gives you a practical vendor evaluation scorecard template you can copy into a spreadsheet, plus a straightforward way to use it with a shortlist so your buying committee can agree on a finalist without relying on gut feel, the slickest demo, or the lowest day rate.

Why this decision needs a scorecard (and what it prevents)

This vendor choice has long-tail consequences. The wrong fit can mean months of scope churn, missed windows, painful rewrites, and multi-year spend that becomes hard to unwind. The risk is rarely just technical skill. It lies in governance, continuity, and the realities of how the vendor plans, reports, tests, and escalates when delivery gets messy.

A scorecard prevents the most common failure mode in vendor selection: evaluating vendors tactically and inconsistently. Without a shared rubric, teams tend to optimize for what is easiest to compare early, like day rates or a confident presentation. Meanwhile, the factors that predict outcomes, like delivery maturity, quality discipline, security posture, and team continuity, get evaluated too late, when leverage is lower and switching costs are higher.

A good scorecard also reduces committee bias. When product, engineering, security, and procurement bring different priorities, it is easy to end up “horse trading” across opinions instead of aligning on evidence. A shared rating scale, documented rationale, and agreed weights help keep the decision anchored in what matters for your project.

One more benefit is continuity. If you select a vendor based on delivery reliability, defect trends, responsiveness, and governance quality, those same dimensions can later become your recurring KPIs and quarterly review topics. That connection helps you detect drift early and act before performance problems become entrenched.

If you already have two to five vendors in mind, the most useful next step is to lock your criteria, weights, and disqualifiers now, before the next sales call influences what "good" looks like. If you are still building your shortlist, review our shortlist of top custom software development companies to identify vendors that meet baseline delivery, technical, and governance standards before applying this scorecard.

What this scorecard is (and who should use it)

This scorecard is a structured evaluation tool for assessing custom software development vendors across criteria that tend to predict real-world outcomes. It uses an E-A-V style rubric:

- Execution: delivery and governance

- Architecture: technical depth and fit

- Value: commercials, risk, and relationship quality

Because software projects also fail on operational realities, the scorecard explicitly calls out quality, security, and team continuity as first-class criteria rather than footnotes.

Who it is for:

- US-based mid-market and enterprise teams commissioning business-critical builds or modernization work

- Buying committees that need an auditable, defensible selection process

- Teams that want to compare vendors apples to apples, then carry the evaluation into contracting and governance

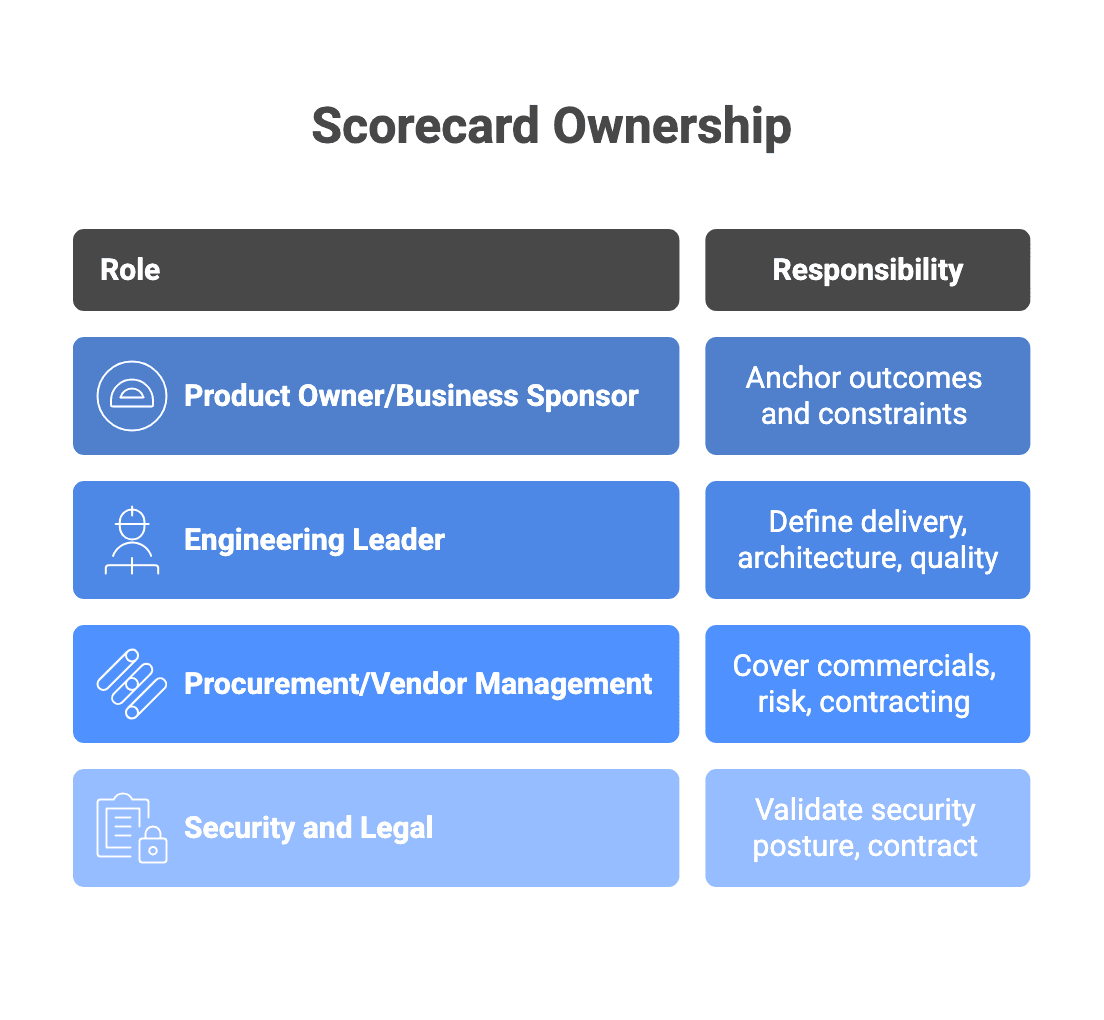

Who should own it:

- A product owner or business sponsor to anchor outcomes and constraints

- An engineering leader to define delivery, architecture, and quality criteria

- Procurement or vendor management to cover commercials, risk, and contracting structure

- Security and legal to validate security posture and contract requirements

Pick one evaluation owner. That person manages the template, runs scoring calibration, consolidates inputs, and keeps the process consistent.

Timing matters. Introduce the scorecard early, before an RFP (Request for Proposal) or discovery workshops, so you agree on criteria and weights before you see pitches. If you want the full end-to-end selection context, start with the guide to choosing a custom software development partner, then return here for the operational rubric.

Before you contact vendors again, assign ownership and run a quick calibration using a sample vendor so everyone applies the scale the same way.

The vendor evaluation scorecard template

You can implement this template in Google Sheets or Excel in about 15 minutes.

Step 1: Create the columns

Create these columns in your spreadsheet:

- Vendor name

- Criterion group

- Criterion

- What this measures

- Evidence to request

- Score (1 to 5)

- Weight

- Confidence (high, medium, low)

- Notes and risks

- Disqualifier (yes or no)

Tip: Keep the rubric stable across vendors. If you change criteria midstream, you will lose comparability.
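If you plan to export the sheet (for example, to CSV) and check it with a script, a minimal Python sketch of one row could look like the following. The class and field names are hypothetical and simply mirror the columns listed above; the validation rules assume the 1 to 5 scale and the high, medium, and low confidence values described in this guide.

```python
from dataclasses import dataclass

# Allowed values for the Confidence column (assumed: high, medium, low)
CONFIDENCE_LEVELS = {"high", "medium", "low"}


@dataclass
class ScorecardRow:
    """One spreadsheet row: a single criterion scored for a single vendor (hypothetical schema)."""
    vendor_name: str
    criterion_group: str
    criterion: str
    what_this_measures: str
    evidence_to_request: str
    score: int          # 1 to 5, using the anchored scale
    weight: float       # relative weight agreed before scoring
    confidence: str     # "high", "medium", or "low"
    notes_and_risks: str = ""
    disqualifier: bool = False

    def __post_init__(self) -> None:
        # Reject values a spreadsheet would otherwise accept silently
        if not 1 <= self.score <= 5:
            raise ValueError(f"score must be 1 to 5, got {self.score}")
        if self.weight < 0:
            raise ValueError("weight must be non-negative")
        if self.confidence not in CONFIDENCE_LEVELS:
            raise ValueError(f"unknown confidence level: {self.confidence!r}")


row = ScorecardRow(
    vendor_name="Vendor A",
    criterion_group="Quality and reliability",
    criterion="Test strategy",
    what_this_measures="Testing approach tied to acceptance criteria",
    evidence_to_request="Test plan, example test reports, definition of done",
    score=4,
    weight=0.10,
    confidence="medium",
)
```

Catching bad values at load time is the scripted equivalent of keeping the rubric stable: a score of 6 or a confidence of "maybe" fails loudly instead of quietly skewing totals.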

Step 2: Use a simple 1 to 5 scale with anchors

Use descriptive anchors so evaluators do not invent their own meanings.

- 1 (Unacceptable): missing, risky, or non-compliant with your minimum bar

- 2 (Weak): exists in name, inconsistent, or not evidenced

- 3 (Adequate): meets baseline expectations with typical gaps

- 4 (Strong): well defined, evidenced, and reliable in practice

- 5 (Leading): consistently strong, clearly evidenced, and likely to scale with complexity

For critical criteria, write brief “what a 1, 3, and 5 look like” notes directly in your sheet so scoring stays consistent.

Step 3: Begin with a starter rubric you can tailor

Below is a starter set of rows. It is intentionally compact. Add more only when it improves decision clarity.

| Criterion group | Criterion | What good looks like | Evidence to request |
| --- | --- | --- | --- |
| Delivery execution and governance | Planning and estimation discipline | Clear approach to backlog grooming, sprint planning, and handling uncertainty without hiding it | Sample delivery plan, sprint plan, estimation approach, examples of how scope changes were managed |
| Delivery execution and governance | Progress reporting and transparency | Regular reporting that surfaces risks early, with clear escalation paths and governance forums | Status report template, sample sprint report, risk register, governance cadence and attendees |
| Technical capability and architecture fit | Architecture approach and fit | Can explain trade-offs and align architecture to your stack, constraints, and operational capabilities | Anonymized architecture diagram, decision records, integration approach |
| Technical capability and architecture fit | Relevant experience | Demonstrated experience in similar domains or platforms, with evidence beyond marketing | Case studies with comparable scale, reference context, proposed approach for your scenario |
| Quality and reliability | Test strategy | Documented testing approach across unit, integration, and end-to-end testing, tied to acceptance criteria | Test plan or QA strategy, example test reports, definition of done |
| Quality and reliability | CI/CD discipline | Continuous Integration and Continuous Delivery (CI/CD) pipeline with automated checks to prevent regressions | Pipeline overview, release workflow, rollback strategy, defect triage process |
| Security and compliance readiness | Secure development practices | Security built into discovery and delivery | Secure software development lifecycle (SDLC) description, access control approach, vulnerability management, incident response overview |
| Security and compliance readiness | Data protection and access control | Clear handling of sensitive data, least-privilege access, and secrets management | Security questionnaire responses, access control model, data handling practices |
| Team structure and continuity | Proposed team and seniority mix | The staffed team matches complexity, and the vendor can protect continuity | Team bios for proposed delivery team, role definitions, backfill plan |
| Team structure and continuity | Knowledge management | Documentation and knowledge sharing reduce dependency on single individuals | Documentation examples, runbook outline, onboarding plan, ownership model |
| Commercials and contract realities | Pricing model fit | Commercial model matches scope certainty and risk appetite | Pricing structure, assumptions, change control process, rate transparency |
| Commercials and contract realities | IP and exit readiness | Clear IP ownership and a practical handover path to reduce lock-in | Contract term summary, IP approach, transition plan, documentation commitments |

Add a summary area that includes:

- Total weighted score per vendor

- Two to three strengths

- Two to three risks

- Recommended action: advance, hold, or drop

Want to Implement the Scorecard?

This template gives you a ready-to-run scorecard (with demo data) to align on criteria, weights, and evidence so your vendor decision holds up before pitches shape the narrative.

How to use the scorecard in a real evaluation cycle

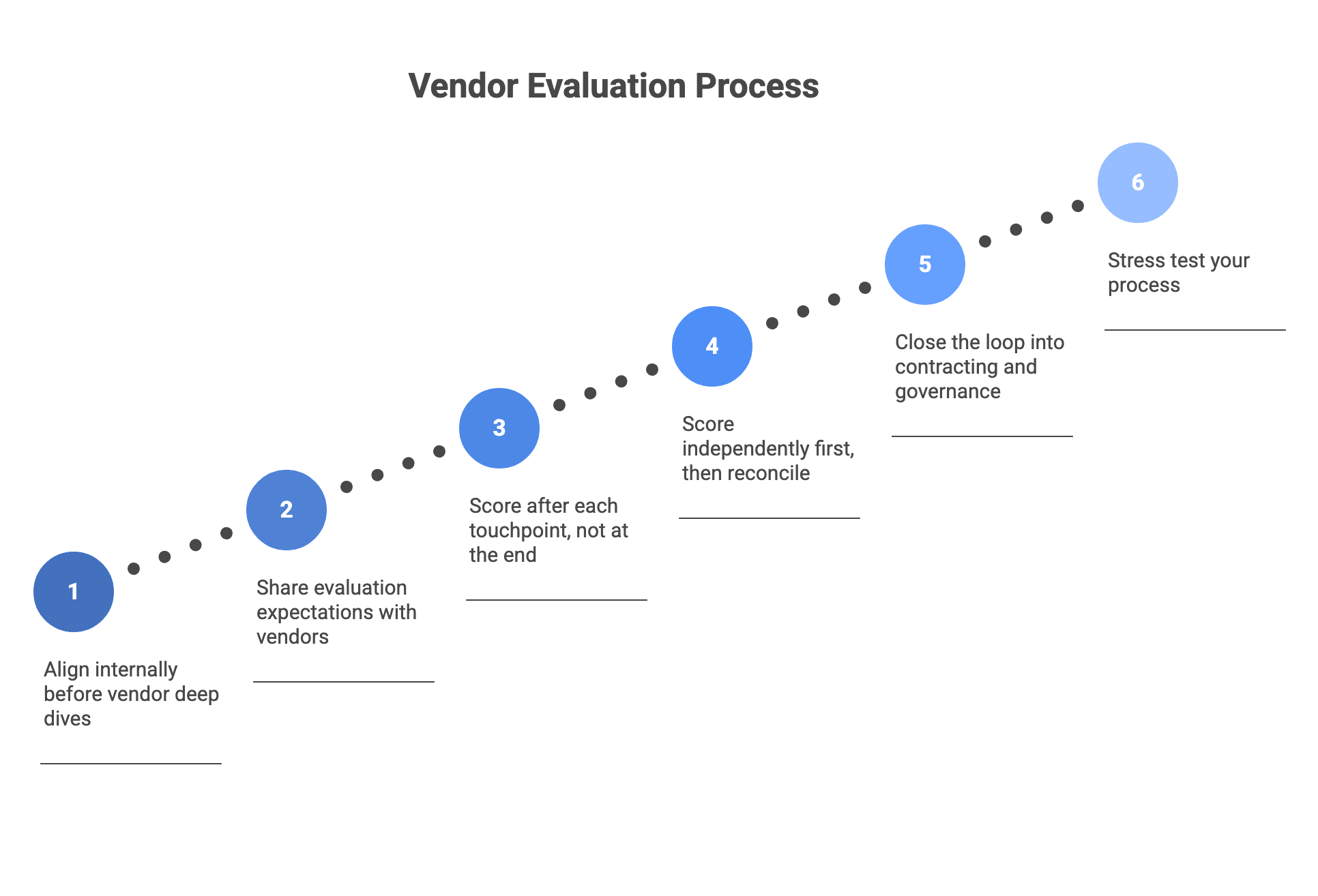

Treat the scorecard as the backbone of the process.

- Align internally before vendor deep dives

Meet with product, engineering, security, procurement, and the business sponsor. Confirm outcomes, constraints, and risk appetite. Tailor criteria and set weights now.

- Share evaluation expectations with vendors

Tell vendors what you will score and what evidence you need. This improves proposal quality and reduces generic marketing responses. If you run an RFP, structure it so each question maps to a scorecard field.

- Score after each touchpoint

After the screening call, technical deep dive, and governance session, score only the relevant sections while details are fresh. Add notes about what evidence drove the score.

- Score independently first, then reconcile

Have each evaluator score privately. Then consolidate and discuss the biggest deltas. Focus discussion on evidence.

- Close the loop into contracting and governance

Use the scorecard results to decide what needs to be reinforced in your Statement of Work (SOW), Master Services Agreement (MSA), and ongoing ceremonies. If security is uncertain, require stronger validation steps. If continuity is a risk, negotiate staffing protections.
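For the "score independently first, then reconcile" step, a small script can surface the biggest deltas to put on the reconciliation agenda. This is a sketch under assumptions: the evaluator names, criteria, and scores are made up, and "delta" is taken to mean the spread between the highest and lowest private score for a criterion.

```python
# Hypothetical private scores: evaluator -> {criterion: score on the 1-5 scale}
scores_by_evaluator = {
    "product": {"Test strategy": 4, "Pricing model fit": 3, "Architecture approach and fit": 5},
    "engineering": {"Test strategy": 2, "Pricing model fit": 3, "Architecture approach and fit": 4},
    "security": {"Test strategy": 3, "Pricing model fit": 4, "Architecture approach and fit": 4},
}


def biggest_deltas(scores, top_n=3):
    """Return (criterion, spread, mean) for the criteria evaluators disagree on most."""
    criteria = {c for per_evaluator in scores.values() for c in per_evaluator}
    summary = []
    for criterion in criteria:
        # Only compare evaluators who actually scored this criterion
        values = [s[criterion] for s in scores.values() if criterion in s]
        spread = max(values) - min(values)
        summary.append((criterion, spread, round(sum(values) / len(values), 2)))
    # Largest spread first; ties broken alphabetically so the agenda order is stable
    summary.sort(key=lambda item: (-item[1], item[0]))
    return summary[:top_n]


for criterion, spread, avg in biggest_deltas(scores_by_evaluator):
    print(f"{criterion}: spread {spread}, mean {avg}")
```

Spread is a deliberately simple measure. A criterion where one evaluator gave a 2 and another a 4 is worth discussing even if the mean looks unremarkable.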

A quick way to stress-test your process is to run two vendors in parallel through the same steps and see whether the scorecard makes trade-offs clearer or exposes missing criteria.

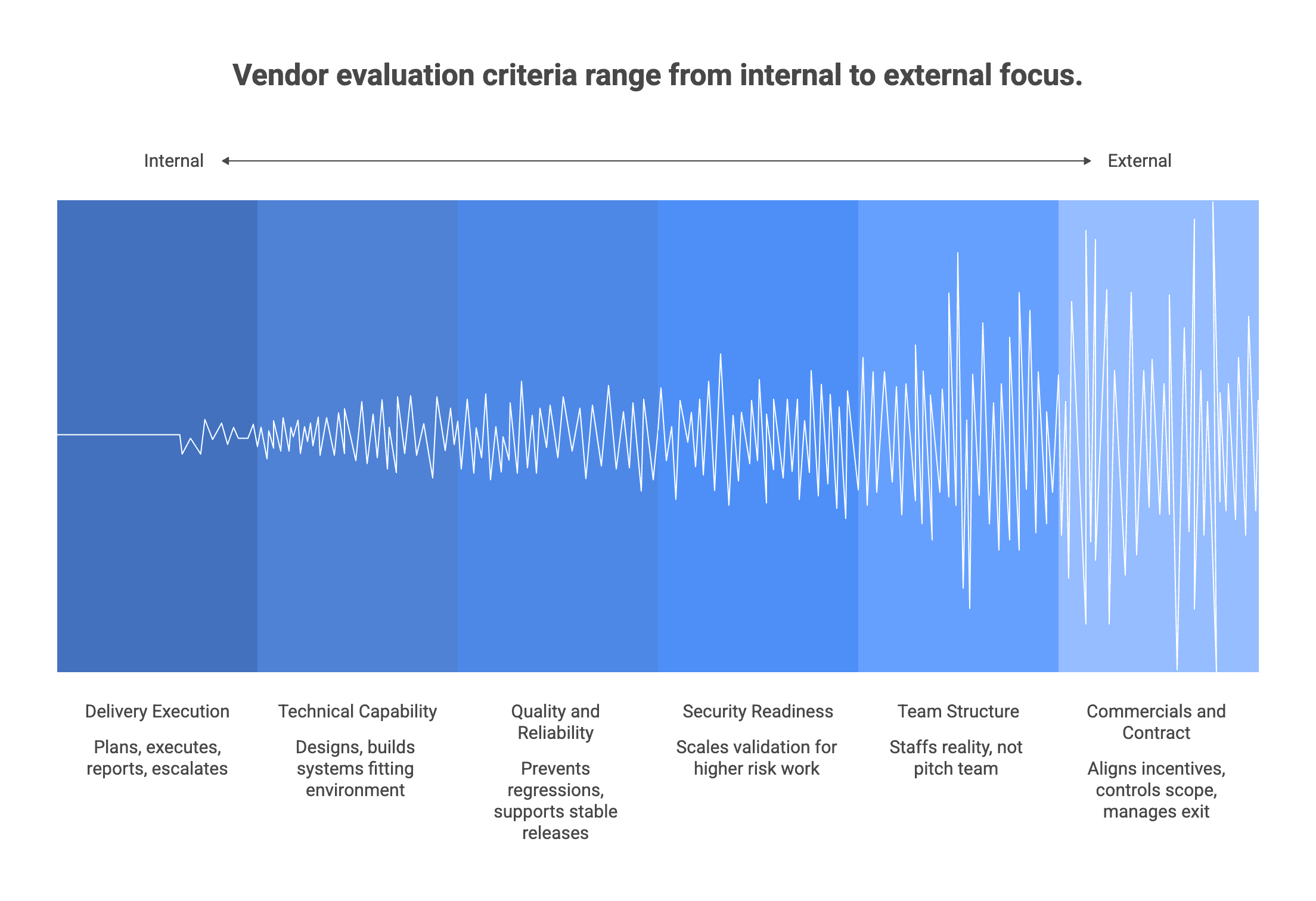

The scorecard fields (criteria categories + what good looks like)

Use these categories as your spine. Keep sub-criteria specific, observable, and tied to artifacts.

Delivery execution and governance

You are scoring how the vendor plans, executes, reports, and escalates.

What to look for:

- Clear delivery model (Scrum, Kanban, or hybrid) and when it changes

- Backlog grooming, sprint planning, retrospectives, and acceptance criteria discipline

- Progress reporting that includes risks

- Defined escalation paths and governance forums

Evidence artifacts:

- Delivery plan, status reports, sprint reports, risk register, RACI, meeting cadence

Technical capability and architecture fit

You are scoring whether the vendor can design and build systems that fit your environment.

What to look for:

- Ability to explain trade offs and make architecture decisions explicit

- Comfort with your stack, integrations, and operational constraints

- Evidence of maintainable patterns instead of trendy frameworks

Evidence artifacts:

- Architecture diagram, integration approach, decision records, relevant work samples

Quality and reliability

You are scoring whether the vendor prevents regressions and supports stable releases.

What to look for:

- Test strategy across levels

- Automated testing integrated into CI/CD

- Release discipline, rollback planning, defect triage

- Production support readiness, including service level objectives (SLOs) where relevant

Evidence artifacts:

- QA strategy, test plan, sample test reports, pipeline overview, runbook outline

Security and compliance readiness (right-sized)

You are scoring baseline security maturity and the ability to scale validation for higher risk work.

What to look for:

- Secure development lifecycle practices

- Dependency and vulnerability management

- Access control and secrets management

- Incident response approach and transparency

Evidence artifacts:

- Security questionnaire responses, policy summaries, incident response overview, access control model

- References to a SOC 2 (System and Organization Controls 2) report or ISO/IEC 27001 (the international standard for information security management systems) certification can be useful signals, but they should not replace concrete process evidence.

Team structure and continuity

You are scoring the reality of staffing.

What to look for:

- Proposed roles and seniority mix match complexity

- Clear backfill and continuity plan

- Documentation and knowledge distribution to avoid single points of failure

Evidence artifacts:

- Proposed team bios, role descriptions, onboarding plan, knowledge management practices

Commercials and contract realities

You are scoring incentive alignment, scope control, and exit risk.

What to look for:

- Pricing model matches scope certainty

- Transparent assumptions and change control

- IP ownership clarity and exit readiness

- Contract terms that support governance rather than only legal protection

Evidence artifacts:

- Pricing sheet and assumptions, change control process, draft contract term summaries, transition plan

If a criterion cannot be scored with evidence, record a lower confidence level and make it a follow up item rather than guessing.

Scoring guidance (weights, disqualifiers, and confidence levels)

Weights should reflect your project’s risk profile.

- For a long-lived, business-critical platform, weights usually tilt toward architecture fit, quality discipline, security readiness, and continuity.

- For a time-boxed prototype, you may weight speed, collaboration, and commercial flexibility higher, while keeping minimum quality and security gates.

Start by weighting at the category level, then refine within categories. The important rule is to agree on weights before scoring vendors.
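Category-first weighting can be expressed as a two-level calculation: agree on category weights, then split each category's weight across its criteria. The sketch below assumes the starter rubric's criteria and uses illustrative numbers, not recommended weights; the function and variable names are hypothetical.

```python
# Hypothetical category weights, agreed before any vendor is scored (sum to 1.0)
category_weights = {
    "Delivery execution and governance": 0.25,
    "Technical capability and architecture fit": 0.25,
    "Quality and reliability": 0.20,
    "Security and compliance readiness": 0.15,
    "Team structure and continuity": 0.10,
    "Commercials and contract realities": 0.05,
}

# Relative importance of criteria inside each category (any positive numbers)
criterion_shares = {
    "Delivery execution and governance": {
        "Planning and estimation discipline": 1,
        "Progress reporting and transparency": 1,
    },
    "Technical capability and architecture fit": {
        "Architecture approach and fit": 2,
        "Relevant experience": 1,
    },
    "Quality and reliability": {"Test strategy": 2, "CI/CD discipline": 1},
    "Security and compliance readiness": {
        "Secure development practices": 1,
        "Data protection and access control": 1,
    },
    "Team structure and continuity": {
        "Proposed team and seniority mix": 1,
        "Knowledge management": 1,
    },
    "Commercials and contract realities": {
        "Pricing model fit": 1,
        "IP and exit readiness": 1,
    },
}


def effective_weights(categories, shares):
    """Split each category weight across its criteria in proportion to their shares."""
    total = sum(categories.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"category weights sum to {total}, expected 1.0")
    weights = {}
    for category, category_weight in categories.items():
        within = shares[category]
        within_total = sum(within.values())
        for criterion, share in within.items():
            # Assumes criterion names are unique across categories
            weights[criterion] = category_weight * share / within_total
    return weights


weights = effective_weights(category_weights, criterion_shares)
for criterion, weight in weights.items():
    print(f"{criterion}: {weight:.3f}")
```

Because the committee only debates six category numbers and a few relative shares, it is easier to agree on weights before scoring than if every criterion were weighted from scratch.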

Disqualifiers protect you from being seduced by a high total score that hides a critical gap. Common disqualifiers include:

- Cannot meet a mandatory regulatory or data handling requirement

- Refuses to accept required IP or confidentiality terms, such as a Non-Disclosure Agreement (NDA)

- No meaningful test strategy for production work

- Materially negative references on transparency or delivery behavior
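One way to make disqualifiers override totals is to filter disqualified vendors out before ranking, so a high score cannot compensate for a critical gap. The vendor names, totals, and data shape here are hypothetical.

```python
# Hypothetical results: weighted total plus any disqualifiers each vendor triggered
results = {
    "Vendor A": {
        "weighted_total": 4.3,
        "disqualifiers": ["No meaningful test strategy for production work"],
    },
    "Vendor B": {"weighted_total": 3.8, "disqualifiers": []},
    "Vendor C": {"weighted_total": 3.1, "disqualifiers": []},
}


def rank_vendors(vendor_results):
    """Remove disqualified vendors first, then rank the rest by weighted total."""
    dropped = {
        name: r["disqualifiers"] for name, r in vendor_results.items() if r["disqualifiers"]
    }
    eligible = {name: r for name, r in vendor_results.items() if not r["disqualifiers"]}
    ranking = sorted(eligible, key=lambda name: eligible[name]["weighted_total"], reverse=True)
    return ranking, dropped


ranking, dropped = rank_vendors(results)
print("Ranking:", ranking)
print("Dropped:", dropped)
```

In this example Vendor A has the highest total but never appears in the ranking, which is exactly the behavior a disqualifier is meant to enforce. Keeping the reasons in `dropped` also gives you the documented rationale for the decision record.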

Use confidence levels to capture uncertainty. A vendor might score “4” on security based on documentation, but with medium confidence until security reviews the evidence or you complete deeper validation. Confidence makes it easier to decide what to verify next instead of treating all scores as equally proven.
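Confidence levels can also drive a simple verification queue. This sketch assumes rows shaped as (vendor, criterion, score, confidence) and treats medium and low confidence as "verify next"; the ordering rule is one reasonable choice, not a standard, and all names are hypothetical.

```python
# Hypothetical scored rows: (vendor, criterion, score 1-5, confidence)
rows = [
    ("Vendor B", "Secure development practices", 4, "medium"),
    ("Vendor B", "Test strategy", 4, "high"),
    ("Vendor B", "Proposed team and seniority mix", 3, "low"),
    ("Vendor C", "Pricing model fit", 5, "low"),
]

# Rank confidence so the least-proven scores sort first
CONFIDENCE_ORDER = {"low": 0, "medium": 1, "high": 2}


def verification_queue(scored_rows, threshold="medium"):
    """Return rows at or below the threshold confidence, least proven first."""
    limit = CONFIDENCE_ORDER[threshold]
    pending = [row for row in scored_rows if CONFIDENCE_ORDER[row[3]] <= limit]
    # Within a confidence level, check higher scores first, since an unverified
    # high score is the one most likely to inflate a vendor's total
    return sorted(pending, key=lambda row: (CONFIDENCE_ORDER[row[3]], -row[2]))


for vendor, criterion, score, confidence in verification_queue(rows):
    print(f"Verify next: {vendor} / {criterion} (score {score}, {confidence} confidence)")
```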

Keep the math simple: weighted sum by criterion, rolled into category totals, rolled into an overall score. Then add narrative: top risks, mitigations, and the recommended action.
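The roll-up described above (criterion, then category, then overall) fits in a few lines. This is a minimal sketch with hypothetical rows; it assumes each row already carries its effective weight and divides by the total weight so the overall score stays on the 1 to 5 scale.

```python
from collections import defaultdict

# Hypothetical rows for one vendor: (category, criterion, score 1-5, effective weight)
vendor_rows = [
    ("Delivery execution and governance", "Planning and estimation discipline", 4, 0.15),
    ("Delivery execution and governance", "Progress reporting and transparency", 3, 0.10),
    ("Quality and reliability", "Test strategy", 5, 0.20),
    ("Quality and reliability", "CI/CD discipline", 4, 0.15),
    ("Commercials and contract realities", "Pricing model fit", 3, 0.25),
    ("Commercials and contract realities", "IP and exit readiness", 2, 0.15),
]


def roll_up(rows):
    """Return per-category weighted contributions and the overall score on the 1-5 scale."""
    category_totals = defaultdict(float)
    for category, _criterion, score, weight in rows:
        # Weighted sum by criterion, accumulated into its category
        category_totals[category] += score * weight
    total_weight = sum(weight for *_, weight in rows)
    # Dividing by the total weight keeps the result on the 1-5 scale
    # even if the weights do not sum exactly to 1.0
    overall = sum(category_totals.values()) / total_weight
    return dict(category_totals), overall


category_totals, overall = roll_up(vendor_rows)
for category, contribution in category_totals.items():
    print(f"{category}: {contribution:.2f}")
print(f"Overall weighted score: {overall:.2f}")
```

The category contributions show where the overall number comes from, which makes the narrative part (top risks, mitigations, recommended action) easier to tie back to the math.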

If you need to decide quickly, focus on whether the top two vendors differ on the highest weighted categories. That is where the decision usually lives.
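To check where the decision lives, you can compare two finalists on only the highest-weighted categories. The weights and scores below are hypothetical, and `top_k` and `min_gap` are illustrative thresholds, not recommended values.

```python
# Hypothetical category weights and per-category scores (1-5) for two finalists
category_weights = {
    "Technical capability and architecture fit": 0.30,
    "Quality and reliability": 0.25,
    "Delivery execution and governance": 0.20,
    "Security and compliance readiness": 0.15,
    "Commercials and contract realities": 0.10,
}
finalists = {
    "Vendor B": {
        "Technical capability and architecture fit": 4.5,
        "Quality and reliability": 3.0,
        "Delivery execution and governance": 4.0,
        "Security and compliance readiness": 3.5,
        "Commercials and contract realities": 4.0,
    },
    "Vendor C": {
        "Technical capability and architecture fit": 3.5,
        "Quality and reliability": 4.0,
        "Delivery execution and governance": 4.0,
        "Security and compliance readiness": 3.0,
        "Commercials and contract realities": 5.0,
    },
}


def decisive_differences(weights, a, b, top_k=3, min_gap=0.5):
    """Return (category, a - b) for top-weighted categories where the gap is at least min_gap."""
    heaviest = sorted(weights, key=weights.get, reverse=True)[:top_k]
    return [(c, a[c] - b[c]) for c in heaviest if abs(a[c] - b[c]) >= min_gap]


for category, gap in decisive_differences(
    category_weights, finalists["Vendor B"], finalists["Vendor C"]
):
    print(f"{category}: gap {gap:+.1f} (positive favors Vendor B)")
```

Here the two finalists split the two heaviest categories, so the discussion should focus on architecture fit versus quality. The large gap on commercials is ignored because that category carries the least weight.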

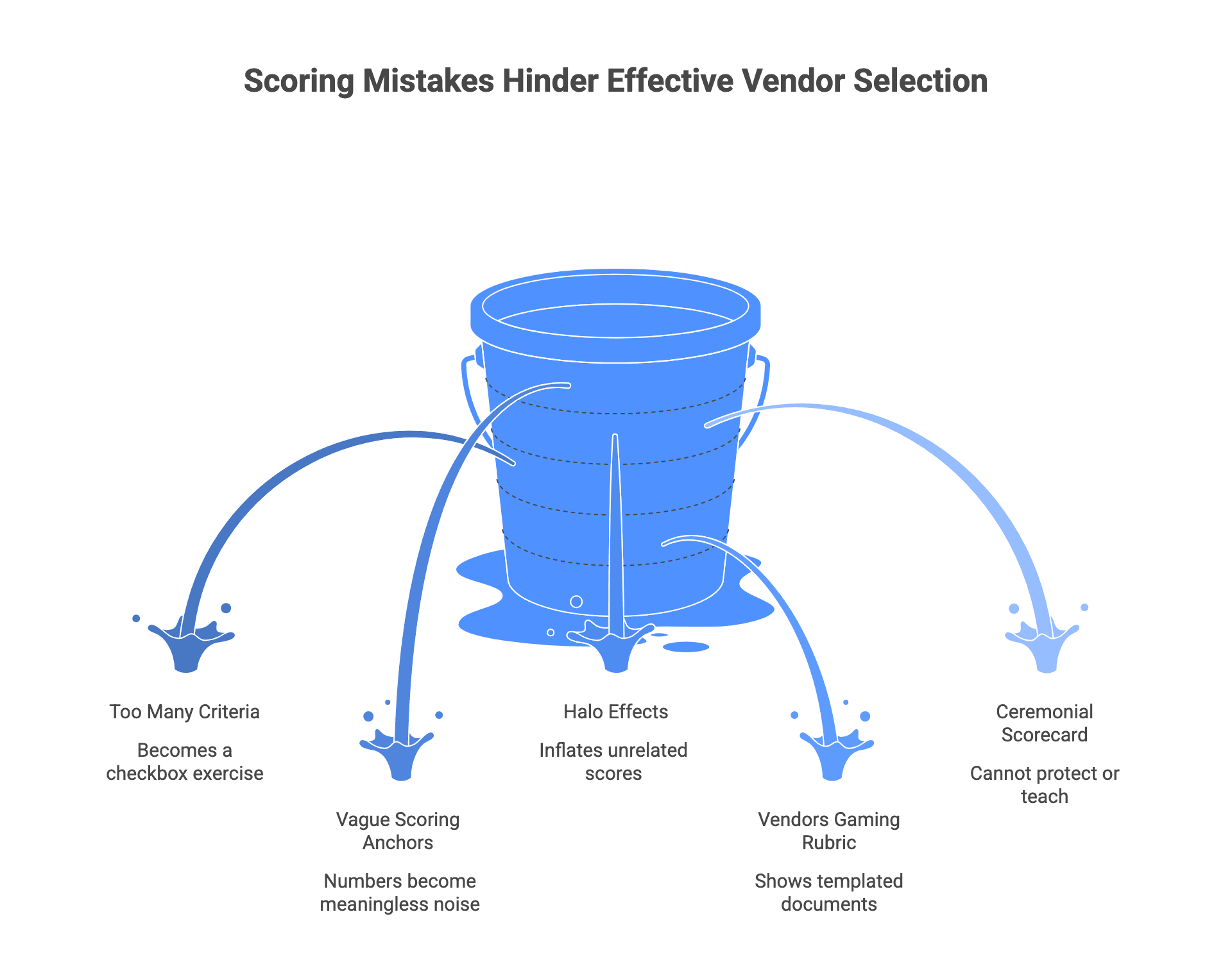

Common scoring mistakes (and how to fix them)

Mistake: Too many criteria

A 200-line scorecard becomes a checkbox exercise. Fix it by pruning to the criteria that truly drive outcomes, and keep specialist checklists separate.

Mistake: Vague scoring anchors

If a “4” means different things to different evaluators, the numbers are noise. Fix it by defining anchors for critical fields and running a quick calibration.

Mistake: Halo effects and brand bias

A polished demo can inflate unrelated scores. Fix it by scoring independently, requiring evidence for high scores, and triangulating with artifacts and references.

Mistake: Vendors gaming the rubric

Vendors may show templated documents or present an A team that will not staff your project. Fix it by meeting the proposed delivery team, requesting live walkthroughs where appropriate, and validating with small exercises.

Mistake: Treating the scorecard as ceremonial

If you fill it out after deciding, it cannot protect you or teach you. Fix it by making scorecard completion a gate before finalist selection and using it to drive contract and governance decisions.

A healthy sign is when the scorecard changes your shortlist order. That usually means it surfaced risks that a pitch would have hidden.

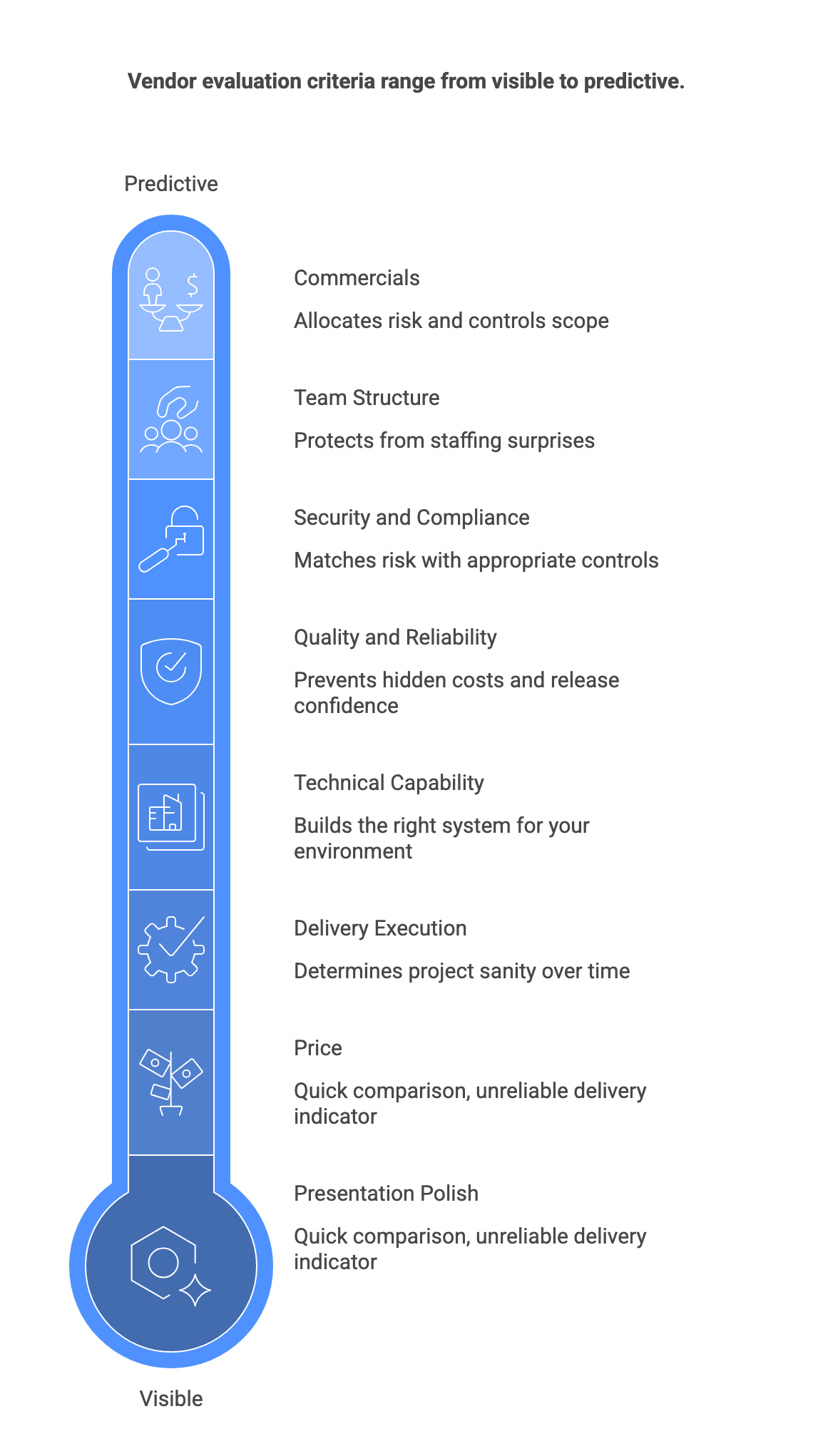

What to evaluate (criteria that actually predict outcomes)

The most predictive criteria are rarely the most visible in early sales conversations. Presentation polish and price can be compared quickly, but they do not reliably indicate delivery success, maintainability, or risk control.

Focus on criteria that reflect:

- Team competence and seniority where complexity demands it

- Communication and governance quality

- Ability to align technical work to business outcomes

- Disciplined engineering practices that prevent long-term cost blowups

Expect trade-offs. A vendor optimized for speed might accept higher architectural risk. A vendor optimized for reliability might be slower but safer for core systems. Your scorecard makes those trade-offs explicit and helps you decide intentionally.

Also separate lagging from leading indicators:

- Lagging: case studies, client satisfaction stories, portfolio claims

- Leading: how the vendor behaves in discovery, how they handle uncertainty, how they respond to pushback, and the quality of evidence they provide

Tailor sub-criteria to your project type. Data-heavy systems may need more on data architecture. Consumer mobile apps may need explicit UX and performance fields. Keep the spine consistent so you can compare vendors fairly.

If you cannot explain why a criterion predicts success for your project, it probably does not belong in the scorecard.

Delivery execution and governance

This category often determines whether a project stays sane over time.

Score the vendor on:

- How they run discovery and requirements

- Backlog grooming and sprint planning discipline

- Progress reporting, including what happens when a milestone slips

- Risk and issue management, including escalation

- Governance forums and decision rights clarity

Verification steps:

- Ask for a sample status report and a sample risk register, then ask how they were used in a real project.

- Ask how change control works, and what happens when priorities shift mid-sprint.

- In references, probe how transparent the vendor was when delivery got hard.

Red flags:

- Cannot explain delivery mechanics beyond generic “we do Agile”

- Reporting focuses only on what is done, not on risks and blockers

- Escalation depends on personal relationships rather than defined paths

If governance is weak, your team will end up carrying hidden program management load. Score it accordingly.

Technical capability and architecture fit

Architecture fit is about building the right system for your environment, instead of building a system that looks impressive in a demo.

Score the vendor on:

- Ability to articulate architecture trade-offs

- Integration experience with your core platforms and constraints

- Code quality practices and handling technical debt

- Approach to DevOps maturity, including infrastructure as code where relevant

Verification steps:

- Give a representative scenario and ask for a high-level architecture proposal, including trade-offs and risks.

- Ask for anonymized architecture diagrams and decision records from past work.

- Ask how they document architecture decisions and keep them current.

Red flags:

- Buzzwords without concrete examples

- One-size-fits-all architectures

- Unwillingness to discuss constraints, operational realities, or long-term maintenance

If the vendor cannot explain how their design will be operated, supported, and evolved, it is a fit risk even if they can build quickly.

Quality and reliability (testing, CI/CD, release discipline)

Quality is where hidden costs accumulate. It affects defect rates, release confidence, and how expensive change becomes.

Score the vendor on:

- Test strategy tied to acceptance criteria

- Automated testing maturity across unit, integration, and end-to-end tests

- CI/CD practices (Continuous Integration and Continuous Delivery) and gating

- Release management, rollback planning, and incident learning

- Production support readiness and response expectations

Verification steps:

- Ask for a QA strategy and examples of test reporting.

- Ask how CI/CD gates work and what happens when tests fail.

- Ask how defects are triaged and how the team prevents recurrence.

Red flags:

- Heavy reliance on manual testing for production systems

- No clear definition of done

- Releases that are manual, infrequent, or fragile

If you plan to ship regularly, this category should rarely be lightly weighted.

Security and compliance readiness (right-sized to your risk)

Security evaluation should match the risk of the system. A small internal tool still needs baseline controls. A regulated or data-sensitive system needs deeper due diligence.

Score the vendor on:

- Secure development lifecycle practices

- Access control and secrets management

- Vulnerability management and dependency hygiene

- Incident response readiness and transparency

Verification steps:

- Ask for a security questionnaire response plus a walkthrough of how security is embedded into delivery.

- Ask who has access to what environments and how access is granted and revoked.

- In references, ask how the vendor handled security concerns or incidents.

Red flags:

- Security framed only as a checkbox or a promise

- No clear incident response approach

- Unclear access control practices

Mentions of SOC 2 or ISO 27001 can help you orient, but the score should still be driven by concrete process evidence and how the vendor will work inside your constraints.

Team structure and continuity

This category protects you from staffing surprises.

Score the vendor on:

- Proposed team roles, seniority mix, and whether the team is dedicated

- Turnover and backfill approach

- Documentation and knowledge distribution

- Continuity protections for key roles

Verification steps:

- Meet the proposed delivery team.

- Ask how onboarding works for new engineers and how knowledge is captured.

- In references, ask how stable the team was and how transitions were handled.

Red flags:

- Generic bios not tied to the actual project

- Key knowledge concentrated in one person

- High rotation presented as normal

If continuity is a risk, negotiate it explicitly and set expectations early.

Commercials and contract realities

Commercial structure isn't just price. It is risk allocation, scope control, and your ability to exit if the relationship fails.

Score the vendor on:

- Fit of pricing model to scope certainty

- Transparency of assumptions and rates

- Change control and scope management

- IP ownership and portability

- Exit readiness and handover plan

Useful terms to align on:

- NDA: Non-Disclosure Agreement

- SOW: Statement of Work

- MSA: Master Services Agreement

Verification steps:

- Ask what is included and excluded, and how changes are priced.

- Ask how the vendor prevents lock-in through documentation and portability.

- Review exit provisions and what handover looks like in practice.

Red flags:

- Pricing that is opaque or packed with assumptions you cannot validate

- Rigid fixed price structures applied to highly uncertain scope

- Weak exit clauses or unclear IP ownership

A vendor that is easy to exit is often a vendor that is safer to start with.

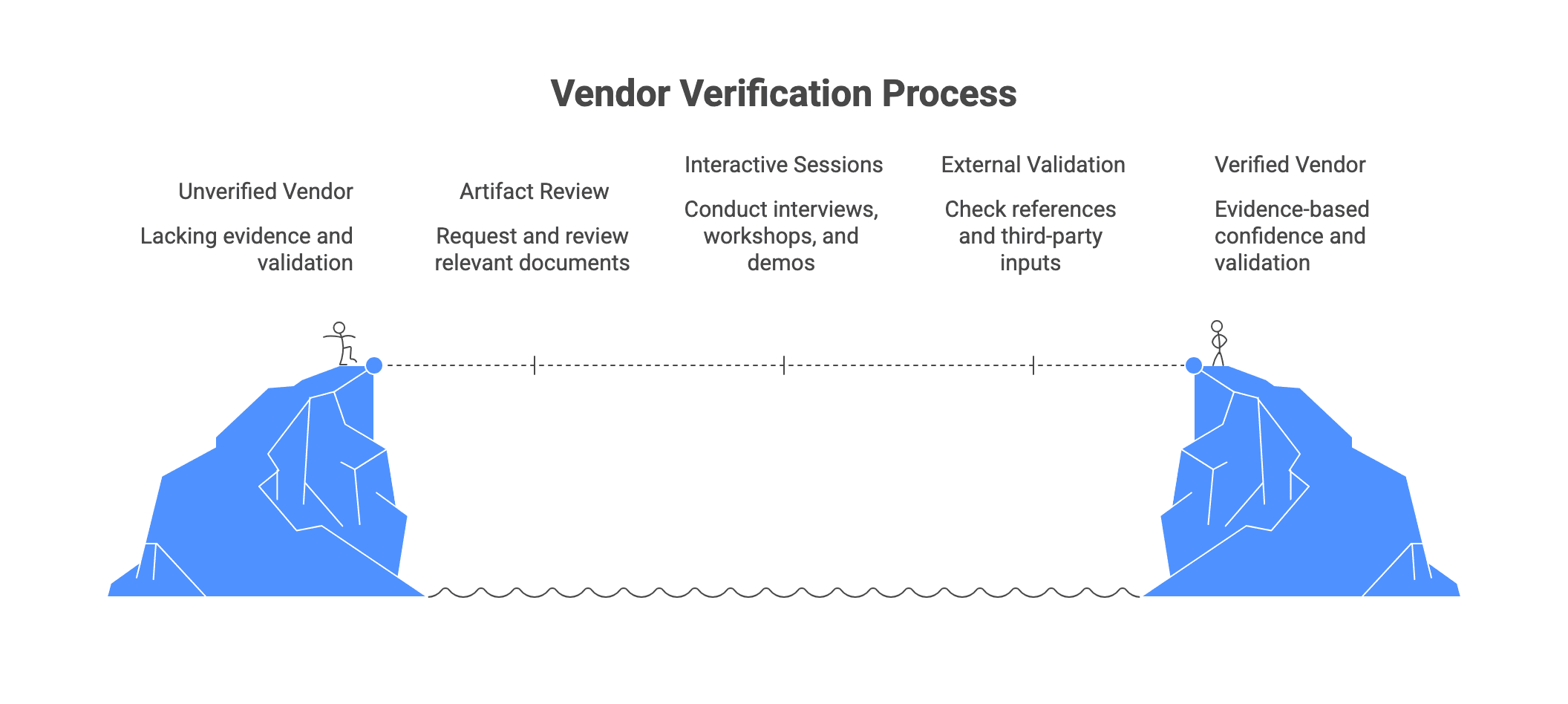

Practical verification steps (what to request, review, and test)

Scores should be grounded in evidence. The most efficient approach is to combine three layers:

- Artifact review (documents and samples)

- Interactive sessions (interviews, workshops, demos)

- External validation (references, third-party inputs where applicable)

Keep verification proportional. Use lighter checks to narrow the field, then invest deeper effort with finalists. For each scorecard category, pick two or three high-signal artifacts and one interaction that reveals how the vendor really works.

A simple way to keep the process manageable is to predefine your evidence requests and schedule them in the same order for every vendor. That reduces variability and makes comparisons fair.

Artifacts to request (before finalist selection)

Ask for a targeted set of artifacts that map directly to the scorecard. Avoid demanding exhaustive documentation from every vendor up front.

High-signal artifacts include:

- Company overview and relevant case studies: focus on similar domain, scale, and stack

- Delivery process and governance docs: process playbook, sample sprint backlog, reporting templates, risk and issue logs

- Architecture and design samples: anonymized architecture diagrams and decision records

- Quality and testing evidence: QA strategy, test plans, example test reports, CI/CD overview

- Security summaries: security practices overview and baseline policies, plus a filled security questionnaire when relevant

- Team bios and role descriptions: for the actual proposed team, not generic profiles

What “good” looks like is clarity and relevance. Documents should connect to the scenarios you care about and avoid generic boilerplate that says little about real practice.

If a vendor cannot share any tangible artifacts, score confidence low and treat it as a material risk.

Reference checks that actually de-risk the decision

Reference checks should not be a formality. Use them as a software development vendor due diligence checklist that probes delivery reality.

A short reference check script:

- What was the project context (scope, duration, team size, criticality)?

- How reliable was delivery against commitments and how were changes handled?

- How transparent was the vendor about risks, blockers, and slips?

- How did governance work (status cadence, escalation, decision rights)?

- What did quality look like (defect trends, release stability, incident handling)?

- How stable was the team over time and how were transitions managed?

- If you expanded or reduced the relationship, what drove that decision?

- What would you insist on doing differently if you started again?

Interpret feedback in patterns. One negative comment is not always decisive. Repeated themes across references are. Triangulate reference feedback against what you saw in workshops and artifacts, and follow up on discrepancies.

If references consistently describe strong engineering but weak communication, score delivery governance accordingly and decide whether you can mitigate that risk internally.

Small validation exercises (workshops, pilot, or paid discovery)

When the project is high stakes, a small validation exercise can reveal what documents cannot.

Options that work well:

- Workshops (half-day to multi-day): discovery, architecture, or roadmap planning sessions that produce tangible outputs

- Paid discovery (time-boxed): structured exploration that results in a plan, architecture direction, and risk register

- Pilot build (short, scoped): a thin vertical slice or proof of concept that mimics real constraints

How to make these exercises decision useful:

- Time box them and define deliverables

- Use the scorecard as the evaluation lens

- Confirm the people on the exercise are the people who will staff the real work

- Set expectations for code quality, testing, and documentation that match the main engagement

Avoid misleading pilots. A demo built by a hand-picked team that will not be assigned later can create false confidence. Make staffing and quality expectations explicit.

After the exercise, update scores and confidence levels. The goal is to reduce uncertainty where it matters.

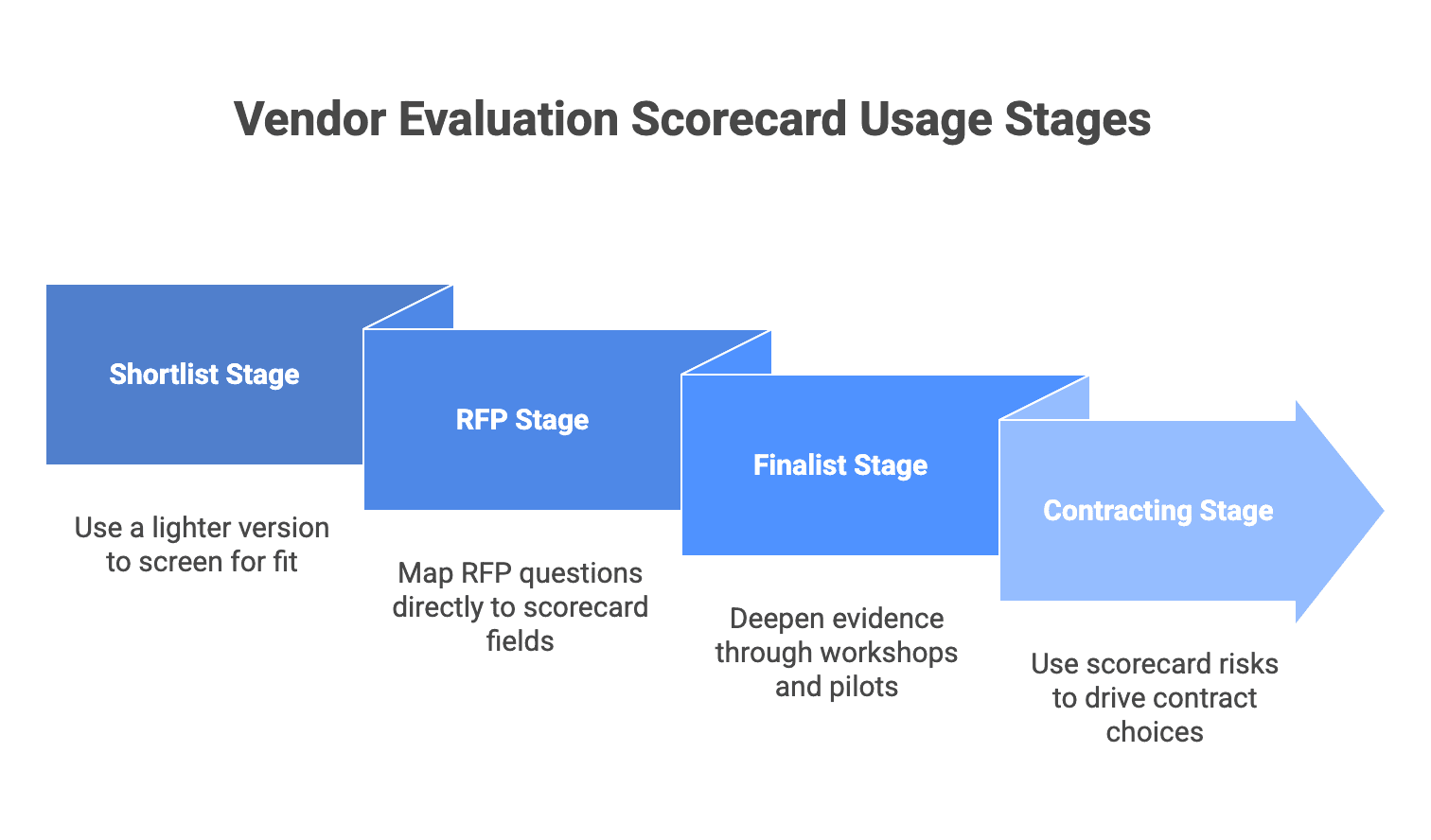

Where to use the scorecard: shortlist, RFP, finalist selection, and contracting

Use the scorecard across four stages, keeping criteria stable while evidence depth increases.

Shortlist stage

Use a lighter version to screen for fit and eliminate obvious mismatches. Keep scoring mostly qualitative and focus on disqualifiers.

RFP stage

Map RFP questions directly to scorecard fields so responses translate into scores cleanly. Score independently to reduce bias.

Finalist stage

Deepen evidence through workshops, reference checks, and a small pilot or paid discovery. Update scores and confidence based on what you observed.

Contracting stage

Use scorecard risks to drive contract and governance choices. If continuity is a risk, negotiate staffing protections. If security is uncertain, require stronger validation steps. If change control is unclear, define it tightly in the SOW.

A scorecard is most valuable when it influences what you negotiate and how you govern.

Conclusion

The fastest way to make this real is to copy the template into a spreadsheet, tailor weights to your risk profile, and run a parallel evaluation of two vendors. You will learn quickly which criteria are most diagnostic for your context and where you need clearer anchors or additional evidence requests.

When stakeholders disagree, use the scorecard to force the conversation back to evidence. Review the biggest scoring deltas, decide whether weights reflect true priorities, and document which risks you are accepting versus mitigating in the contract.