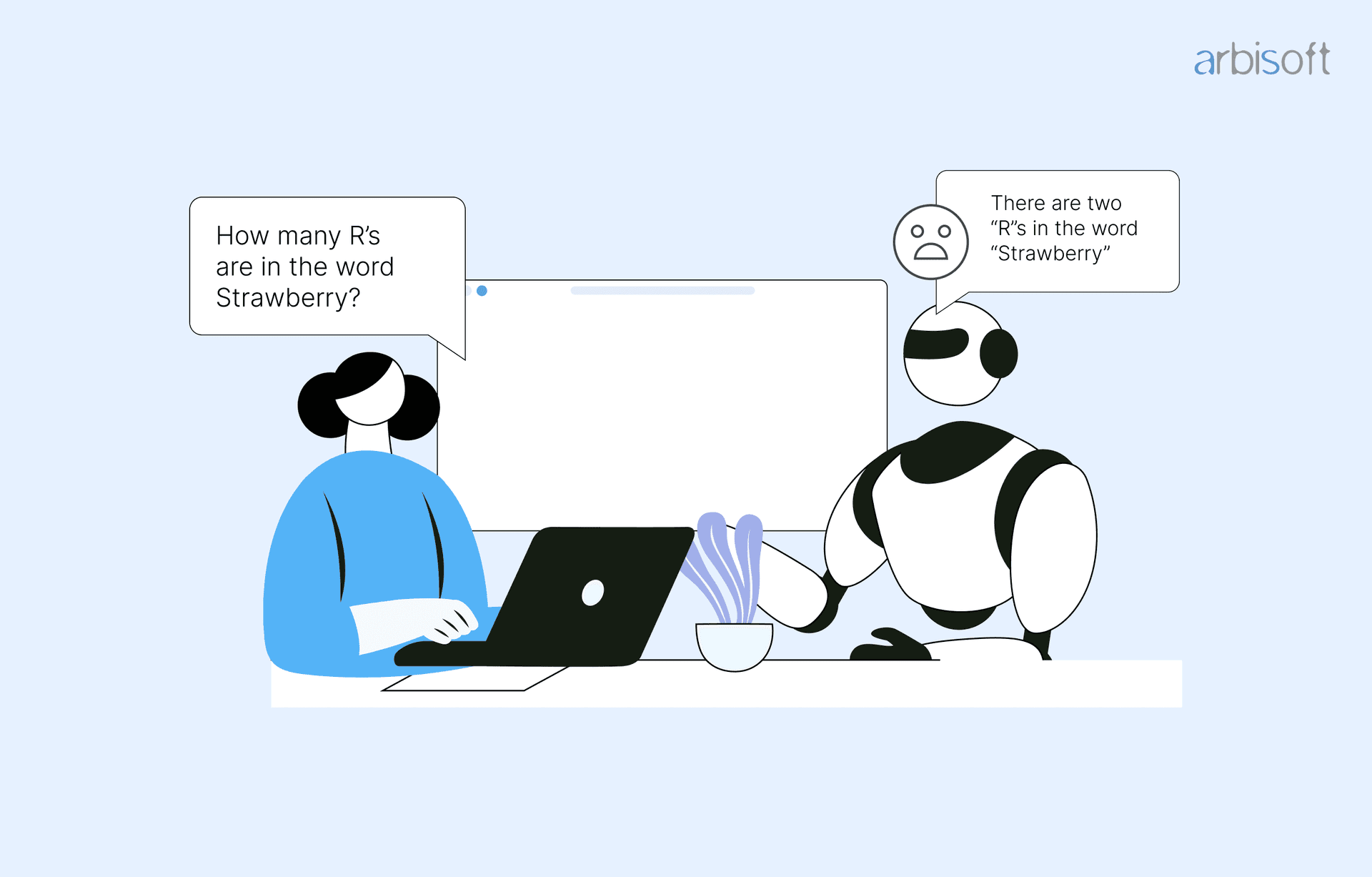

Why LLMs Can't Count the R's in 'Strawberry' & What It Teaches Us

Language models have become increasingly sophisticated, capable of generating human-quality text, translating languages, and even writing code, showcasing the expanding role of artificial intelligence solutions in diverse industries. Yet, despite their impressive abilities, these LLMs still stumble on surprisingly simple tasks. One such challenge that has baffled even the most advanced LLMs is the seemingly trivial task of counting the number of "r"s in the word "strawberry."

Imagine, for a moment, a supercomputer that can write a Shakespearean sonnet or solve complex mathematical equations. Yet, when presented with the simple task of counting the "r"s in a common English word, it stumbles and stutters, often producing incorrect results.

This seemingly absurd situation highlights a fundamental limitation of current LLMs: they do not process text letter by letter. While they excel at higher-level tasks like summarization and translation, they often struggle with finer details, such as counting individual characters.

This blog focuses on understanding why this error occurs and what it teaches us about the limitations of modern LLMs. Let’s dive in.

Understanding LLMs and Their Limitations

The impact of LLMs has been felt across natural language processing, creative writing, and beyond. To understand how LLMs approach problem-solving, it's essential to delve into their underlying mechanisms. Let's break down the process in simple terms.

The Transformer Architecture

Most modern LLMs are built on the transformer architecture, introduced in the paper "Attention Is All You Need" in 2017. This architecture is designed to capture long-range dependencies in sequences, making it particularly well-suited for natural language processing tasks. Organizations often rely on specialized software development services to implement and optimize these complex architectures.

The key components of the Transformer Architecture include:

Encoder-Decoder Architecture

The original Transformer consists of an encoder and a decoder. The encoder processes the input sequence, while the decoder generates the output sequence. Think about translating a sentence from English to French. The encoder would process the English sentence, understanding its meaning and structure. Then, the decoder would use this information to generate the equivalent French sentence. Many modern generative LLMs, such as the GPT family, use only the decoder stack, but they build on the same attention mechanisms described below.

Attention Mechanism

The attention mechanism allows the model to weigh the importance of different parts of the input sequence when generating each part of the output. This enables the model to capture long-range dependencies and context. Consider translating the sentence "The cat sat on the mat." into French. When generating the French word for "mat," the attention mechanism would weight "mat" most heavily, while nearby words such as "on" and "the" supply context, for example for choosing the correct preposition and article.
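To make the idea of "weighing importance" concrete, here is a minimal sketch of scaled dot-product attention, the formula from "Attention Is All You Need": softmax(Q K^T / sqrt(d)) V. It uses plain Python lists and hand-picked two-dimensional toy vectors, which is an assumption for illustration; real models learn high-dimensional queries, keys, and values.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    queries, keys, and values are lists of float vectors.
    Returns (outputs, weights): one output vector and one weight row per query.
    """
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        # Output = weighted average of the value vectors.
        out = [sum(wj * v[i] for wj, v in zip(w, values)) for i in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

# Toy example: one query that resembles the first key much more than the second.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
out, w = attention([[5.0, 0.0]], keys, values)
print([round(x, 3) for x in w[0]])  # [0.972, 0.028]
```

Each row of weights sums to 1, so the output is pulled toward the values whose keys best match the query.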

Self-Attention

Self-attention allows the model to relate different parts of the same input sequence to each other, which helps it understand the relationships between words and, through them, the context and meaning of the text. For example, in the sentence "The quick brown fox jumps over the lazy dog," self-attention would help the model understand that "quick" and "brown" both modify "fox," that "lazy" modifies "dog," and so on.

Positional Encoding

Attention on its own does not take word order into account, so positional encoding adds information about the position of each word in the input sequence. This matters because the meaning of "The dog bit the cat" is different from "The cat bit the dog," even though both sentences contain the same words.
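The original Transformer paper used fixed sinusoidal positional encodings that are added to the token embeddings; many later models use learned or rotary encodings instead. A minimal sketch of the sinusoidal version, using a small toy dimension:

```python
import math

def positional_encoding(num_positions, d_model):
    """Sinusoidal positional encodings from "Attention Is All You Need".

    PE[pos][2k]   = sin(pos / 10000 ** (2k / d_model))
    PE[pos][2k+1] = cos(pos / 10000 ** (2k / d_model))
    """
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share one frequency; higher k oscillates more slowly.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

pe = positional_encoding(num_positions=5, d_model=4)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0]
```

Because "dog" at position 1 ("The dog bit the cat") and "dog" at position 4 ("The cat bit the dog") receive different encodings, the model can tell the two sentences apart.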

Here are some more examples to illustrate these concepts:

- Machine Translation - The encoder processes the source language sentence, while the decoder generates the target language sentence. Attention helps the model focus on relevant parts of the source sentence when translating each word in the target sentence.

- Text Summarization - The encoder processes the input text, and the decoder generates a summary. Attention helps the model focus on the most important parts of the input text when generating the summary.

- Question Answering - The encoder processes the question and context, and the decoder generates the answer. Attention helps the model focus on relevant parts of the context when answering the question.

By understanding these components and how they work together, we can gain a deeper appreciation for the power and versatility of the Transformer architecture.

The Problem-Solving Process

When an LLM is presented with a problem, it follows these general steps.

- Tokenization - The input text is broken down into smaller units called tokens, which can be individual words or subwords.

- Embedding - Each token is represented as a numerical vector, capturing its semantic meaning.

- Encoding - The encoder processes the input sequence, using self-attention to capture dependencies and context.

- Decoding (for generative tasks) - The decoder generates the output sequence, using the encoded input and attention mechanisms to produce the desired text.
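The first two steps can be sketched in a few lines. This toy version assumes a word-level tokenizer and a randomly initialized embedding table (real LLMs use subword tokenizers and learned embeddings); the encoding and decoding steps are carried out by the trained Transformer layers and are not shown.

```python
import random

def build_vocab(texts):
    """Toy word-level vocabulary: one integer ID per distinct lowercase word."""
    vocab = {}
    for text in texts:
        for word in text.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab

def tokenize(text, vocab):
    # Step 1: text -> token IDs.
    return [vocab[word] for word in text.lower().split()]

def embed(token_ids, table):
    # Step 2: token IDs -> vectors, by looking up one row per ID.
    return [table[t] for t in token_ids]

vocab = build_vocab(["the cat sat on the mat"])
rng = random.Random(0)  # fixed seed so the toy table is reproducible
dim = 4
# In a real model this table is learned; here it is random for illustration.
table = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(len(vocab))]

ids = tokenize("The cat sat on the mat", vocab)
vectors = embed(ids, table)
print(ids)  # [0, 1, 2, 3, 0, 4]
```

Both occurrences of "the" map to the same ID and therefore the same vector; it is the encoding step, through self-attention, that makes their representations depend on context.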

Example - Question Answering

To illustrate how LLMs approach problem-solving, consider the task of question answering. When presented with a question and a context, an LLM will:

- Tokenize - Break down the question and context into tokens.

- Embed - Represent each token as a numerical vector.

- Encode - Process the question and context using the encoder.

- Decode - Generate an answer by iteratively predicting each word of the answer based on the encoded input and previously generated words.
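The decode step is a loop: predict a token, append it, repeat. The sketch below uses greedy selection (always the highest-scoring token), which is one of several decoding strategies alongside sampling and beam search. The `toy_model` function and its score table are hypothetical stand-ins for a trained LLM.

```python
def greedy_decode(next_token_scores, prompt, max_new_tokens=10, eos="<eos>"):
    """Generate tokens one at a time, always picking the highest-scoring next token.

    next_token_scores(tokens) stands in for a trained model: given the tokens so far,
    it returns a dict mapping candidate next tokens to scores.
    """
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)
        best = max(scores, key=scores.get)
        if best == eos:
            break
        tokens.append(best)  # the new token becomes part of the context
    return tokens[len(prompt):]

# Hypothetical toy "model": scores depend only on the last token.
TOY_TABLE = {
    "?": {"Paris": 0.9, "London": 0.1},
    "Paris": {"<eos>": 0.8, "is": 0.2},
}

def toy_model(tokens):
    return TOY_TABLE.get(tokens[-1], {"<eos>": 1.0})

answer = greedy_decode(toy_model, ["What", "is", "the", "capital", "of", "France", "?"])
print(answer)  # ['Paris']
```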

Now that we have a basic understanding of the mechanisms behind how LLMs work - let’s talk about the elephant in the room - ‘STRAWBERRY!’

The Case of "Strawberry"

The word "strawberry" presents a particular challenge for LLMs. Its three "r"s are spread across the word, including a double "rr" in "berry," and the model never sees those letters directly: it sees only the tokens produced by its tokenizer.

Tokenization and the Word "Strawberry"

As mentioned above, tokenization is a fundamental process in natural language processing that involves breaking down text into smaller units called tokens. These tokens can be individual words, subwords, or characters, depending on the specific tokenization method used.

Think of tokenization as chopping up a sentence into smaller pieces. For example, the sentence "The quick brown fox jumps over the lazy dog" could be tokenized into the following words: "The," "quick," "brown," "fox," "jumps," "over," "the," "lazy," and "dog."

Common Tokenization Methods

Word-Level Tokenization

Breaks text down into individual words, typically by splitting on spaces and punctuation. For "strawberry," this results in a single token, "strawberry," so the individual letters are not represented at all.

Subword Tokenization

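Breaks text down into subword units: frequently occurring character sequences that sit between whole words and single characters, learned by algorithms such as Byte-Pair Encoding (BPE) or WordPiece. This is the approach used by most modern LLMs, and it handles out-of-vocabulary words and morphological variations better than word-level tokenization.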

Character-Level Tokenization

Breaks text down into individual characters. This method is useful for tasks that require fine-grained analysis of text, but it produces much longer sequences, which increases computational cost.

Tokenization and "Strawberry"

When tokenizing the word "strawberry," the choice of tokenization method can significantly impact how the LLM processes the word. For example:

Word-Level Tokenization

The LLM would represent "strawberry" as a single token. The letters inside it are not visible to the model at all, so any count of "r"s has to come from what the model has indirectly learned about how that token is spelled.

Subword Tokenization

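A subword tokenizer, the kind most modern LLMs use, might break "strawberry" down into smaller units, such as "straw," "berr," and "y" (the exact split depends on the tokenizer's vocabulary). This provides more granular information about the word's structure, but each piece is still a single token, so the letters inside each piece remain hidden from the model.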

Character-Level Tokenization

The LLM would represent "strawberry" as 10 individual characters: "s," "t," "r," "a," "w," "b," "e," "r," "r," and "y." This method would provide the most detailed information about the word's structure but could also be computationally expensive.
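The three granularities can be compared side by side. The subword function below is a simplified greedy longest-match scheme in the spirit of WordPiece (without its "##" continuation markers), and `HYPOTHETICAL_VOCAB` is an invented two-entry vocabulary chosen to reproduce the "straw," "berr," "y" split mentioned earlier; real tokenizers learn vocabularies with tens of thousands of entries, so their splits will differ.

```python
def word_tokenize(text):
    # Word-level: split on whitespace, so "strawberry" stays one token.
    return text.split()

def char_tokenize(text):
    # Character-level: every character becomes its own token.
    return list(text)

def subword_tokenize(word, vocab):
    """Greedy longest-match-first split (simplified, WordPiece-like).

    Any single character is accepted as a fallback so the loop always progresses.
    """
    pieces, start = [], 0
    while start < len(word):
        # Try the longest remaining candidate first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab or end - start == 1:
                pieces.append(piece)
                start = end
                break
    return pieces

# Invented vocabulary for illustration only.
HYPOTHETICAL_VOCAB = {"straw", "berr"}

print(word_tokenize("strawberry"))                         # ['strawberry']
print(subword_tokenize("strawberry", HYPOTHETICAL_VOCAB))  # ['straw', 'berr', 'y']
print(char_tokenize("strawberry"))  # ['s', 't', 'r', 'a', 'w', 'b', 'e', 'r', 'r', 'y']
```

Only the character-level output exposes the three "r"s as separate items; the other two hide them inside larger tokens.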

The Impact of Tokenization on Character Counting

The choice of tokenization method can directly affect the accuracy of character counting. If the tokenization method obscures the individual characters, it can be difficult for the LLM to count them accurately. For example, if "strawberry" is tokenized as "straw" and "berry," the model receives two token IDs rather than ten letters. To answer correctly, it must know that "straw" contains one "r" and "berry" contains two, and then add them up, even though neither fact is visible in its input.
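A small sketch of this point. The token IDs are made up for illustration; what matters is that the model receives integers, and the number of "r"s can only be recovered by knowing how each ID is spelled. The `MISREMEMBERED` table is a rough, hypothetical analogy for imperfect spelling knowledge, not a description of how an LLM actually stores information.

```python
# Made-up IDs for illustration; real values depend on the tokenizer's vocabulary.
ID_TO_PIECE = {101: "straw", 202: "berry"}

def count_letter(text, letter):
    # With direct access to characters, counting is a simple scan.
    return sum(1 for ch in text if ch == letter)

def count_letter_from_ids(token_ids, id_to_piece, letter):
    # From token IDs, counting works only if each ID's spelling is known.
    return sum(count_letter(id_to_piece[t], letter) for t in token_ids)

model_input = [101, 202]  # what the model "sees" for "strawberry" under this split
print(count_letter("strawberry", "r"))                        # 3
print(count_letter_from_ids(model_input, ID_TO_PIECE, "r"))   # 3

# Hypothetical: an imperfect notion of one token's spelling yields the classic wrong answer.
MISREMEMBERED = {101: "straw", 202: "bery"}
print(count_letter_from_ids(model_input, MISREMEMBERED, "r"))  # 2
```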

To improve character counting accuracy, LLMs may need tokenization methods that preserve more information about the structure of words, such as character-level or byte-level tokenization. Additionally, LLMs may need to incorporate other techniques, such as linguistic knowledge about how their tokens are spelled, to better understand the relationship between individual characters within words.

Lessons from the LLM Strawberry Test

The "strawberry" challenge offers valuable insights into the limitations and capabilities of LLMs. Here are some key lessons to consider:

1. The Importance of Prompt Engineering

LLMs sometimes struggle to interpret a query correctly, especially when the answer depends on subtle details. Providing clear context and instructions improves the accuracy of LLM responses, which is why prompt engineering matters. For example, when asking an LLM to count the characters in a specific word, it often helps to ask it to spell the word out letter by letter first, so that the letters are more likely to appear as separate tokens before it counts them.
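A minimal sketch of such a prompt. The template wording is hypothetical and no particular model or API is assumed; the second helper produces the letter-by-letter spelling you would expect to see, so the model's intermediate step can be checked.

```python
def letter_count_prompt(word, letter):
    """Hypothetical prompt template: spell the word first, then count."""
    return (
        f'Spell the word "{word}" one letter at a time, separated by spaces. '
        f'Then count how many times the letter "{letter}" appears in that list '
        "and give the final number on its own line."
    )

def expected_spelling(word):
    # Reference spelling to compare against the model's intermediate output.
    return " ".join(word)

print(letter_count_prompt("strawberry", "r"))
print(expected_spelling("strawberry"))  # s t r a w b e r r y
```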

2. The Limitations of Tokenization

Tokenization can be a limiting factor in character counting. Different tokenization methods can produce different results, and some methods may obscure the relationship between individual characters within a word. To address this limitation, experts are exploring new tokenization techniques that can preserve more information about the structure of words.

3. The Need for Character-Level Processing

While LLMs are often designed to process language at a higher level, focusing on semantic meaning rather than individual characters, there is a growing need for character-level processing in certain tasks. Character-level processing can be particularly useful for tasks that require a deep understanding of the structure of words, such as spell-checking, text normalization, and character recognition.

4. The Impact of Biases and Limitations

LLMs are trained on large datasets of text, which can introduce biases and limitations. These biases can manifest in various ways, such as generating offensive or harmful content, perpetuating stereotypes, or reinforcing existing inequalities. It is important to be aware of these limitations and to interpret LLM outputs with caution.

5. The Potential for Improvement

Despite their limitations, LLMs have the potential to become even more powerful tools for natural language processing. By addressing the weaknesses exposed by the "strawberry" test, researchers can develop LLMs that are more accurate, reliable, and versatile.

Future Directions - Overcoming the Limitations of LLMs

As LLMs continue to evolve, innovative techniques are being explored to address the limitations, particularly in areas such as character-level processing. Here are some promising future directions:

Character-Level Attention

- Enhanced Fine-Grained Analysis - Character-level attention allows models to focus on individual characters, enabling more precise text analysis.

- Improved Accuracy for Character-Based Tasks - This technique can improve the accuracy of tasks like spell-checking, text normalization, and character recognition.

- Integration with Word-Level Processing - Character-level attention can be combined with word-level processing to create a more comprehensive understanding of text.

Neural Machine Translation (NMT) Techniques

- Using NMT for Character-Level Tasks - Techniques from Neural Machine Translation, such as attention mechanisms and sequence-to-sequence modeling, can be adapted to improve character-level processing.

- Enhanced Contextual Understanding - NMT models can capture long-range dependencies and contextual information, which is crucial for accurate character-level analysis.

- Improved Handling of Irregularities - NMT can help LLMs handle irregular spellings, morphological variations, and other linguistic complexities.

Hybrid Approaches

- Multi-disciplinary - Hybrid approaches can combine the strengths of different techniques to address the limitations of LLMs.

Below are some examples of real-world applications of these techniques.

- Google Translate - Google Translate has incorporated character-level processing and hybrid NMT techniques to improve its accuracy for languages with complex writing systems, such as Chinese and Japanese.

- OpenAI's GPT-3 - While primarily focused on word-level processing, GPT-3 has shown some ability to handle character-level tasks, such as spell-checking and text normalization.

In The End

The LLM "strawberry test" serves as a reminder of both the limitations and the potential of language models. While LLMs have made significant progress in recent years, they still struggle with tasks that require a detailed understanding of individual characters and their relationships within words. However, by exploring techniques such as character-level attention, neural machine translation, and hybrid approaches supported by deep learning solutions, it may be possible to overcome these limitations.

As we continue to push the boundaries of natural language processing, it is essential to remain aware of the challenges and opportunities that lie ahead. By understanding the limitations of LLMs and exploring new avenues for improvement, we can create a brighter future.

FAQs

1. What is the “LLM strawberry test”?

The “LLM strawberry test” refers to the challenge where language models struggle to correctly count the number of “r”s in the word “strawberry.” Despite their high-level language abilities, these models can miss fine details due to tokenization and character-level processing limitations.

2. What does the “strawberry test LLM” show?

It highlights a fundamental limitation in many large language models. Although they excel in generating complex text and understanding context, they often falter on seemingly simple tasks—like counting characters—because of how they break down and process words.

3. What is meant by “LLM strawberry”?

“LLM strawberry” is a shorthand reference to the specific problem where LLMs, such as GPT-like models, miscount or misinterpret the components of the word “strawberry.” This exposes challenges in tokenization methods and character-level analysis within these models.

4. How does “strawberry LLM” relate to current AI challenges?

The phrase underscores how advanced language models sometimes fail at tasks requiring detailed character-level attention. It serves as an example of where the balance between semantic understanding and fine-grained text analysis can be improved.

5. How many “r”s are in “strawberry”?

The word “strawberry” contains three “r”s. The test is used to show that while this is obvious to humans, LLMs can occasionally miscount due to the way they tokenize words.

6. What is the “strawberry problem LLM”?

This term describes the phenomenon where LLMs have difficulty counting specific characters in words like “strawberry.” The problem arises because tokenization can split words into subunits (e.g., “straw” and “berry”), causing the model to overlook or misinterpret the individual letters.

7. How many Rs in strawberry?

There are three “r”s in the word “strawberry.” This simple fact can be a stumbling block for LLMs due to their reliance on tokenization, which may disrupt the continuity of the word.

8. How many “rs in strawberry” when processed by AI?

While the correct answer is three, some AI models might output an incorrect count because their internal processing (such as subword tokenization) can obscure the clear separation of letters.

9. In the context of AI, how many “r” in strawberry?

The correct count is three. However, the example is used to illustrate that AI systems might sometimes err on simple tasks due to the complexities in their language processing mechanisms.