Custom Software Development Vendor Shortlisting Framework: From 30 Vendors → 5 → 1

If you are staring at a messy longlist of custom software development vendors and need to reach one defensible choice without burning weeks of stakeholder time, you need a gated shortlisting process.

This framework is for US buyers (product, engineering, security, procurement) who want a repeatable path from about 30 custom software development vendors to about 5, then to 1, with an evidence trail you can defend later. If you want the broader end-to-end selection journey beyond shortlisting, see the buyer’s guide to choose a custom software development partner.

Why this decision matters (and why most shortlists fail)

A vendor shortlist fails when it is driven by noise: referrals, brand impressions, unstructured demos, and inconsistent scoring. That is how teams end up signing with a vendor that looks great in a meeting, then struggles to deliver (or surprises you with weak security practices or an overly junior staffing mix).

A good custom software development vendor shortlisting process is less about a sophisticated model and more about discipline:

- Clear problem statement and success metrics

- Explicit constraints and disqualifiers

- Stage gates where criteria change as you narrow

- Structured evaluations (standard questions, rubrics, documented notes)

- A simple decision log that preserves the “why”

Your goal is to eliminate wrong fit vendors quickly, then go deep only where depth is justified by risk and project criticality.

Action cue: Before you add another custom software development vendor to the spreadsheet, commit to a gated process and a decision log.

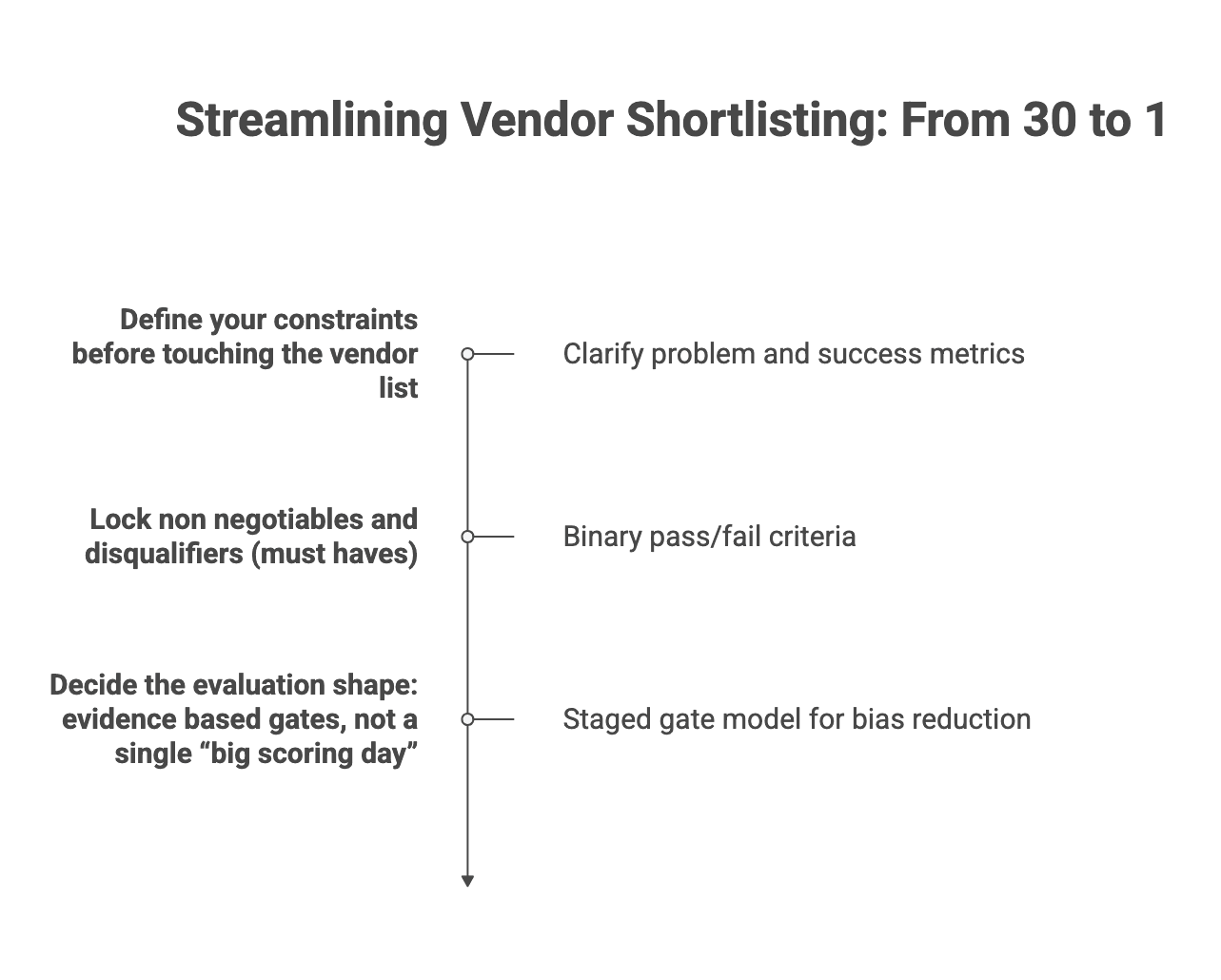

Define your constraints before touching the vendor list

Shortlisting gets dramatically easier when the team stops comparing custom software development vendors against vague hopes and starts comparing them against a shared constraint frame.

Clarify the problem and success metrics (business + technical)

You do not need a full Request for Proposal (RFP) to be clear. A short “problem and success memo” is usually enough to align stakeholders and create comparable vendor responses. At minimum, capture:

- Current state and pain points (what is broken, slow, risky, or expensive today)

- Two to five measurable business outcomes (even if ranges are approximate)

- High level delivery expectations (for example, MVP timing and release cadence)

- Non negotiable quality and reliability expectations (for example, uptime targets)

- Non negotiable security or regulatory obligations (if applicable)

Trade-off to manage: overly granular early metrics can create false precision when requirements are still evolving. Directional targets and must-not-fail criteria tend to work better early, with KPIs refined during discovery.

Action cue: Write the problem and success memo in one page and get it approved by the decision team before outreach.

Lock non negotiables and disqualifiers (must haves)

Most teams underuse disqualifiers. A “must have” should be binary and tied to feasibility or risk. Common disqualifier themes for custom software development vendors include:

- Security posture aligned to your minimum baseline (for example, evidence aligned with SOC 2 (System and Organization Controls 2) or relevant NIST guidance)

- Data handling and jurisdiction constraints (for regulated or sensitive data)

- Regulatory and privacy obligations the vendor must support (industry dependent)

- Minimum domain or technical experience (at least one relevant comparable project)

- Time zone overlap and communication model that is viable for your team

- Tech stack or ecosystem constraints that are truly non negotiable

To avoid over-filtering, separate must-haves from preferences. Preferences (tooling familiarity, specific agile flavor, cultural signals) can be scored later without blocking otherwise viable vendors.

Action cue: Convert your must haves into 5 to 10 pass or fail questions and agree on what counts as acceptable evidence for each.

Decide the evaluation shape: evidence based gates

A staged gate model reduces bias and wasted effort. You are not trying to fully evaluate 30 custom software development vendors. You are trying to collect just enough comparable evidence to narrow the field, then increase depth only for finalists.

A practical shape is:

- Early gates: fit and constraints (fast elimination)

- Mid gates: delivery reality and team signals (structured evidence)

- Final gates: risk, security, and commercials (deep verification)

Depth should be proportional. Higher risk work and sensitive systems justify deeper checks and more documentation. Low risk pilots can use lighter gates.

Action cue: Define your gates, owners, and required outputs before you schedule custom software development vendor meetings.

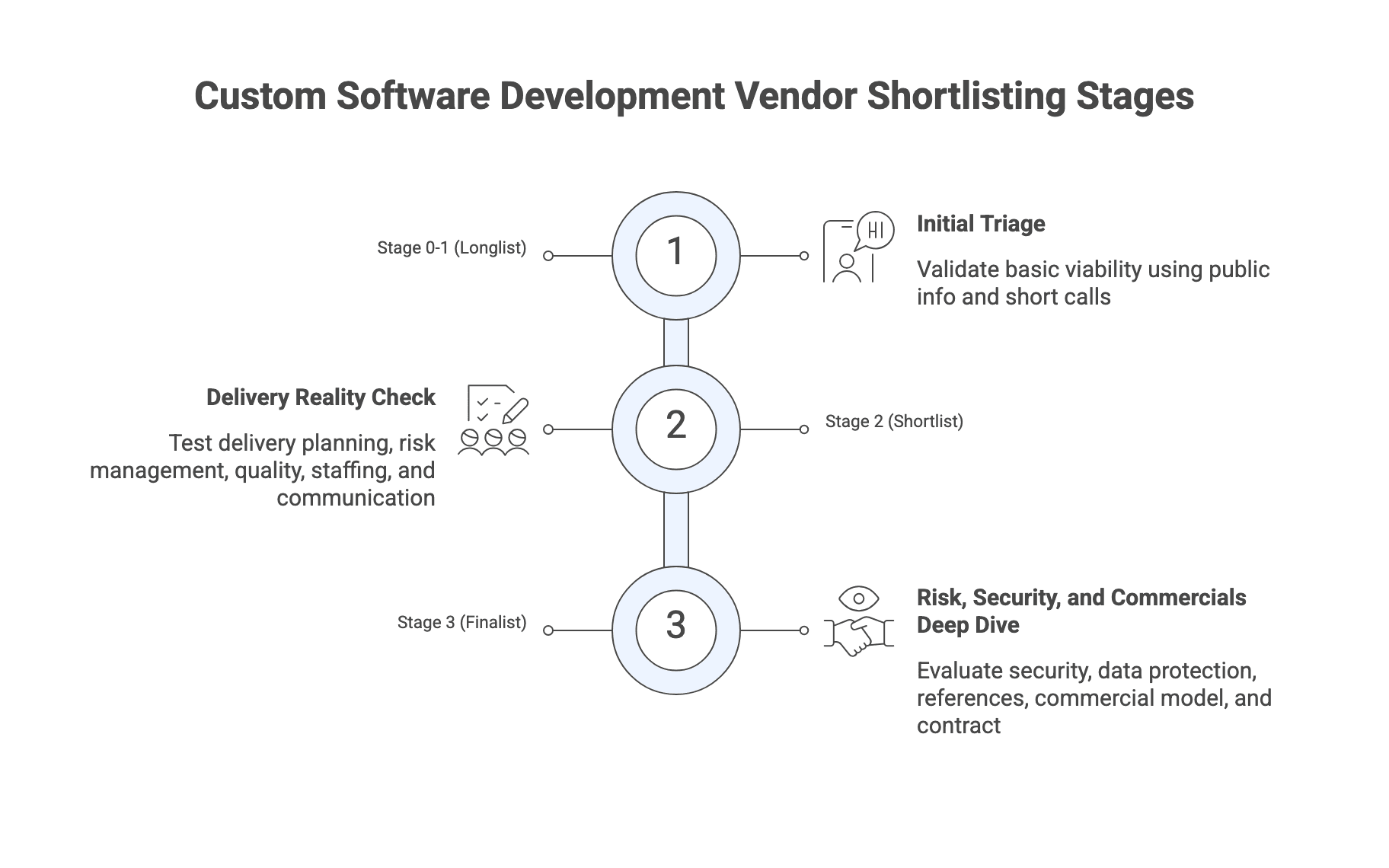

What to evaluate at each stage (criteria that change as you narrow)

One of the most common failure patterns is evaluating everything too early. The criteria should evolve as you narrow.

Stage 0-1 (longlist): fit signals you can validate quickly

At this stage, you are validating basic viability using public information and short intro calls. High signal fit indicators include:

- Case studies comparable in domain and complexity

- Evidence of familiarity with your platform or tech stack

- Client profile alignment (similar size, regulatory profile, governance needs)

- Clear description of delivery model (how teams work, onshore/offshore mix)

- Ability to restate your problem and constraints accurately in an intro call

- Any visible security posture signals appropriate to your risk profile

Fast red flags include:

- Inability to describe a recent relevant project

- Vague or inconsistent claims across website and conversations

- No mention of security practices in contexts where sensitive data is involved

- Refusal or inability to confirm basic constraints (time zone, language coverage)

Public info can be incomplete or curated. Use it to triage and to generate questions.

Action cue: Treat Stage 0-1 as triage: identify obvious mismatches and turn unknowns into targeted questions.

Stage 2 (shortlist): delivery and team reality checks

Now you are moving from marketing to delivery evidence. The goal is to test whether the vendor can run a real engagement the way you need it to be run.

Focus on:

- How they plan, execute, and govern delivery

- How they manage risk, scope, and change control in practice

- How they handle quality: testing strategy, defect triage, and remediation

- How they staff: seniority mix, continuity, and who actually does the work

- How they communicate: cadence, transparency, and escalation paths

This is where structured inputs, such as a lightweight Request for Information (RFI) and standardized calls, create comparable evidence.

Action cue: Standardize the questions and scoring so every vendor is evaluated on the same core themes.

Stage 3 (finalist): risk, security, and commercials deep dive

Finalists should be evaluated on the things that can ruin delivery after you sign:

- Security and third party risk posture (questionnaires plus evidence for critical controls)

- Data protection expectations and any required contract addenda

- Reference checks that match your scale and complexity

- Commercial model fit and staffing realism

- Contract artifacts that reflect actual working practices, especially acceptance criteria and change control

Action cue: Decide which security, legal, and reference checks are mandatory for selection, then communicate the evidence request early.

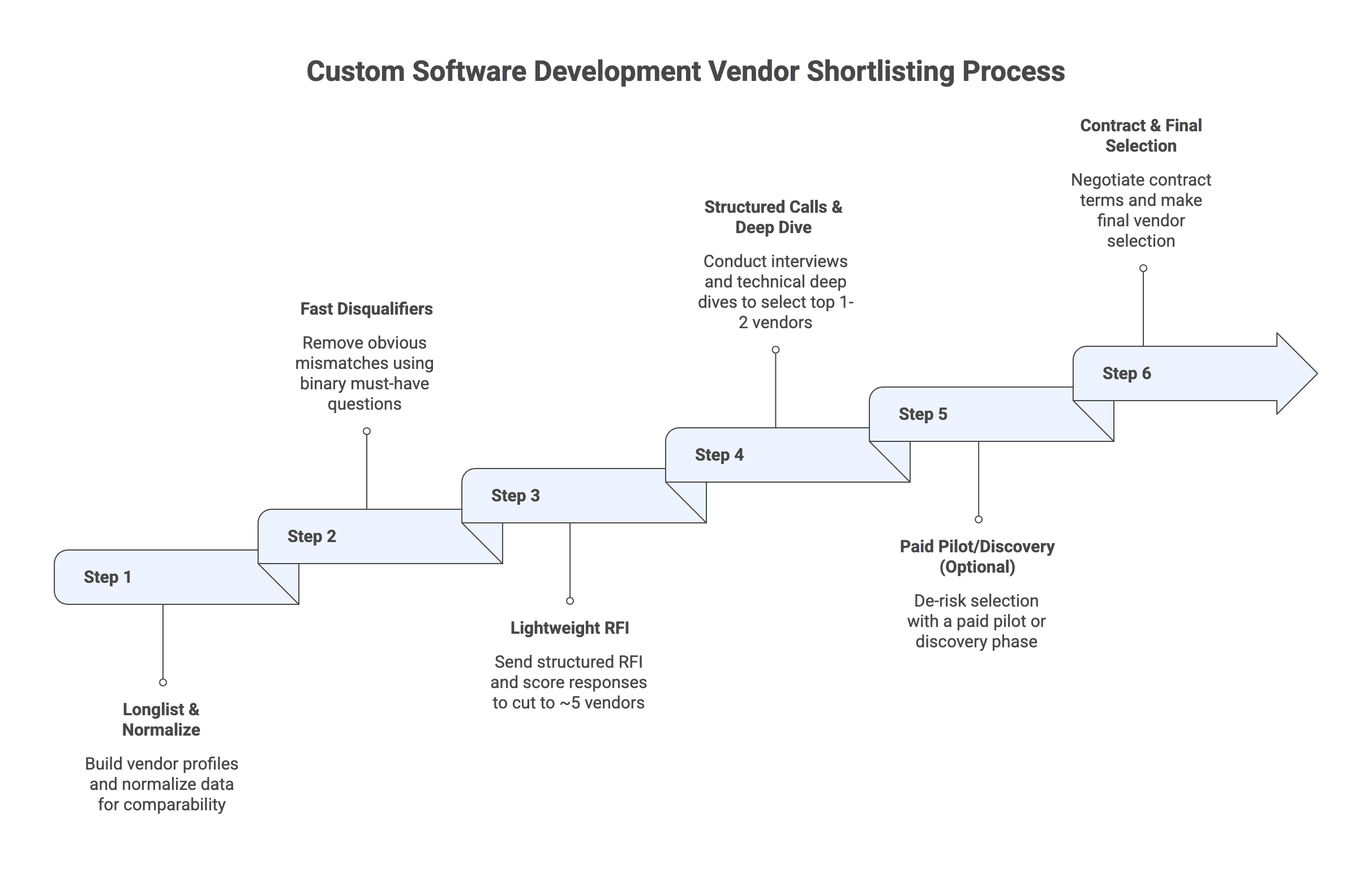

Step-by-step shortlisting process: 30 → 5 → 1 (with a practical timeline)

High quality research on exact timeboxes per gate is limited, but common practice patterns provide directional ranges. For mid size initiatives, teams often complete longlist work plus fast disqualifiers in about 1 to 3 weeks, RFIs and shortlist evaluation in about 2 to 4 weeks, and finalist due diligence plus contracting in several additional weeks depending on security and legal complexity.

Timeline drivers include:

- Clarity and stability of scope

- Stakeholder availability for evaluation sessions

- Depth of security and legal review required

- Number of vendors carried into later stages

- Internal procurement process and approvals

A practical way to stay on track is to set a target decision date and work backward, booking key sessions early. You can compress timelines by overlapping some work (for example, starting security review early), but that can create rework if a vendor is dropped later.
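Working backward from a target date can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed schedule: the gate names and the week counts in `GATES` are hypothetical placeholders that you would replace with durations agreed with procurement, legal, and security.

```python
from datetime import date, timedelta

# Hypothetical gates in execution order with assumed durations in weeks.
# The numbers are illustrative only, not recommendations.
GATES = [
    ("Longlist and disqualifiers", 2),
    ("RFI and shortlist evaluation", 3),
    ("Finalist due diligence and contracting", 4),
]


def plan_backward(decision_date, gates=GATES):
    """Return (gate, start, latest_decision_date) tuples in execution order."""
    schedule = []
    end = decision_date
    # Walk the gates from last to first, subtracting each duration.
    for name, weeks in reversed(gates):
        start = end - timedelta(weeks=weeks)
        schedule.append((name, start, end))
        end = start
    schedule.reverse()
    return schedule


for name, start, end in plan_backward(date(2025, 6, 27)):
    print(f"{name}: start {start}, decide by {end}")
```

Each gate's latest decision date is the start of the next gate, so a slip in one gate is immediately visible as pressure on the gates that follow.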

Here is the gated workflow you can run:

| Step | Goal | Typical duration (directional) | Output you should have in hand |
| --- | --- | --- | --- |
| Step 1 | Build a comparable longlist | Part of weeks 1 to 3 | One page profile per vendor |
| Step 2 | Apply disqualifiers to cut to about 10 to 15 | Part of weeks 1 to 3 | Pass/fail disqualifier table with evidence notes |
| Step 3 | Run a lightweight RFI to cut to about 5 | Weeks 2 to 6 | Scored RFI results with rationale |
| Step 4 | Structured calls and deep dive to pick top 1 to 2 | After RFI | Ranked finalists with panel notes |
| Step 5 | Optional paid pilot or paid discovery | Optional | Pilot outcome summary and exit decision |
| Step 6 | Commercials, contract fit, and final selection | Final weeks | Signed direction with documented rationale |

Action cue: Draft a one page timeline with owners and “latest decision dates” for each gate, then validate it with procurement, legal, and security.

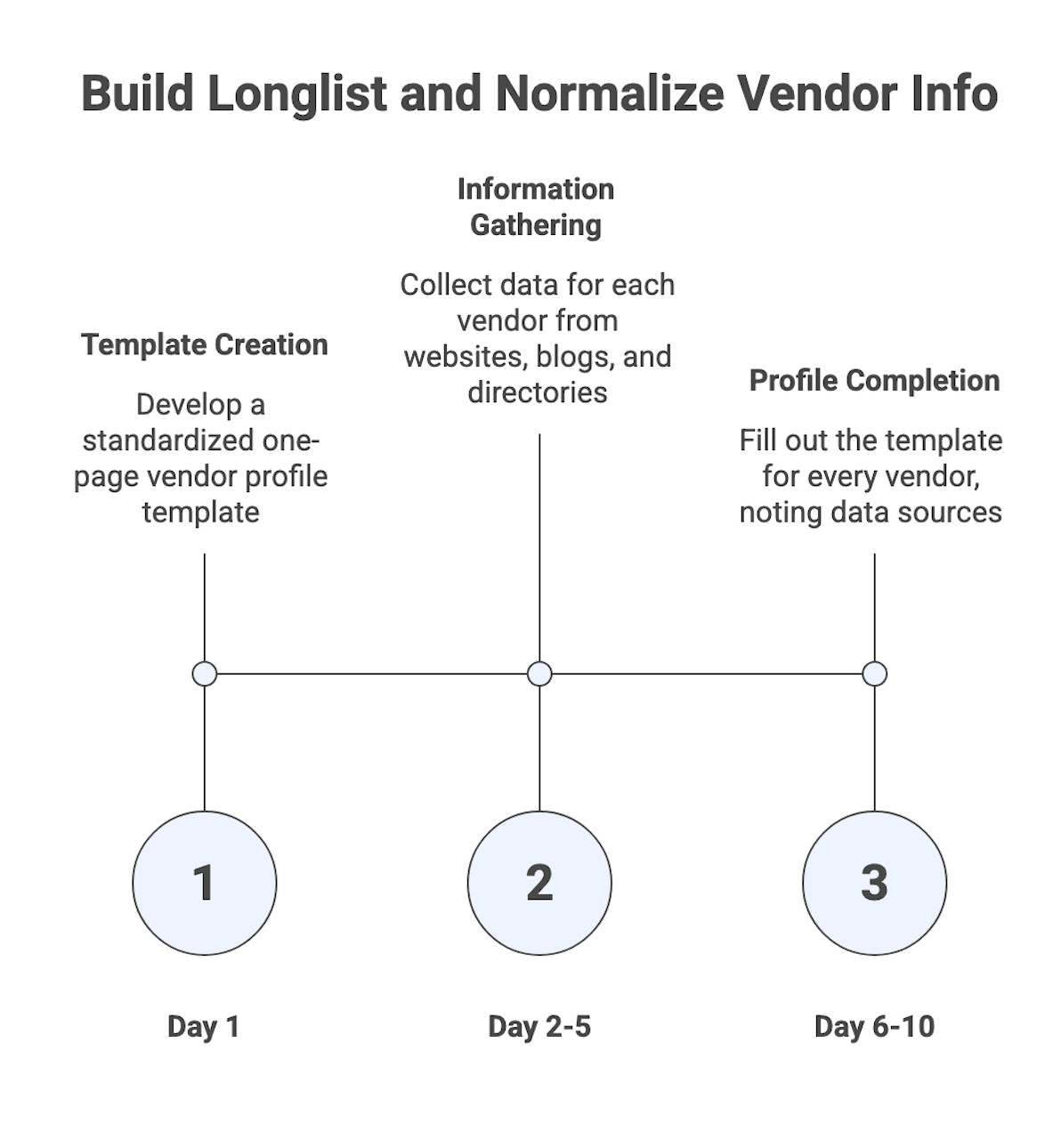

Step 1: Build the longlist and normalize vendor info (1 page per vendor)

The point of Step 1 is comparability. If every vendor description uses different fields, every discussion becomes subjective.

A practical one page vendor profile usually includes:

- Company size and locations

- Core services and domains

- Primary tech stacks

- Example clients or case studies (even if anonymized)

- Indicative project sizes they typically take on

- Basic security posture signals (certifications or secure development claims)

- Known constraints (time zones, data residency, language coverage)

Where to get the information:

- Vendor websites and marketing collateral

- Technical blogs or public engineering content

- Third party directories and profiles (use cautiously)

- A short intro call to fill gaps

Two discipline tips that pay off later:

- Use the same fields and units for all vendors (for example, approximate headcount and typical deal size)

- Log the source of each field so later reviewers know what is “confirmed” vs “claimed”
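One way to enforce both tips is to store every profile value together with its source and a confirmed flag. The sketch below is an assumption about how such a template might look in Python. The class names, the `REQUIRED_FIELDS` keys (derived from the profile list above), and the sample values are all hypothetical.

```python
from dataclasses import dataclass, field

# Field keys mirror the one page profile described above (names are illustrative).
REQUIRED_FIELDS = [
    "company_size_and_locations",
    "core_services_and_domains",
    "primary_tech_stacks",
    "example_clients_or_case_studies",
    "typical_project_sizes",
    "security_posture_signals",
    "known_constraints",
]


@dataclass
class ProfileField:
    value: str
    source: str              # where the value came from, e.g. "vendor website"
    confirmed: bool = False  # False means the value is only "claimed"


@dataclass
class VendorProfile:
    name: str
    fields: dict = field(default_factory=dict)

    def record(self, key, value, source, confirmed=False):
        """Store a value together with its source and confirmation status."""
        self.fields[key] = ProfileField(value, source, confirmed)

    def missing(self):
        """Required fields that have not been filled in yet."""
        return [k for k in REQUIRED_FIELDS if k not in self.fields]

    def unconfirmed(self):
        """Fields that are still claims rather than confirmed facts."""
        return sorted(k for k, f in self.fields.items() if not f.confirmed)


profile = VendorProfile("Vendor A")
profile.record("company_size_and_locations", "about 200 people", "vendor website")
profile.record("known_constraints", "4 hours of US Eastern overlap", "intro call", confirmed=True)
print(profile.missing())
print(profile.unconfirmed())
```

The `missing()` and `unconfirmed()` lists double as the question list for the intro call.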

Action cue: Create the one page template first, then fill it for every vendor before any deep evaluation.

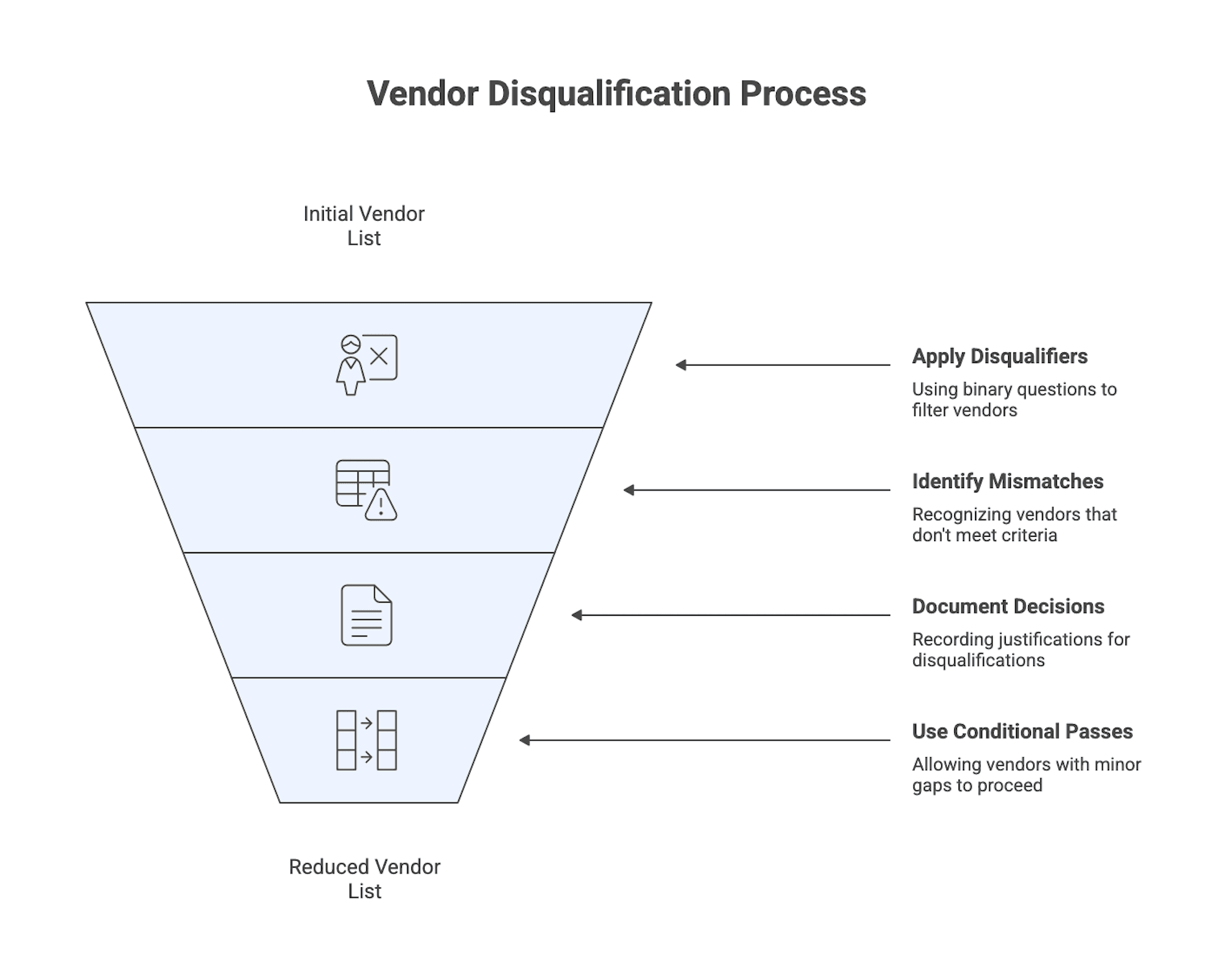

Step 2: Apply fast disqualifiers to cut to about 10 to 15

Now you remove obvious mismatches early, using the binary must have questions you defined up front.

Common early disqualifier buckets include:

- Unwilling or unable to meet security or compliance constraints

- No relevant domain or technical experience

- Scale mismatch (too small or too large relative to your governance needs)

- Non viable time zone overlap or communication model

To keep this defensible and consistent:

- Maintain a simple table: vendor by disqualifier question, marked pass or fail

- Add a one line justification for each fail (what evidence drove the decision)

- Use “conditional pass” sparingly when a vendor lacks one piece of evidence but appears strong, and schedule that missing evidence explicitly for the next gate
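The table described above can be kept in a spreadsheet, but a small script makes the rules explicit. The following Python sketch is illustrative only: the `Check` structure, the function names, and the sample vendors and questions are assumptions, not part of any standard tool.

```python
from dataclasses import dataclass
from enum import Enum


class Result(Enum):
    PASS = "pass"
    FAIL = "fail"
    CONDITIONAL = "conditional pass"


@dataclass
class Check:
    vendor: str
    question: str
    result: Result
    note: str = ""  # justification for a fail, or the evidence still owed


def missing_notes(checks):
    """Fails and conditional passes must carry a one line note."""
    return [
        f"{c.vendor} / {c.question}: {c.result.value} needs a note"
        for c in checks
        if c.result is not Result.PASS and not c.note.strip()
    ]


def advancing(checks):
    """Vendors with no fail, mapped to the evidence owed at the next gate."""
    owed, failed = {}, set()
    for c in checks:
        owed.setdefault(c.vendor, [])
        if c.result is Result.FAIL:
            failed.add(c.vendor)
        elif c.result is Result.CONDITIONAL:
            owed[c.vendor].append(c.note)
    return {v: notes for v, notes in owed.items() if v not in failed}


checks = [
    Check("Vendor A", "security baseline", Result.PASS),
    Check("Vendor A", "time zone overlap", Result.CONDITIONAL, "confirm overlap hours in RFI"),
    Check("Vendor B", "security baseline", Result.FAIL, "no control evidence provided"),
]
print(advancing(checks))
print(missing_notes(checks))
```

Because a single fail removes a vendor, the disqualifier questions stay binary, and conditional passes surface as an explicit list of evidence to collect at the next gate.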

Action cue: Hold a short calibration review to confirm disqualifier decisions are consistent and evidence based.

Step 3: Send a lightweight RFI and score responses to cut to about 5

A lightweight RFI collects structured evidence without turning into an RFP. To get high signal responses, provide a short context page first (problem, constraints, success memo), then ask a small number of high value questions aligned to scoring themes.

RFI themes that tend to matter most:

- Their understanding of your problem and goals

- Relevant experience and comparable case studies

- High level solution approach and trade offs

- Delivery model and governance (how the work actually runs)

- Security and compliance posture (and evidence they can share)

- Indicative commercial models and constraints

What strong responses typically look like:

- They restate your problem accurately in their own words

- They provide specific examples of similar work with outcomes or metrics where possible

- They outline a plausible high level architecture and delivery approach

- They describe governance and risk management processes in practical terms

- They provide clear, verifiable security information

Red flag patterns:

- Boilerplate answers not tailored to your context

- Reluctance to discuss risks or trade offs

- Generic security statements with no reference to recognizable controls or evidence

A lightweight scoring method that stays reliable:

- Define 4 to 6 themes (for example, problem understanding, relevant experience, delivery approach, security, commercial fit, communication fit)

- Use a simple 1 to 5 scale with short descriptors

- Require short notes and evidence references with every score

- Run a calibration discussion before finalizing the shortlist so evaluators are scoring similarly
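As a rough sketch of that scoring method, the Python below validates each score against the rubric rules and flags themes where evaluators disagree enough to warrant calibration. The theme names come from the example list above. The entry format, the unweighted averaging, and the `max_spread` threshold are assumptions made for illustration.

```python
from statistics import mean

# Example themes from the list above; adjust to your own rubric.
THEMES = {
    "problem understanding",
    "relevant experience",
    "delivery approach",
    "security",
    "commercial fit",
    "communication fit",
}


def validate(entry):
    """Enforce the rubric rules: known theme, 1 to 5 score, non-empty note."""
    if entry["theme"] not in THEMES:
        raise ValueError(f"unknown theme: {entry['theme']}")
    if entry["score"] not in range(1, 6):
        raise ValueError("score must be a whole number from 1 to 5")
    if not entry["note"].strip():
        raise ValueError("every score needs a short note with an evidence reference")


def summarize(entries, max_spread=1):
    """Return ({theme: mean score}, [themes to raise in calibration]).

    max_spread is a hypothetical threshold: a theme is flagged when the
    highest and lowest evaluator scores differ by more than this value.
    """
    grouped = {}
    for entry in entries:
        validate(entry)
        grouped.setdefault(entry["theme"], []).append(entry["score"])
    means = {theme: mean(scores) for theme, scores in grouped.items()}
    flagged = sorted(t for t, s in grouped.items() if max(s) - min(s) > max_spread)
    return means, flagged
```

Each entry is a dictionary with `theme`, `score`, and `note` keys for one vendor. Run `summarize` per vendor, and use the flagged themes as the agenda for the calibration discussion.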

Action cue: Limit the RFI to differentiation questions, score with a rubric, and keep short notes tied to evidence.

Step 4: Run structured calls and a technical deep dive to pick the top 1 to 2

Structured interviews reduce bias. Applied to vendor calls and technical deep dives, that means:

- A fixed agenda

- A core question set asked of all vendors

- Clear scoring criteria

- Multiple evaluators

- The same panel attends all finalist sessions for comparability

A high signal agenda typically includes:

- Vendor recap of your goals and constraints (in their words)

- Delivery approach walkthrough (discovery, planning, execution, governance)

- Technical architecture discussion (integration, scalability, security, trade offs)

- Risk management and incident handling (how they respond when things go wrong)

- Introductions to key team members and role clarity (who does what)

Prompts that test real thinking:

- “Tell us about a time when a milestone was missed. What happened next?”

- “Walk us through a difficult integration you have done before. What surprised you?”

- “What are the biggest risks you see in our constraint set, and how would you mitigate them?”

- “Show how you handle change requests and priority shifts in practice.”

Common pitfalls to counter:

- Being swayed by a polished demo that does not reflect implementation complexity

- Halo effects from charismatic presenters

- Unstructured conversations that drift into small talk and vibes

Countermeasures:

- Use rubrics with behaviors or answers that correspond to higher scores

- Assign panel roles (architecture, delivery, risk and communication)

- Have evaluators record scores independently before group discussion to reduce groupthink
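Independent scoring is easy to agree to and easy to skip in practice. One way to make it stick is a score sheet that only reveals results after every panelist has submitted. The class below is a hypothetical Python sketch of that idea, not a reference to any existing tool. The role names in the example reuse the panel roles above.

```python
class BlindScoreSheet:
    """Collects one locked score per evaluator and reveals them only when complete."""

    def __init__(self, evaluators):
        self._expected = set(evaluators)
        self._scores = {}

    def submit(self, evaluator, score, note):
        if evaluator not in self._expected:
            raise ValueError(f"{evaluator} is not on this panel")
        if evaluator in self._scores:
            raise ValueError("score already submitted and locked")
        self._scores[evaluator] = (score, note)

    def reveal(self):
        """Return all scores, or raise if anyone has not scored yet."""
        missing = self._expected - self._scores.keys()
        if missing:
            raise RuntimeError(f"waiting on: {sorted(missing)}")
        return dict(self._scores)


sheet = BlindScoreSheet(["architecture", "delivery"])
sheet.submit("architecture", 4, "clear rollback walkthrough")
sheet.submit("delivery", 3, "vague on change control")
print(sheet.reveal())
```

Locking a score on submission means the group discussion can change the final ranking, but not the record of what each evaluator thought beforehand.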

Action cue: Share the agenda in advance, run the same structured panel for every finalist, and score before you debate.

Step 5: De-risk selection with a paid pilot or paid discovery (optional gate)

This gate is optional, but it can be valuable when you need evidence beyond interviews, especially if you expect meaningful uncertainty in scope, integration complexity, or delivery approach.

Two practical principles matter here:

- Decide up front whether you will use a paid pilot or discovery, so it is part of planning and not bolted on late.

- Define what the pilot must prove and what “exit” looks like if it does not meet expectations, before any work begins.

This page intentionally keeps pilot design high level to avoid overlap.

Action cue: If you will pilot, lock the intent and exit rules now.

Step 6: Commercials, contract fit, and final selection

The goal of this step is to prevent last minute surprises and delivery risk created by misaligned contract terms.

Common contract artifacts at this stage include:

- Master Services Agreement (MSA) (overall legal and commercial terms)

- Statement of Work (SOW) (scope, deliverables, timeline, acceptance criteria)

- Service Level Agreement (SLA) (performance metrics and remedies, if applicable)

- Data Processing Agreement (DPA) (data protection terms when personal or sensitive data is involved)

- Non-Disclosure Agreement (NDA), if not already executed

Negotiation pitfalls that often create delivery risk later:

- Leaving scope descriptions vague “for flexibility” and creating conflict later

- Failing to define acceptance criteria, causing disputes over when work is done

- Agreeing to aggressive discounts that push the vendor into under resourcing

- Not clarifying change control and how priorities will be managed

- Poorly defined responsibilities for security, data protection, and support

One verification rule that helps: confirm pricing and staffing align with what you learned during technical and delivery evaluation.

Action cue: Treat contract artifacts as delivery tools, and ensure they match real working practices.

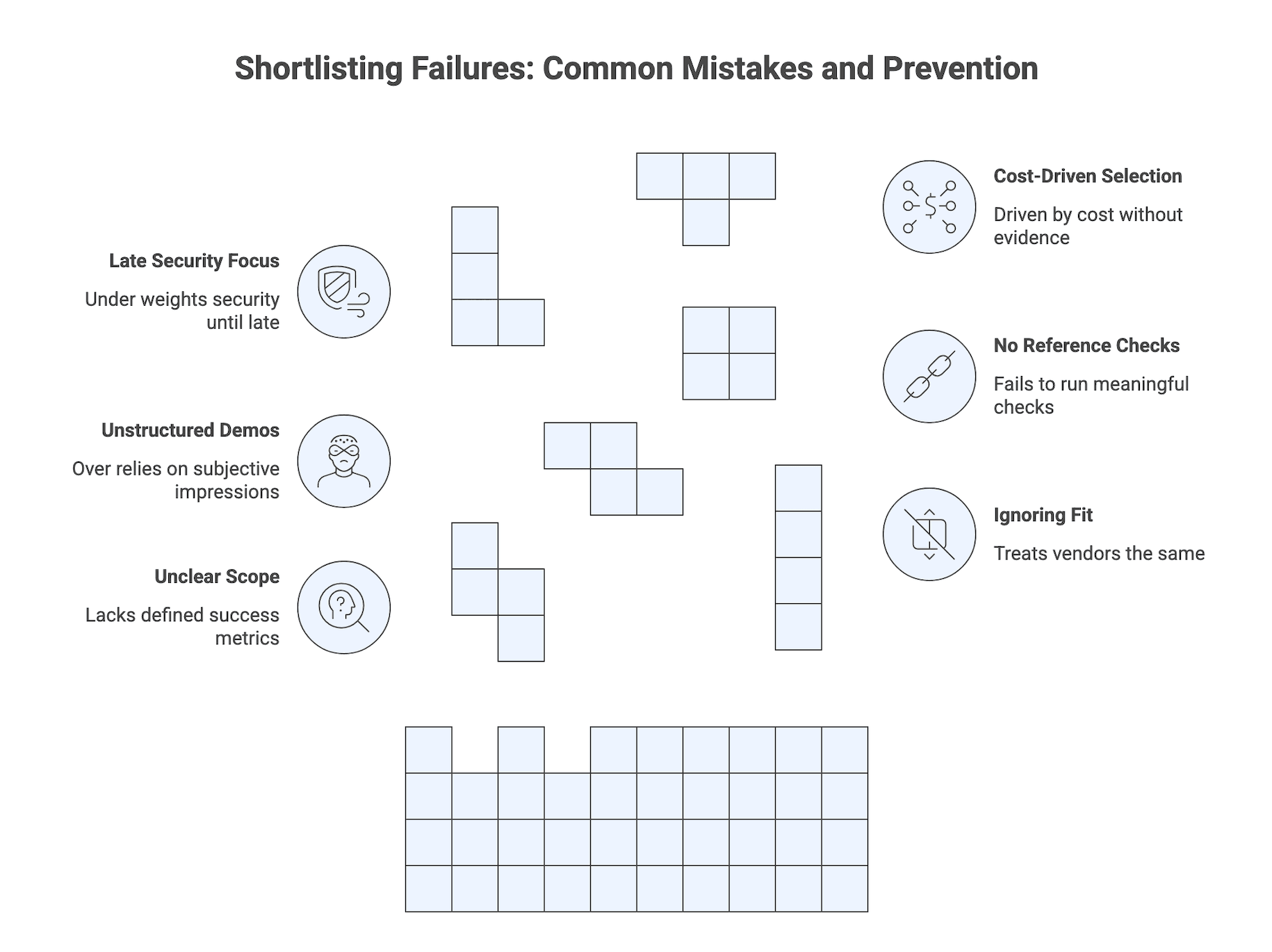

Common failure modes (and how to prevent them)

The predictable shortlisting mistakes are also the easiest to prevent:

- Unclear scope and success metrics

- Treating all vendors the same without considering fit

- Over relying on unstructured demos and subjective impressions

- Failing to run meaningful reference checks

- Under weighting security until late in the process

- Selection driven primarily by cost or relationships without evidence

Governance controls that reduce these errors:

- Assign explicit decision owners and roles (product, engineering, security, legal, procurement)

- Document criteria and thresholds per gate

- Use structured evaluations (standard questions, rubrics, scoring sheets)

- Maintain an audit trail of decisions and evidence

- Run a short retrospective after selection to improve the framework next time

Trade off to manage: over standardization can feel bureaucratic. The goal is not to remove judgment, but to force judgment to be explainable and evidence based.

Action cue: Before you start, write “ways this could fail” and assign one control to each risk.

What “good” looks like at each gate (required outputs and owners)

If you want a process that is easy to run and easy to defend, define minimal required outputs per gate.

A practical “good” standard for a 30 → 5 → 1 framework looks like this:

- After longlisting: normalized vendor list with one page profiles and logged sources

- After disqualifiers: pass/fail table with brief justifications and any conditional passes scheduled

- After RFI: scored shortlist of about 5 with rationale tied to evidence

- After structured calls: ranked list of 1 to 2 finalists with panel notes and scores

- After finalist due diligence: chosen vendor with completed security, legal, and reference checks, plus a documented selection rationale

Ownership typically spans:

- Product or business leads: business goals and success metrics

- Engineering or technical leads: technical fit and delivery capability

- Security leads: security due diligence and control evidence

- Legal and procurement: contract alignment and commercial risk

Acceptance criteria should be pass or fail and evidence based. For example:

- To advance from longlist, a vendor must meet all must have constraints and show at least one relevant case study.

- To become a finalist, a vendor must clear the scoring threshold on RFI and calls.

- To be selected, a vendor must satisfy security and contractual requirements defined by internal policy.

Action cue: Write the required outputs and owners into your selection plan so every gate has a clear “done.”

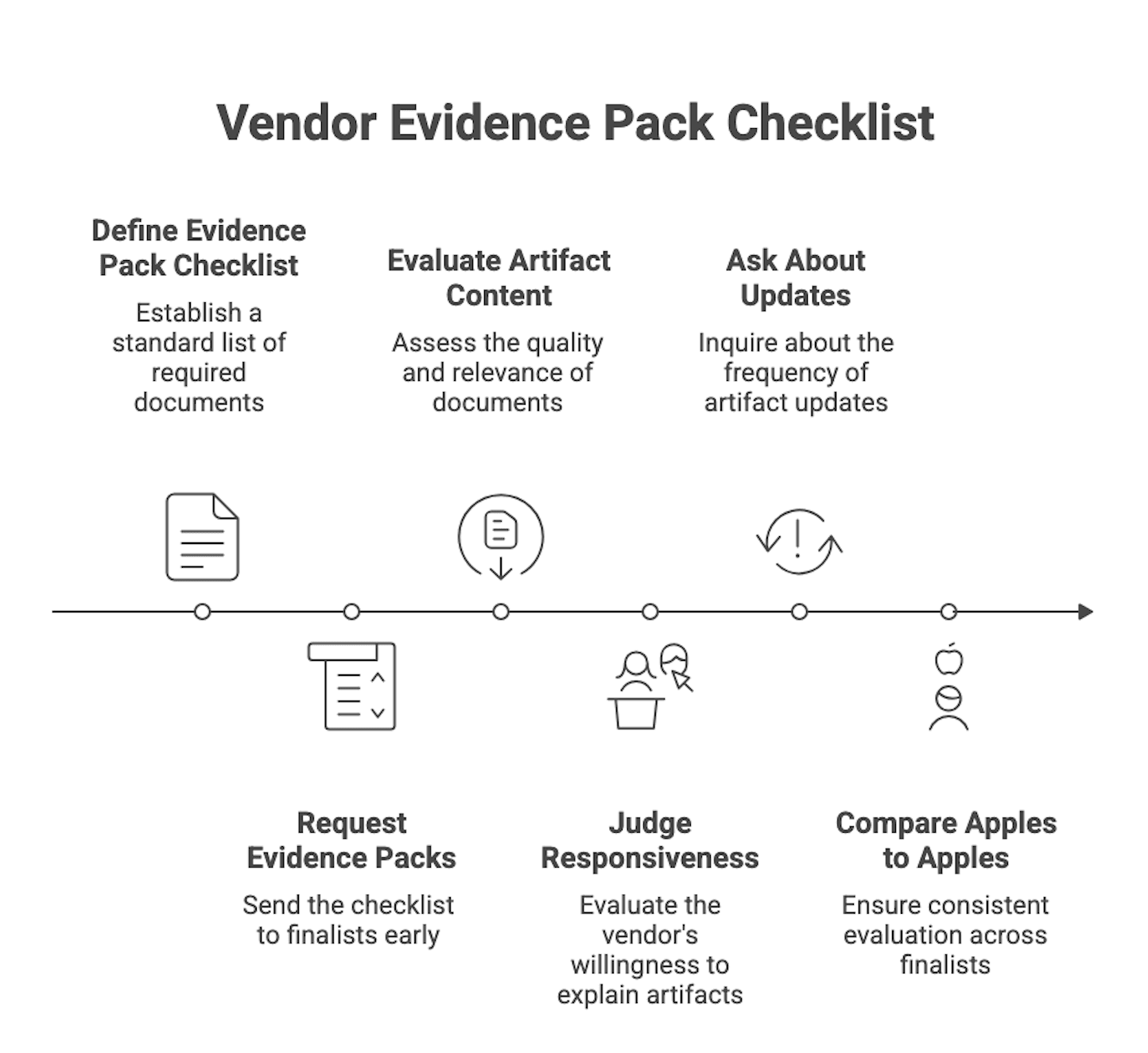

Practical verification steps and artifacts to request (buyer friendly checklist)

Shortlisting improves when you stop accepting slideware as proof and start asking for artifacts and walkthroughs.

If you want a structured scoring method, pair this checklist with your evaluation rubric and scorecard. For a deeper scorecard walkthrough and download, see the vendor evaluation scorecard.

Evidence pack checklist (what to request from every finalist)

A standard evidence pack keeps inputs consistent and reduces surprises. Common, diagnostic artifacts include:

- Recent or anonymized SOWs and project plans showing how scope and milestones are defined

- Security overview describing key controls, governance, and incident response

- QA and testing strategy documentation

- Sample status reports that show communication cadence and transparency

- Bios for key team members and role definitions

- Reference contacts (aim for comparable complexity and scale)

For security sensitive contexts, independent attestations can be useful where applicable (for example, SOC 2 reports or ISO 27001 certificates).

Confidentiality is real. When vendors cannot share client specific documents, acceptable substitutes include:

- Generic templates

- Anonymized versions

- Redacted screenshots of process tools

- Detailed verbal walkthroughs accompanied by sample artifacts

Two evaluation tips:

- Judge the responsiveness and willingness to explain artifacts.

- Ask how often each artifact is updated and reviewed to distinguish living processes from shelfware.

Action cue: Send the evidence pack request early so finalists can prepare and you can compare apples to apples.

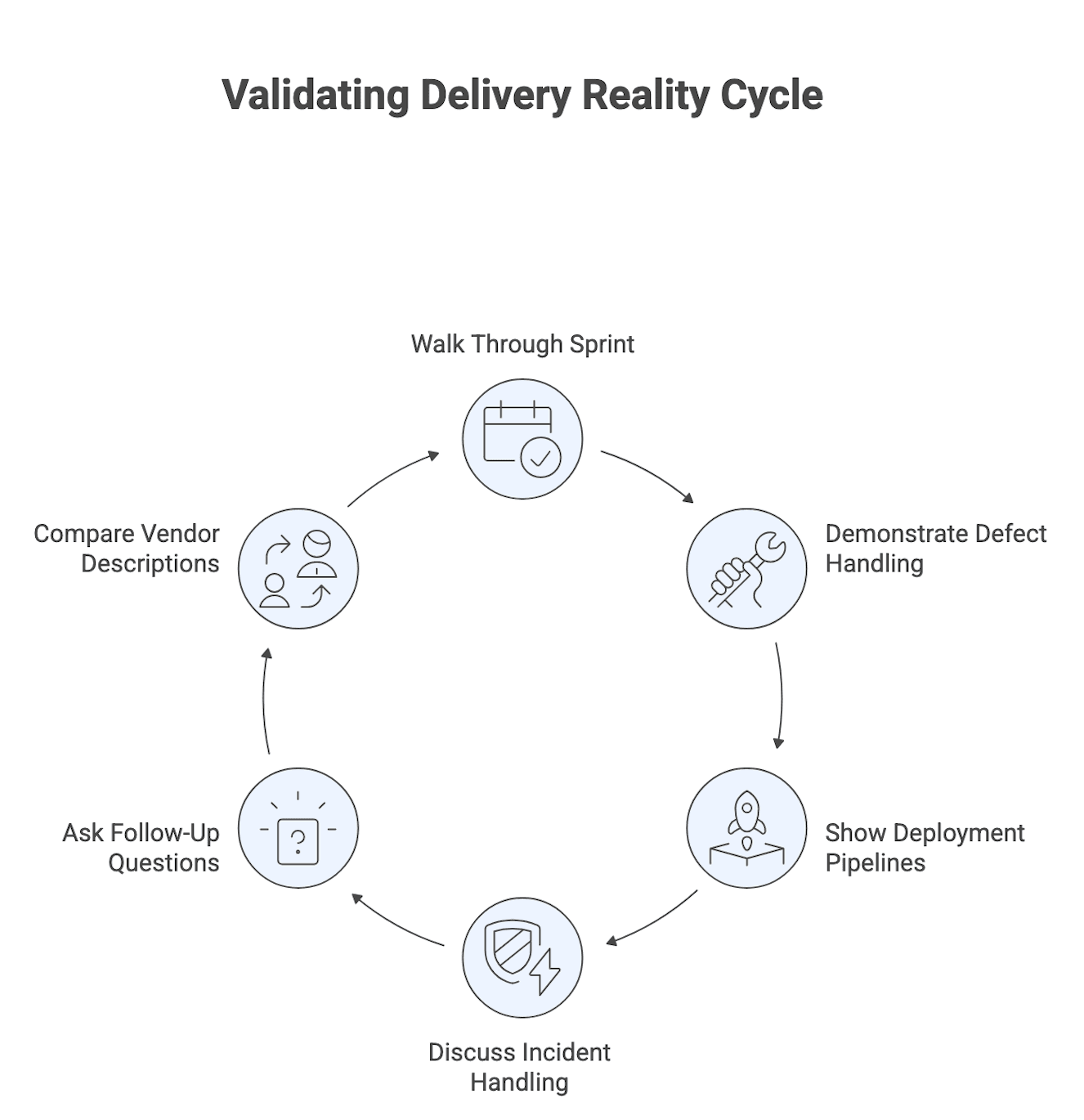

How to validate delivery reality

Verification methods should test whether documented processes are executed in practice. High signal “show me” requests include:

- Walk through a recent sprint or iteration (backlog, planning, review)

- Demonstrate how defects are logged, prioritized, and resolved

- Show deployment pipelines, release controls, and rollback procedures

- Discuss a real incident or project risk and how it was handled

Follow up questions that reveal depth:

- Ask for concrete examples and dates

- Ask what went wrong and how the process changed afterward

- Ask how continuous improvement is measured and enforced

Session design tips:

- Bring at least one technical lead and one delivery or product representative

- Listen for specific, verifiable details (names, dates, metrics) rather than generalities

- Compare how different vendors describe the same process to spot vagueness or inconsistencies

Action cue: Replace “tell us your process” with “show us your process” and score what you see.

Reference checks that actually reduce risk

Reference checks are high leverage when they are structured. A practical structure is:

- Verify the reference context (industry, size, scope, complexity)

- Explore implementation and integration experiences (including data migration if relevant)

- Ask about estimate accuracy versus actual effort and timeline

- Ask how change requests and issues were handled

- Assess training, support, and long term relationship quality

- Ask whether they would choose the vendor again for a similar project

Signals that tend to matter most:

- Did they meet timelines and budget within reasonable variance?

- Was delivered software stable and maintainable?

- Were communication and transparency consistent?

- How did they handle mistakes or incidents?

- Did the team remain stable, or did key people rotate out?

Triangulation makes references more reliable:

- Conduct at least two to three reference calls per finalist

- Prefer references that match your profile and complexity

- Watch for over curated references, evasive answers, or refusal to provide comparable contacts

Action cue: Use a standard reference call script and capture notes in a template so you can compare vendors reliably.

Conclusion: run the gates, collect artifacts, then decide

A defensible custom software development vendor shortlisting process narrows from a wide pool to one partner through staged gates, each with specific criteria, owners, and evidence requirements. Early gates focus on constraints and fit, mid gates on delivery capability and team reality, and final gates on risk, security, and commercial terms.

The decision lens is simple: vendors advance based on evidence tied to your constraints, success metrics, and risk thresholds, not based on charisma, brand, or slide decks.

Your immediate next action should be to write the constraints brief and must have disqualifier checklist first. Then build a normalized longlist and run the 30 → 5 → 1 gates with structured questions, artifacts, and a decision log.