How to Build AI Agents for Patient Scheduling, Triage, and Follow-Ups

Healthcare is shaped by how people move through their care journey. A patient seeks an appointment, needs guidance on symptom urgency, and later requires support in recovery. These steps may look simple, but they often decide if patients feel cared for, if conditions are detected early, and if treatments succeed.

Delays in scheduling can discourage people from seeking help. Missed recognition of urgent symptoms can cause serious harm. Poor follow-up can lead to relapse or overlooked recovery signals. For clinicians, these gaps create stress, waste resources, and make it harder to deliver high-quality care.

Artificial Intelligence (AI) agents can help close these gaps. Across healthcare, these systems are changing how patients book appointments, receive triage guidance, and stay engaged during recovery.

By automating scheduling, guiding triage, and ensuring consistent follow-up, they improve patient experience and give clinicians more time for care. These agents are not abstract ideas. They can be built today using existing data standards, regulatory frameworks, and proven machine learning methods. This blog explains how to build them step by step.

Understanding the Role of AI Agents in Healthcare

Healthcare automation agents are systems that listen, process, and act on patient needs throughout the care journey.

Within the growing field of healthcare AI, these tools serve as digital collaborators that combine automation with empathy to make care journeys smoother.

An agent can take input through conversation or structured data, apply reasoning, and perform tasks such as booking an appointment, recommending an escalation, or sending a reminder.

These agents are most useful in tasks that are repetitive, high-volume, and rules-based, but also where personalization matters. They differ from chatbots that only provide scripted answers. Agents are linked with Electronic Health Records (EHRs), practice management systems, and secure communication channels. They carry accountability for accuracy and must meet privacy and compliance standards.

Patient scheduling, triage, and follow-ups are three areas where AI agents bring immediate and measurable value. Each represents a point of care where errors, delays, or gaps are common, and each can be improved with intelligent support.

These healthcare applications build on broader insights we’ve shared about the role of AI agents in enterprise automation and their impact on business value.

The Three Types of AI Agents

To build well, you first need to define the scope. Healthcare automation agents are not a monolith. There are three distinct agent types, each with unique risks and requirements.

AI Patient Scheduling Agents

An AI patient scheduling agent can help solve some of the biggest frustrations for patients: long waits, unclear availability, and missed reminders. It also relieves staff, who spend hours managing calendars, adjusting slots, and handling cancellations.

An AI patient scheduling agent can:

- Offer patients real-time booking through text, voice, or online portals.

- Automatically reschedule if a slot opens earlier.

- Manage waitlists and match patients to the right clinician and resources.

- Predict no-show risk using past behavior, demographics, and appointment type.

- Send personalized reminders and confirmations.

- Handle insurance and eligibility checks through integration with practice management systems.

These agents must connect with both EHRs and scheduling software through standards like Fast Healthcare Interoperability Resources (FHIR). They should also keep an immutable audit trail of every change to maintain accountability.

The risks in scheduling are operational rather than clinical. A double-booked slot or a missed cancellation can still cause disruption, but these risks can be managed with strong rules, monitoring, and overrides for staff.
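As a minimal sketch of the "strong rules" idea, the snippet below refuses to double-book a slot and hands a cancelled slot to the first waitlisted patient. The `Scheduler` class, its fields, and the patient identifiers are hypothetical, and the in-memory dictionary stands in for a real scheduling system reached through its API.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Dict, List, Optional


@dataclass
class Scheduler:
    """In-memory stand-in for a real scheduling backend (hypothetical)."""
    booked: Dict[datetime, str] = field(default_factory=dict)  # slot start -> patient id
    waitlist: List[str] = field(default_factory=list)          # first come, first served

    def book(self, slot: datetime, patient_id: str) -> bool:
        # Rule: a slot holds at most one patient, so a second booking is refused.
        if slot in self.booked:
            return False
        self.booked[slot] = patient_id
        return True

    def cancel(self, slot: datetime) -> Optional[str]:
        """Free a slot and assign it to the first waitlisted patient, if any."""
        self.booked.pop(slot, None)
        if self.waitlist:
            next_patient = self.waitlist.pop(0)
            self.booked[slot] = next_patient
            return next_patient
        return None
```

In a real deployment the waitlisted patient would be asked to confirm before the slot is assigned, and staff would be able to override either rule.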

Patient Triage Agents

Triage is more sensitive because it touches clinical decisions. A triage agent collects symptoms and guides patients to the right level of care. It may recommend emergency care, a same-day appointment, or self-care advice.

The design of triage agents must balance intelligence with safety. They should:

- Use natural language models for symptom intake to make interactions easy.

- Apply supervised classifiers trained on labeled triage data for decision support.

- Combine structured clinical protocols, such as Manchester Triage or nurse triage pathways, with AI logic.

- Always escalate when red flag symptoms appear, such as severe chest pain, sudden shortness of breath, or uncontrolled bleeding.

- Provide explanations for recommendations to build patient trust.

- Keep clinicians in the loop by sending summaries and alerts for review.

Because triage affects health outcomes, regulators such as the United States Food and Drug Administration (FDA) may treat triage software as a medical device, depending on its intended use. These agents require rigorous validation, clearly stated limitations, and human oversight.
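One way to picture the balance between intelligence and safety is a decision function where red-flag rules always run before any model score is considered. This is an illustrative sketch only: the phrase list, the `urgent_threshold` value, and the `TriageResult` fields are assumptions, and `urgent_probability` stands in for the output of a validated classifier.

```python
from dataclasses import dataclass

# Illustrative phrases only; a real list must come from a clinical protocol.
RED_FLAGS = ("chest pain", "shortness of breath", "uncontrolled bleeding")


@dataclass
class TriageResult:
    level: str          # "emergency", "same_day", or "self_care"
    reason: str         # plain-language explanation for patient and clinician
    needs_review: bool  # whether a clinician should review the summary


def triage(symptom_text: str, urgent_probability: float,
           urgent_threshold: float = 0.3) -> TriageResult:
    text = symptom_text.lower()
    # Rules first: a red flag escalates regardless of the classifier score.
    # Plain substring matching also fires on "no chest pain", which errs on
    # the side of escalation.
    for flag in RED_FLAGS:
        if flag in text:
            return TriageResult("emergency", f"Red-flag symptom reported: {flag}", True)
    # A deliberately low threshold trades extra same-day visits for fewer misses.
    if urgent_probability >= urgent_threshold:
        reason = f"Urgency score {urgent_probability:.2f} is at or above {urgent_threshold:.2f}"
        return TriageResult("same_day", reason, True)
    return TriageResult("self_care", "No red flags and a low urgency score", False)
```

Every result carries a reason string, which supports both patient-facing explanations and the clinician summaries described above.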

Patient Follow-up Agents

The patient journey does not end when a visit is over. Recovery depends on adherence to medications, early detection of complications, and consistent communication. Follow-up is often where gaps appear, leading to avoidable hospital readmissions or missed treatment opportunities.

AI follow-up agents can:

- Send reminders for medication schedules, lab tests, or therapy appointments.

- Collect symptom updates through text or voice and flag concerning responses.

- Escalate cases to care managers if patients report worsening conditions.

- Track recovery progress with structured surveys and integrate results into EHRs.

- Provide ongoing education and self-care instructions.

These agents improve outcomes by keeping patients engaged and supported. They also reduce clinician workload by automating routine outreach. The key is to ensure that patients feel cared for by a system that listens and responds, not abandoned to automation.
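The escalation logic for a follow-up check-in can be sketched as a small rule set. Everything here is a hypothetical illustration: the `CheckIn` fields, the pain thresholds, and the keyword list would need to be defined with clinicians for each care pathway.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical keywords; real lists should be defined per care pathway.
CONCERNING_TERMS = ("fever", "bleeding", "worse")


@dataclass
class CheckIn:
    patient_id: str
    pain_score: int        # self-reported, 0 to 10
    took_medication: bool
    free_text: str = ""


def escalation_reasons(current: CheckIn,
                       previous_pain: Optional[int] = None) -> List[str]:
    """Return reasons to alert a care manager; an empty list means routine."""
    reasons = []
    if current.pain_score >= 8:
        reasons.append("severe pain reported")
    if previous_pain is not None and current.pain_score - previous_pain >= 2:
        reasons.append("pain increased since last check-in")
    if not current.took_medication:
        reasons.append("medication missed")
    text = current.free_text.lower()
    for term in CONCERNING_TERMS:
        if term in text:
            reasons.append(f"patient mentioned '{term}'")
    return reasons
```

Returning the reasons, rather than a bare yes or no, gives the care manager context for the alert and leaves a record that can be written back to the EHR.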

Data Foundations for Building AI Agents

Every AI agent begins with data. For healthcare, data quality and governance decide whether the system will work safely. Key foundations include:

- Electronic Health Records (EHRs): Store medical history, allergies, medications, and appointments. Agents must read and write EHR data accurately.

- Fast Healthcare Interoperability Resources (FHIR): Provides a standard format for healthcare data exchange. The Appointment and Patient resources are central to scheduling and follow-up.

- SMART on FHIR: Builds on OAuth 2.0 to authorize agents' access to patient records. Scopes limit each agent to the data it has been granted, which helps keep patients in control of what is shared.

- Practice Management Systems: Coordinate clinician schedules, rooms, and billing. AI patient scheduling agents must link with them to validate availability.

- Consent Management: Stores and enforces patient permissions for data use. Consent must always be respected.

- Audit Trails: Keep a record of every agent action for compliance and transparency.

By building on these standards, organizations avoid fragmented systems and ensure that agents integrate smoothly into existing workflows.
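To make the data standards more concrete, here is a rough sketch of a minimal Appointment resource built as a plain dictionary, with a consent check in front of it. The field names follow the FHIR R4 Appointment resource as commonly documented and should be checked against the specification. The `has_consent` helper, the consent store shape, and the identifiers are hypothetical.

```python
import json
from typing import Dict, Set


def build_appointment(patient_id: str, practitioner_id: str,
                      start: str, end: str) -> dict:
    """Build a minimal FHIR-style Appointment resource as a plain dict."""
    return {
        "resourceType": "Appointment",
        "status": "booked",
        "start": start,  # ISO 8601 instants with a timezone offset
        "end": end,
        "participant": [
            {"actor": {"reference": f"Patient/{patient_id}"}, "status": "accepted"},
            {"actor": {"reference": f"Practitioner/{practitioner_id}"}, "status": "accepted"},
        ],
    }


def has_consent(consents: Dict[str, Set[str]], patient_id: str, purpose: str) -> bool:
    """Hypothetical consent lookup: patient id -> set of permitted purposes."""
    return purpose in consents.get(patient_id, set())


consents = {"123": {"scheduling"}}  # assumed consent store contents
if has_consent(consents, "123", "scheduling"):
    appointment = build_appointment(
        "123", "456", "2025-01-06T09:00:00Z", "2025-01-06T09:30:00Z"
    )
    payload = json.dumps(appointment)  # body an agent could send to a FHIR server
```

The consent check sits before the resource is even built, so an agent without permission never assembles patient data it is not allowed to use.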

Technical Architecture of AI Agents

Once the data flows are clear, the next challenge is how to structure the agent itself. A safe and effective architecture separates conversation from decision-making.

- User Interface: The entry point where patients interact through mobile apps, portals, SMS, or phone. Interfaces should support multiple languages and accessibility needs.

- Orchestration Layer: Handles dialogue flow, routes requests, and applies policies. A language model can process input here, but its output must pass through rules and guardrails. Retrieval-augmented generation can add context from EHR data without exposing raw records.

- Action Layer: Executes tasks such as booking appointments, generating triage outcomes, or sending reminders. Connects securely to EHR and scheduling APIs.

- Data Plane: Manages encryption, logging, monitoring, and human oversight. Provides accountability and supports compliance with the Health Insurance Portability and Accountability Act (HIPAA).

This structure reduces the risk of unsafe outputs and creates transparency across the system.

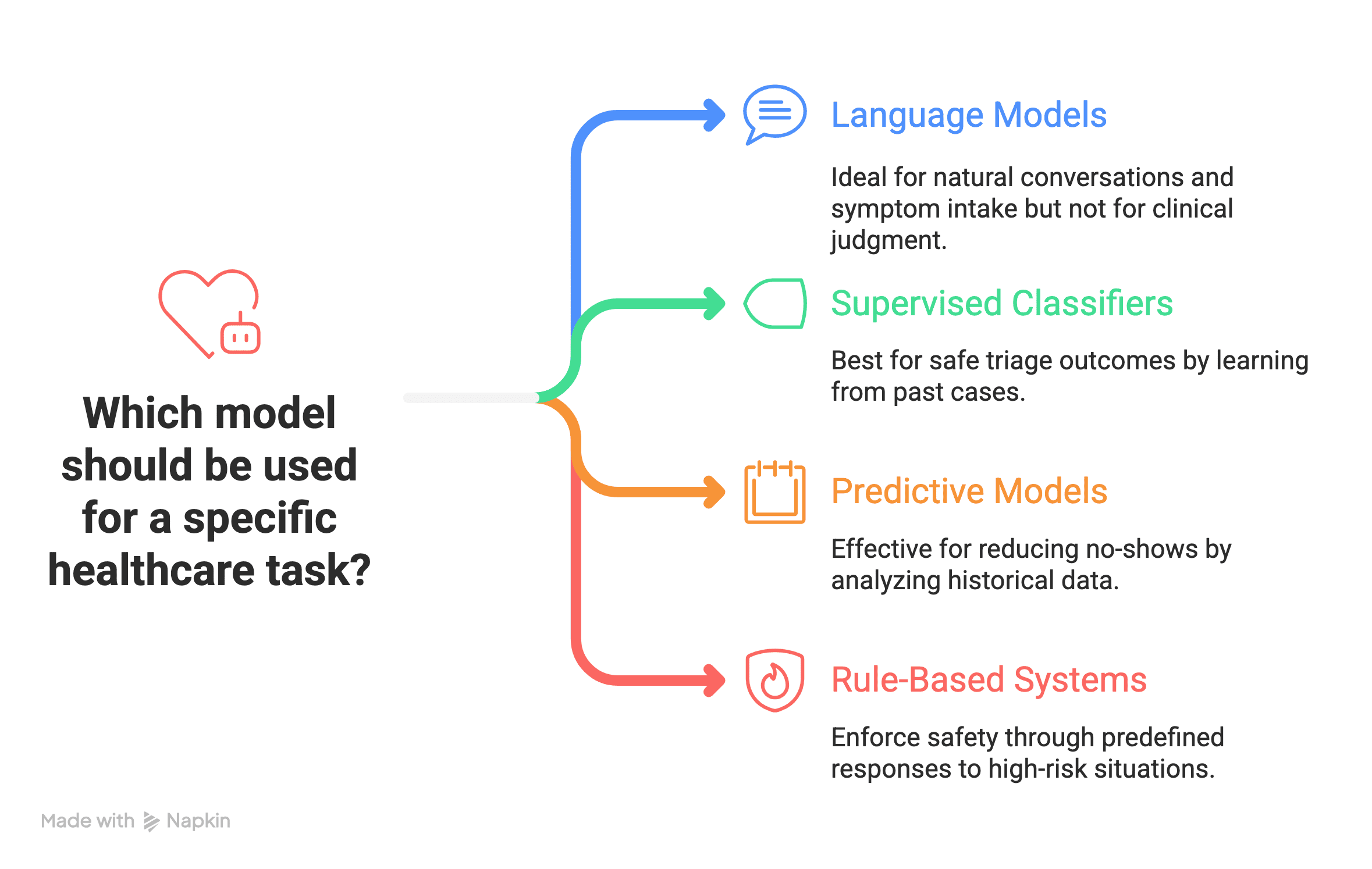
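The separation between conversation and decision-making can be sketched as an orchestrator that treats language model output as a proposal, not a command. In this hedged example, `proposal` stands in for whatever structured output a language model produces. The action names, the required-argument table, and the in-memory `audit_log` are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple


def book_appointment(args: dict) -> str:
    return f"booked {args['patient_id']} at {args['slot']}"


def send_reminder(args: dict) -> str:
    return f"reminder sent to {args['patient_id']}"


# Action layer: the only operations the agent may perform, with required arguments.
ACTIONS: Dict[str, Tuple[Callable[[dict], str], Tuple[str, ...]]] = {
    "book_appointment": (book_appointment, ("patient_id", "slot")),
    "send_reminder": (send_reminder, ("patient_id",)),
}

audit_log: List[dict] = []  # stand-in for the data plane's logging


def orchestrate(proposal: dict) -> str:
    """Apply guardrails to a model-proposed action before anything executes."""
    action = proposal.get("action")
    args = proposal.get("args", {})
    if action not in ACTIONS:
        outcome = "escalated_to_human"   # unknown action: never improvise
    elif any(name not in args for name in ACTIONS[action][1]):
        outcome = "escalated_to_human"   # incomplete request: ask a person
    else:
        outcome = ACTIONS[action][0](args)
    audit_log.append({"proposal": proposal, "outcome": outcome})
    return outcome
```

A proposal such as `{"action": "prescribe_medication", "args": {}}` is never executed because it is not in the allow-list; it is logged and routed to a human instead.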

Choosing the Right Models

The most common mistake is assuming one model can do everything. In practice, you need a hybrid approach. Different tasks require specialized models.

- Language Models: Power natural conversations, symptom intake, and clarification. Best for interaction, not clinical judgment.

- Supervised Classifiers: Provide safe triage outcomes by learning from labeled datasets of past triage cases. Can be tuned to avoid missing urgent conditions.

- Predictive Models for No-shows: Use historical attendance data to identify patients likely to miss appointments and trigger additional reminders.

- Rule-Based Systems: Enforce safety through predefined responses to high-risk symptoms or events.

The best results come from combining models rather than relying on one to do everything.
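As an example of one specialized model in that mix, a no-show predictor can be as simple as a logistic score that decides how many reminders to send. The coefficients, feature names, and threshold below are made up for illustration; a real model would be fitted on historical attendance data and checked for fairness across patient groups.

```python
import math
from typing import Dict

# Hypothetical coefficients, not fitted to any real data.
WEIGHTS = {"prior_no_shows": 0.8, "days_since_booking": 0.03, "is_new_patient": 0.4}
INTERCEPT = -2.5


def no_show_probability(features: Dict[str, float]) -> float:
    """Logistic model: probability that the patient misses the appointment."""
    z = INTERCEPT + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def reminders_to_send(probability: float, threshold: float = 0.3) -> int:
    """Send an extra reminder when the predicted risk crosses the threshold."""
    return 2 if probability >= threshold else 1
```

With these assumed weights, a patient with three prior no-shows crosses the 0.3 threshold and receives a second reminder, while a patient with no risk factors receives one.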

Regulations and Compliance

Healthcare AI must operate within a strict regulatory framework.

- United States Food and Drug Administration (FDA): Reviews AI tools that affect clinical outcomes. Triage agents often fall under this scope. The FDA requires lifecycle management and ongoing monitoring.

- United States Department of Health and Human Services (HHS): Enforces nondiscrimination rules under Section 1557 of the Affordable Care Act. AI agents must be tested for fairness across patient groups.

- Health Insurance Portability and Accountability Act (HIPAA): Governs how Protected Health Information (PHI) is used, stored, and shared. Requires risk analyses, vendor agreements, and breach response plans.

- National Institute of Standards and Technology (NIST): Provides the AI Risk Management Framework, which is becoming a reference for responsible AI practices.

Following these rules builds trust and avoids legal or ethical failures.

Validating AI Agents

The only way to know if your agent is safe is to validate it, first on historical data and then in real workflows. Validation proceeds in stages:

- Retrospective Testing: Run agents on historical data to measure accuracy and fairness.

- Silent Prospective Runs: Deploy in real workflows without exposing outputs, and compare with clinician decisions.

- Human-in-the-Loop Pilots: Allow staff to review agent outputs before action is taken.

- Controlled Trials: Especially important for triage agents that guide urgent care.

- Continuous Monitoring: Detect drift, bias, or errors after deployment.

Validation builds confidence for both patients and regulators.
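For retrospective tests and silent prospective runs, one useful metric is the under-triage rate: the share of cases a clinician judged urgent that the agent did not. The sketch below computes it overall and per patient group. The record fields (`agent`, `clinician`, `group`) and the label values are assumptions about how comparison data might be stored.

```python
from collections import defaultdict
from typing import Dict, List, Optional


def under_triage_rate(records: List[dict]) -> Optional[float]:
    """Share of clinician-urgent cases that the agent labelled as not urgent."""
    urgent = [r for r in records if r["clinician"] == "urgent"]
    if not urgent:
        return None  # the rate is undefined without urgent cases
    missed = sum(1 for r in urgent if r["agent"] != "urgent")
    return missed / len(urgent)


def under_triage_by_group(records: List[dict],
                          group_key: str = "group") -> Dict[str, Optional[float]]:
    """Compute the same rate per subgroup to surface fairness gaps."""
    groups = defaultdict(list)
    for r in records:
        groups[r[group_key]].append(r)
    return {name: under_triage_rate(rows) for name, rows in groups.items()}
```

A large gap between groups in `under_triage_by_group` is a signal to investigate before moving on to a human-in-the-loop pilot.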

Privacy-Preserving Methods

Protecting patient privacy is non-negotiable. Modern techniques include:

- Federated Learning: Train models across multiple sites without sharing raw data.

- Differential Privacy: Add statistical noise to protect individual identities during training.

- De-identification: Remove identifiers from data before model development.

- Synthetic Data: Use artificially generated datasets for safe testing and prototyping.

These methods allow innovation while respecting patient rights.

Arbisoft builds on these foundations through our Generative AI solutions. From fine-tuning to managing large datasets, our methods are designed to embed Gen AI into healthcare workflows with the same rigor. We focus on keeping control, privacy, and fairness at the center so that organizations can innovate confidently without compromising patient trust.
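To give a feel for differential privacy, the sketch below applies the Laplace mechanism to a simple count, for example the number of patients who missed an appointment. This is the simplest instance of the idea, applied to a released statistic instead of model training. The function names and the choice of `epsilon` are illustrative assumptions.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centred on zero with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    # Adding or removing one patient changes a count by at most 1, so the
    # sensitivity is 1 and the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Smaller values of `epsilon` add more noise and give stronger privacy. Choosing the value is a policy decision as much as a technical one.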

Monitoring and MLOps in Deployment

Even with strong design, incidents will happen. A scheduling agent might double-book, a triage agent might misclassify, or a follow-up agent might miss a dangerous response. What matters is how you detect and respond. The key capabilities are:

- Audit Logs: Record every decision and action.

- Dashboards: Track performance metrics such as accuracy, latency, and subgroup fairness.

- Rollback Mechanisms: Quickly revert changes if safety issues arise.

- Incident Response Plans: Outline steps for notifying patients and clinicians when problems occur.

Machine Learning Operations (MLOps) platforms support these needs by versioning models, tracking lineage, and enabling safe rollouts.
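One common way to make audit logs tamper-evident is to chain entries together with hashes, so that altering any past entry breaks every hash after it. The sketch below is one possible design, not a prescribed one. The `AuditLog` class and its fields are hypothetical, and timestamps and durable storage are left out to keep it short.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    # Serialize with sorted keys so the same content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log where each entry commits to the one before it."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self) -> None:
        self.entries = []

    def append(self, actor: str, action: str, detail: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"actor": actor, "action": action, "detail": detail, "prev_hash": prev_hash}
        entry = dict(body, hash=_digest(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edit to a past entry makes this fail."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

Running `verify()` on a schedule, and alerting when it fails, turns the log from a passive record into an active monitoring signal.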

Building Trust with Patients and Clinicians

Technology works only when people trust it. For patients, that means transparency. They must know when they are speaking with an AI agent, what data is being used, and how to reach a human at any moment. For clinicians, that means control. They must be able to override, correct, and guide the agent.

Multilingual access, plain language, and culturally sensitive phrasing are also part of trust. If patients feel excluded or confused, they will disengage.

This is where Arbisoft’s experience matters. Over the years, Arbisoft’s healthcare solutions have supported medical education and patient care across diverse settings, always with an emphasis on accessibility, empathy, and reliability. That same commitment guides how we now build AI agents for scheduling, triage, and follow-ups.

Trust is not a byproduct of accuracy alone. It is built by clarity, respect, and the ability to escalate when needed.

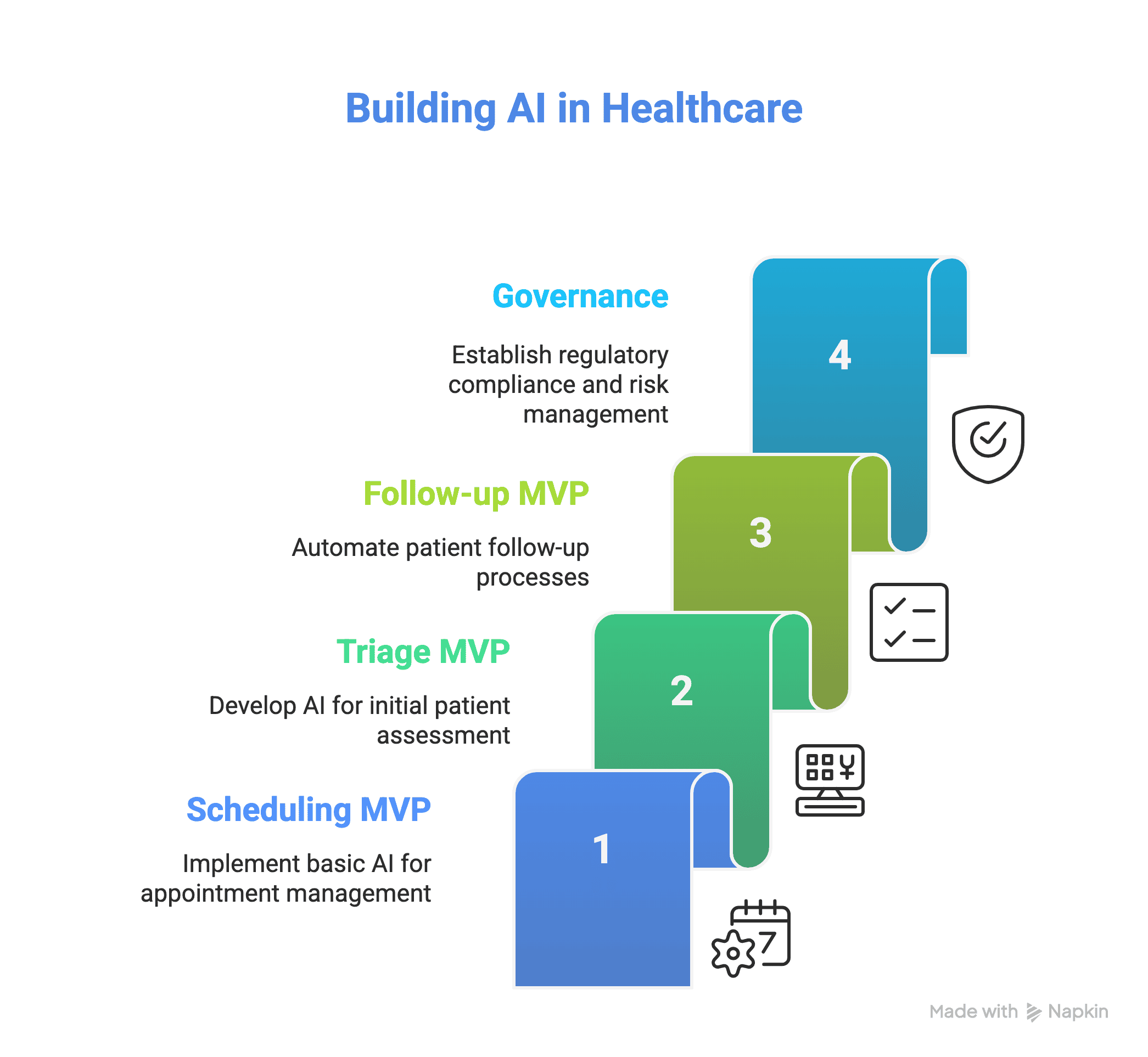

A Practical Roadmap

Many organizations wonder where to start. The safest path is to begin with low-risk scheduling and move toward higher-stakes triage only after mastering governance.

- Scheduling MVP: Integrate SMART on FHIR for appointments, deploy predictive no-show scoring, and pilot automated reminders. Include logging and override controls.

- Triage MVP: Start with language models for intake, but base decisions on validated classifiers. Run silent prospective validation before real deployment. Always include red flag rules.

- Follow-up MVP: Launch simple automated checklists and reminders, with clear escalation to care managers. Expand gradually as trust builds.

- Governance: Conduct risk assessments, prepare model cards, document clinical evidence, and align with FDA and HHS guidance.

By sequencing adoption this way, you deliver value early while building the foundation for higher-risk use cases.

Managing Risks

Every agent carries risks that must be managed.

- Clinical Risk: Reduced with conservative thresholds, rules, and human oversight.

- Privacy Risk: Managed through encryption, privacy-preserving techniques, and legal agreements.

- Bias Risk: Addressed by fairness testing and inclusive design.

- Compliance Risk: Avoided by aligning with FDA, HHS, HIPAA, and NIST guidance.

Responsible risk management is part of building safe systems.

Conclusion: Building Agents That Strengthen Care

Scheduling, triage, and follow-ups define how patients move through healthcare. They decide when access begins, how urgency is recognized, and how recovery is supported. These are the touchpoints where patients often feel either cared for or left behind.

AI agents can make these steps smoother and safer. They bring efficiency for clinicians and reliability for patients. But their value comes only when built with strong foundations: accurate data, layered architecture, careful validation, privacy safeguards, and ongoing oversight.

The path is clear. With responsible design, AI agents can turn routine processes into moments of support and trust. They can transform healthcare into a system that feels more connected, responsive, and humane.