How to Deploy AI Agents for Order Tracking and Customer Support Automation

When a customer asks, “Where is my order?”, they expect an instant, reliable answer. Traditional customer service teams often spend hours resolving these repetitive inquiries, while complex cases wait in the queue. AI agents can change that. By connecting directly to order management systems, shipping APIs, and customer communication channels, they deliver fast updates, manage basic requests, and escalate only when necessary.

In the world of e-commerce AI customer service, this approach transforms how businesses handle post-purchase interactions, ensuring customers get quick, consistent, and reliable responses.

In this blog, we will walk you through the step-by-step process of deploying AI agents for order tracking and customer support automation.

Why Order Tracking And Support Automation Matter

Customers often contact support teams to check the status of their orders or raise concerns about what they received. These questions are usually straightforward, but their sheer volume can quickly overwhelm service teams. Because they follow clear, predictable patterns, they are well suited to automation.

With the right AI agent, customers can instantly check order status, track shipments, get answers to policy questions, and even start returns or refunds. AI order tracking eliminates guesswork by pulling verified shipping data from multiple carriers, giving customers clear and consistent updates every time they ask.

For businesses, this shift is equally valuable. Instead of spending time on repetitive inquiries, support teams can focus on more complex and meaningful interactions.

Companies adopting e-commerce AI customer service solutions report faster resolutions, more satisfied customers, and reduced operational costs, making automation a critical part of modern customer engagement.

When automation also covers tasks like cancellations, returns, or eligibility checks, its value multiplies even further. In fact, 80% of customers who have interacted with AI in customer service report a positive experience, which suggests that automation can improve satisfaction while reducing support load.

With this foundation in place, the next step is to define what success looks like and how it should be measured.

Defining Scope and Success Metrics

It is essential to clarify what the AI agent should handle and how success will be measured. The agent must address intents such as:

- Providing accurate current order status and estimated delivery times

- Allowing address updates before fulfillment when permitted

- Enabling eligible order cancellations

- Starting returns or exchanges

- Verifying refund eligibility

- Explaining relevant policy or product details

To measure effectiveness, use the following key metrics:

- Automated Containment Rate: Shows how often inquiries are resolved without escalation

- First-Contact Resolution: Tracks how many cases are resolved in the first interaction

- Average Handle Time: Measures the speed of resolution

- Customer Satisfaction (CSAT): Reflects scores from feedback surveys

- Handoff Quality: Evaluates the context passed to human agents when escalation occurs
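
As a rough illustration, the first four metrics could be computed from conversation logs along these lines. This is a minimal sketch: the `Conversation` record and its field names are hypothetical, and teams define these metrics in slightly different ways (for example, CSAT is treated here as the share of survey responses scoring 4 or 5).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Conversation:
    """Hypothetical log record for one customer conversation."""
    escalated: bool                # True if handed to a human agent
    resolved_first_contact: bool   # True if resolved without a repeat contact
    handle_seconds: float          # time from first message to resolution
    csat: Optional[int] = None     # 1-5 survey score, None if no response


def support_metrics(conversations: list[Conversation]) -> dict[str, float]:
    """Compute headline metrics for a non-empty batch of conversations."""
    total = len(conversations)
    if total == 0:
        raise ValueError("need at least one conversation")
    contained = sum(1 for c in conversations if not c.escalated)
    first_contact = sum(1 for c in conversations if c.resolved_first_contact)
    scores = [c.csat for c in conversations if c.csat is not None]
    return {
        "containment_rate": contained / total,
        "first_contact_resolution": first_contact / total,
        "average_handle_seconds": sum(c.handle_seconds for c in conversations) / total,
        # Assumed convention: CSAT = share of responses scoring 4 or 5.
        "csat": sum(1 for s in scores if s >= 4) / len(scores) if scores else float("nan"),
    }
```

Handoff quality is harder to reduce to a formula and is usually judged by human agents reviewing escalated tickets.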

With these goals in place, the next step is to design the underlying architecture needed to support this functionality.

Building The Reference Architecture

Deploying AI agents is like building a house. You need layers that work together, each supporting the next. Companies that deploy e-commerce AI customer service platforms effectively usually follow a five-layer architecture that supports speed, accuracy, and scalability.

- Channel Ingestion: This is where customers talk to the agent. It could be website chat, WhatsApp, SMS, or voice calls. Twilio provides APIs for WhatsApp and SMS, while OpenAI’s Realtime API allows natural voice conversations and even connects with phone systems using SIP calling.

- Cognitive Agent Core: This is the brain. The large language model receives the customer’s request, decides what to do, and generates a reply. In some cases, multiple smaller AI agents work together, using frameworks like Microsoft AutoGen, to split tasks such as looking up an order versus processing a return.

- Enterprise Tools: These tools connect the AI to real business systems. Shopify’s Admin GraphQL API allows access to live order data and provides a link (statusPageUrl) that customers can use to check their order. EasyPost collects tracking information from carriers like UPS, FedEx, and DHL, making it consistent across shipping partners. Messaging tools ensure customers receive proactive updates.

- Safety and Compliance: This layer protects both customers and businesses. Services like AWS Bedrock Guardrails and Azure AI Content Safety filter harmful or sensitive content and enforce rules before the AI responds.

- Observability and Monitoring: Once the system is running, you need to measure its performance. Tools like LangSmith let you log conversations, track errors, and analyze how well the AI is working.

When all these layers are in place, the system has a solid foundation. The next step is to connect the data that powers customer interactions.

Wiring The Data Layer

For an AI agent, information is everything. Without data, it cannot give reliable answers. Usually, three types of data must be connected:

- Live Order Context: This includes order ID, payment and fulfillment status, and customer details. Shopify’s API makes this available, including the new statusPageUrl, which customers can click to verify order details themselves.

- Shipping and Carrier Events: EasyPost’s Tracking API combines updates from multiple carriers into a single format. Instead of trying to read FedEx, UPS, and DHL separately, the AI receives clear status labels like “in transit” or “delivered.” This unified data structure is the foundation of AI order tracking, ensuring the agent always provides accurate, real-time updates.

- Secure References: To protect privacy, sensitive details like full addresses or payment info should not be shown directly. Instead, the AI can give verified links or use masked identifiers. This builds trust without exposing private data.
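
To make the secure-references idea concrete, here is a small sketch of masking identifiers before they reach a customer-facing reply. The helper names and the shape of the `order` dictionary are assumptions for illustration; they are not part of Shopify's or EasyPost's APIs.

```python
def mask_email(email: str) -> str:
    """Keep the first character and the domain, hide the rest of the local part."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return "***"
    return f"{local[0]}***@{domain}"


def mask_identifier(value: str, visible: int = 4) -> str:
    """Show only the last `visible` characters of an identifier."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def customer_safe_context(order: dict) -> dict:
    """Build the only view of an order that the agent may quote back."""
    return {
        "order": mask_identifier(order["id"]),
        "email": mask_email(order["email"]),
        "status": order["status"],
        # A verified link lets the customer see full details themselves.
        "status_link": order["status_page_url"],
    }
```

Keeping masking in one place means the agent never sees the raw address or payment fields, so it cannot repeat them by accident.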

Once the data is connected, the AI needs a clear set of steps to follow in every conversation.

Designing The Agent Workflow

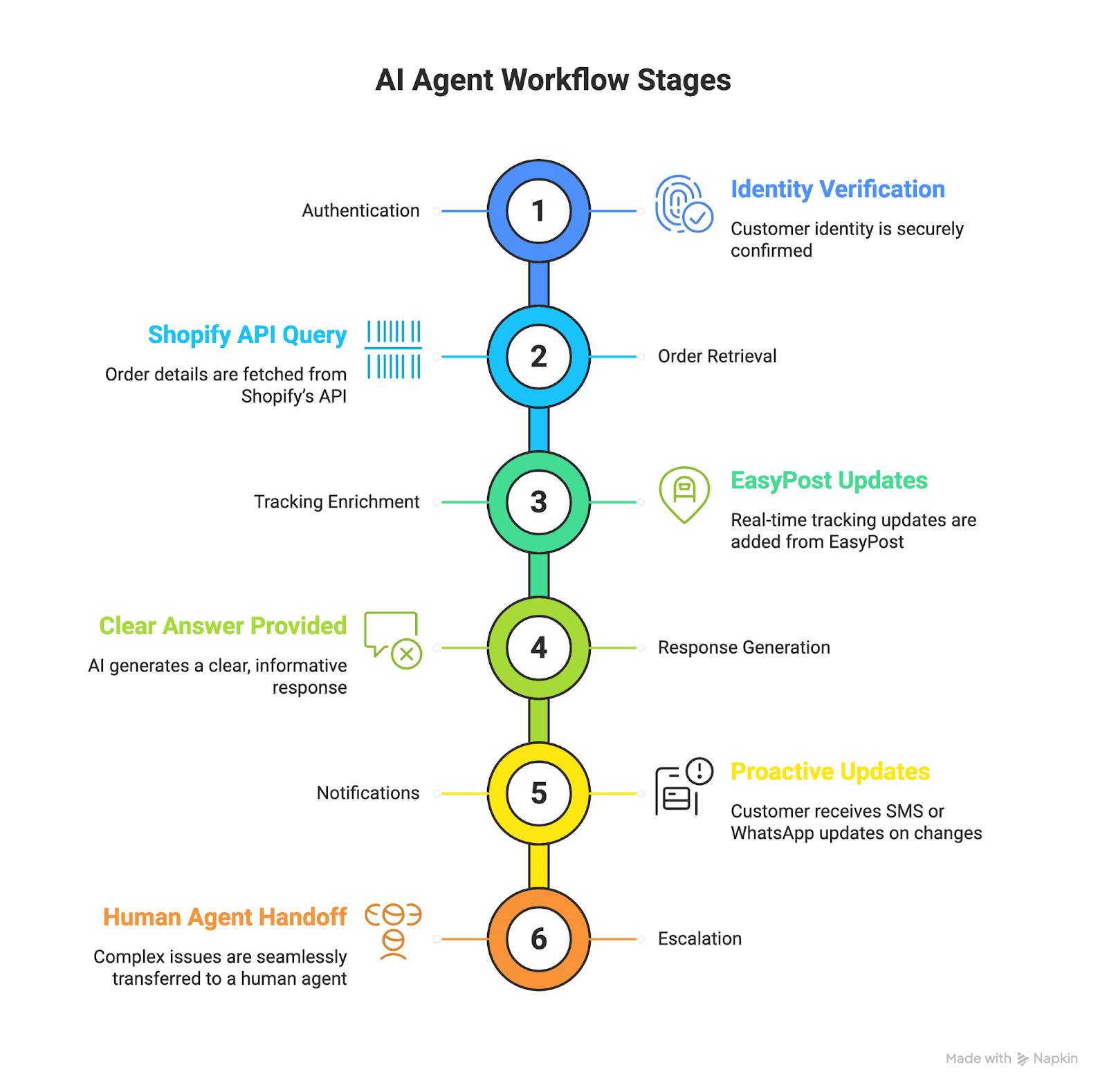

Think of the workflow as the rules the AI follows in each customer interaction. A basic workflow includes six stages:

- Authentication: Verify identity through email, code, or a secure status link.

- Order Retrieval: Pull order details from Shopify’s API.

- Tracking Enrichment: Add real-time updates from EasyPost.

- Response Generation: Give a clear answer, such as “Your order is in transit and should arrive Tuesday.”

- Notifications: Send updates through SMS or WhatsApp if something changes, like a delay.

- Escalation: If the AI cannot solve the issue, hand the customer over to a human agent with full context.
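
The stages above can be sketched as a single function. This is an illustrative outline only: `fetch_order` and `fetch_tracking` are hypothetical callables standing in for your Shopify and EasyPost integrations, the dictionary keys are assumed, and the notification stage is left out because it runs outside the request path.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Reply:
    text: str
    escalate: bool = False


def handle_order_query(
    email: str,
    order_number: str,
    fetch_order: Callable[[str], Optional[dict]],
    fetch_tracking: Callable[[str, str], Optional[dict]],
) -> Reply:
    # Order retrieval: look the order up first so there is something to verify against.
    order = fetch_order(order_number)
    if order is None:
        return Reply("I could not find that order. Let me connect you with a colleague.", escalate=True)
    # Authentication: the supplied email must match the email on the order.
    if order["email"].lower() != email.strip().lower():
        return Reply("I could not verify your identity for this order.", escalate=True)
    # Tracking enrichment: ask the tracking integration for the latest status.
    tracking = fetch_tracking(order["carrier"], order["tracking_id"])
    if tracking is None:
        # Escalation: no confirmed data, so hand over instead of guessing.
        return Reply("I cannot confirm the shipment status right now. I am handing this to a human agent.", escalate=True)
    # Response generation: every fact in the reply comes from tool data.
    return Reply(f"Your order is {tracking['status']} and should arrive {tracking['eta']}.")
```

Passing the lookups in as parameters keeps the flow testable without network access and makes it easy to swap providers later.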

This flow ensures fast answers while keeping complex or unusual cases in human hands. But to work safely, the system needs strict guardrails.

Guardrails And Building Trust

Customers will only trust an AI agent if it always stays safe, accurate, and respectful. Companies have relied on three key guardrail strategies:

- Content Filtering: AWS Bedrock Guardrails and Azure AI Content Safety block unsafe language, fraud attempts, and sensitive content before the AI responds.

- Groundedness Rules: The AI is forbidden to guess or hallucinate. If it cannot confirm the data (e.g., no carrier update available), it must escalate instead of inventing an answer.

- Privacy Safeguards: Mask personal data in logs, tokenize IDs, and only use secure links when sharing information.
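
A groundedness rule can start out very simple. The sketch below checks a draft reply against the tracking data before it is sent; the status vocabulary is a hypothetical example, and a production check would likely cover dates and order facts as well.

```python
from typing import Optional

# Hypothetical status vocabulary; use the labels your tracking provider returns.
KNOWN_STATUSES = ("pre-transit", "in transit", "out for delivery", "delivered", "delayed")


def is_grounded(draft: str, tracking: Optional[dict]) -> bool:
    """Return True only if every status phrase in the draft matches the tool data."""
    text = draft.lower()
    claimed = {status for status in KNOWN_STATUSES if status in text}
    if not claimed:
        return True   # the draft makes no shipment claim, nothing to verify
    if tracking is None:
        return False  # a claim was made with no tool data behind it
    return claimed == {tracking["status"].lower()}
```

If the check fails, the agent should discard the draft and escalate rather than send it.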

These rules ensure conversations are not only helpful but also responsible.

Supporting Multiple Customer Channels

Customers no longer stay on a single support channel. A conversation might begin on a website chat, move to WhatsApp while the customer is traveling, and end with a phone call if further help is needed. Modern AI agents are expected to follow these conversations seamlessly across every stage.

For voice support, OpenAI’s Realtime API enables speech-to-speech conversations that happen almost instantly. With SIP integration, this capability connects directly to existing call centers and replaces outdated menu-driven phone trees with natural conversations.

On WhatsApp, Twilio’s Business API allows businesses to send verified order updates and handle two-way interactions. Since 2025, WhatsApp Business Calling has added more flexibility by giving customers the option to switch from chat to a phone call without leaving the app.

To create a unified experience, platforms such as Zendesk Sunshine Conversations bring all customer interactions together across web, WhatsApp, SMS, and email into one record. This prevents customers from having to repeat information each time they change channels.
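
Conceptually, a unified record is just every message for a customer merged into one ordered timeline, whatever channel it arrived on. The sketch below shows that idea with a hypothetical `Message` type; it is not how any particular platform stores conversations.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    customer_id: str
    channel: str       # e.g. "web", "whatsapp", "sms", "email"
    timestamp: float   # seconds since epoch
    text: str


def unified_threads(messages: list[Message]) -> dict[str, list[Message]]:
    """Group messages by customer and order each thread by time."""
    threads: dict[str, list[Message]] = defaultdict(list)
    for message in messages:
        threads[message.customer_id].append(message)
    for thread in threads.values():
        thread.sort(key=lambda m: m.timestamp)
    return dict(threads)
```

The hard part in practice is resolving one customer identity across channels (phone number, email, login), which this sketch assumes has already been done.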

When AI agents support multiple channels in this way, they function as true extensions of the customer service team. The result is a consistent and reliable experience no matter where the conversation starts.

This multichannel capability is one of the strongest advantages of e-commerce AI customer service, giving customers a unified, seamless experience across web, chat, voice, and mobile.

P.S. For e-commerce businesses, speed matters. Customers expect instant answers, and delays can cost a sale. Arbisoft builds chatbot solutions that keep your store available around the clock, handling inquiries, providing quick responses, and ensuring shoppers stay engaged through checkout.

Step-By-Step Deployment Process

The deployment phase usually covers four stages: foundation, build, pilot, and scale. Let’s cover each in a bit more detail.

Stage 1: Foundation: Prepare Systems and Contracts

Goal: Create a safe, minimal working system that can return factual order and tracking data.

What to do and why it matters:

- Confirm business scope and acceptance metrics. Decide which customer intents the agent must handle at launch, for example, order status, basic cancellations, returns initiation, and refund eligibility. These scope decisions drive all technical work.

- Define data contracts. Produce function schemas for each backend operation the agent will call, for example, getOrder(locator) and getTracking(carrier, trackingId). These contracts must specify input types, required fields, and exact output JSON structure so the agent always receives the same shape of data.

- Provision API access to commerce and carriers. Create scoped credentials for Shopify or your OMS and for EasyPost or your chosen tracking aggregator. Use read-only keys for status lookups and separate keys for privileged actions.

- Implement basic channel plumbing. Stand up a test web chat widget and connect a WhatsApp/SMS sandbox, and enable a test voice endpoint if you plan to use voice later.

- Put basic guardrails in place. Configure content filters and a minimal rule that blocks any response claiming order facts unless a successful getOrder and getTracking call returned data.
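
As an example of what a data contract might look like, here is a sketch of input schemas for `getOrder` and `getTracking`, written in the JSON Schema style commonly used for LLM tool definitions, plus a tiny validator. The schema layout and the `validate_args` helper are assumptions for illustration; a real contract would also describe the output structure.

```python
GET_ORDER = {
    "name": "getOrder",
    "parameters": {
        "type": "object",
        "properties": {"locator": {"type": "string"}},
        "required": ["locator"],
    },
}

GET_TRACKING = {
    "name": "getTracking",
    "parameters": {
        "type": "object",
        "properties": {
            "carrier": {"type": "string"},
            "trackingId": {"type": "string"},
        },
        "required": ["carrier", "trackingId"],
    },
}

# Maps schema type names to Python types for the simple check below.
_PY_TYPES = {"string": str, "number": (int, float), "boolean": bool}


def validate_args(schema: dict, args: dict) -> list[str]:
    """Return a list of problems; an empty list means the arguments fit the contract."""
    params = schema["parameters"]
    problems = [f"missing field: {name}" for name in params["required"] if name not in args]
    for name, value in args.items():
        spec = params["properties"].get(name)
        if spec is None:
            problems.append(f"unexpected field: {name}")
        elif not isinstance(value, _PY_TYPES[spec["type"]]):
            problems.append(f"wrong type for {name}: expected {spec['type']}")
    return problems
```

Rejecting malformed calls before they reach the backend keeps errors visible in the agent's logs instead of surfacing as confusing API failures.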

Who owns it:

- Product Manager to define scope and success metrics

- Backend Engineer and Data Engineer to define contracts and wire APIs

- DevOps to provision credentials and sandboxes

- Security lead to set guardrail defaults

Deliverables and acceptance:

- Function schemas documented and signed off

- Working API connections in a test environment

- A simple chat and messaging sandbox that can display factual data returned from the APIs

- A minimal safety rule that prevents unverified claims

When this stage is complete, you have a safe sandbox that proves the agent can fetch real data and not invent answers. The next stage is to build the agent logic and the human handling paths on top of that foundation.

Stage 2: Build: Agent Logic, Tools, And Human Handoff

Goal: Implement the AI agent core, its tool integration, and the handoff paths for escalation.

What to do and why it matters:

- Implement the cognitive layer that calls the functions defined in the data contracts. Ensure every user-visible order or ETA statement in the agent response is sourced to a tool return in your logs.

- Build strict tool call logic. The agent should only format and surface the data the tools return. If a tool returns no data or an error, the agent must follow a scripted fallback: apology, short explanation, and escalation offer.

- Create human escalation flows. When the AI agent cannot resolve the issue, it should open a ticket in your helpdesk with the conversation transcript, API call logs, and suggested next steps for the human agent. Include a short summary the human agent can read in 10 seconds.

- Develop policy prompts and templates. Create short, explicit templates that tell the agent how to phrase answers, how to refuse when necessary, and how to ask clarifying authentication questions.

- Implement basic telemetry. Log each conversation, the tool calls made, the tool outputs, whether the interaction was solved or escalated, and safety filter activations.
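
The scripted fallback and the escalation ticket could look something like this. It is a sketch under assumptions: the `Ticket` fields and the tool-call log format (`name`, `ok`) are hypothetical and would need to match your helpdesk and logging setup.

```python
from dataclasses import dataclass, field

# Scripted fallback: apology, short explanation, escalation offer.
FALLBACK = (
    "Sorry, I could not confirm that just now. "
    "Our tracking lookup did not return a result. "
    "Would you like me to pass this to a support agent?"
)


@dataclass
class Ticket:
    summary: str
    transcript: list[str]
    tool_calls: list[dict]
    next_steps: list[str] = field(default_factory=list)


def build_ticket(transcript: list[str], tool_calls: list[dict]) -> Ticket:
    """Bundle the context a human agent needs, with a short summary on top."""
    first = transcript[0] if transcript else "(no messages)"
    failed = [call["name"] for call in tool_calls if not call.get("ok")]
    summary = (
        f"Customer asked: {first!r}. "
        f"Failed tools: {', '.join(failed) if failed else 'none'}."
    )
    steps = [f"Retry {name} manually" for name in failed]
    return Ticket(summary, transcript, tool_calls, steps)
```

The summary is deliberately short so that a human agent can pick up the case without rereading the whole transcript.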

Who owns it:

- AI Engineer to implement the LLM integration and tool calls

- Backend and Integration Engineers to surface the data reliably

- Support Ops to design the escalation content and ticket templates

- Observability Engineer to wire logs and traces

Deliverables and acceptance:

- Agent that returns grounded answers in test conversations

- Escalation tickets created automatically with all necessary context

- Policy templates that are tested against sample prompts and obey safety rules

- Telemetry that captures tool call success/failure and escalation counts

When this stage passes acceptance tests, you have a functional agent that is prevented from inventing facts and that hands off properly to humans. The next stage is to validate performance with real users in a controlled way.

Stage 3: Pilot: Real Traffic, Limited Scope, Measure Heavily

Goal: Run the agent with a subset of customers to measure real behavior and tune for production.

How to run the pilot:

- Choose the pilot cohort: Select a limited percentage of traffic or a customer segment where order patterns are simple and returns are rare. This reduces noise while you measure behavior.

- Set containment and safety targets: For example, aim for a containment rate of 30 to 50 percent for order status queries and zero groundedness lapses, meaning no cases where the agent states facts without tool confirmation.

- Monitor live metrics: Track automated containment rate, escalation rate, first contact resolution, AHT for human-handled cases, CSAT, and safety filter activations.

- Run daily reviews: Each day, review a sample of conversations that were auto-resolved, escalated, and flagged by safety filters. Use these reviews to refine prompts, guardrails, and escalation summaries.

- Add human-in-the-loop for edge cases: Route suspicious or high-value cases to a live agent for confirmation, and use those examples to improve the agent over time.
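
One common way to route a fixed percentage of traffic to a pilot is deterministic hashing, so the same customer always gets the same experience. The sketch below assumes a stable `customer_id` is available; the function name and salt are hypothetical.

```python
import hashlib


def in_pilot(customer_id: str, percent: int, salt: str = "order-agent-pilot") -> bool:
    """Deterministically place roughly `percent` of customers in the pilot."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{salt}:{customer_id}".encode("utf-8")).digest()
    # Map the hash to a stable bucket from 0 to 99.
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < percent
```

Because each customer's bucket never changes, raising `percent` later only adds customers to the pilot and never removes anyone already in it.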

Who owns it:

- Product and Support leads to choose cohorts and acceptance thresholds

- Data and Observability to create dashboards and daily reports

- AI and Prompt Engineers to tune behavior rapidly based on observations

- Customer Support to own live human review and agent feedback loops

Deliverables and acceptance:

- Pilot metrics meeting or exceeding containment and CSAT thresholds you set

- No production security incidents

- Clear action list of prompt, tool, or data fixes resulting from pilot reviews

A successful pilot proves the agent works with real users and surfaces the quirks you cannot reproduce in a test environment. With pilot learnings incorporated, you will prepare for scale.

At scale, AI order tracking seamlessly integrates with customer support systems, enabling consistent responses even during high-volume shopping periods.

Stage 4: Scale: Gradual Ramp and Full Production Controls

Goal: Expand agent coverage and traffic while preserving safety and metrics.

Steps to scale with confidence:

- Ramp traffic gradually: Increase traffic in measured steps such as 10%, 25% and so on, while observing the KPIs. Gate each increase with checklists for containment, error rate, and CSAT.

- Harden reliability: Add redundancy for API calls, implement caching strategies for non-critical data, and apply rate limiting with backoff to avoid carrier or OMS throttles.

- Add more intents incrementally: After order status is stable, add returns, cancellations, and policy lookups one at a time, each with its own pilot and acceptance criteria.

- Automate monitoring and alerting: Create alerts that trigger when groundedness errors or safety violations exceed thresholds, or when escalation volume spikes unexpectedly.

- Invest in continuous training data: Store high-value transcripts and labeled outcomes to expand validation and retraining datasets.
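
For the reliability step, a retry wrapper with exponential backoff and jitter is a typical starting point. This is a generic sketch, not tied to any carrier or OMS client; the default delays are illustrative, and a production version would retry only on throttling or transient errors rather than on every exception.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_backoff(
    fn: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on any exception with exponential backoff and full jitter."""
    for attempt in range(attempts):
        try:
            return fn()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: surface the error to the caller
            # The delay ceiling doubles each attempt, capped at max_delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
    raise RuntimeError("attempts must be at least 1")
```

The `sleep` parameter exists so tests can run without real waiting; in normal use it can be left at its default.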

Who owns it:

- Engineering leaders for scaling strategy and reliability

- DevOps for infrastructure scaling and cost optimization

- Legal and Compliance for expanded geographic coverage and data residency

- Product and Data Science for ongoing model evaluation

Deliverables and acceptance:

- Full monitoring and automated alerts in production

- Stable containment and CSAT at scale

- Documented rollback plan and canary release procedures

When production KPIs stabilize, you can consider the agent part of your standard support stack and begin continuous improvement cycles.

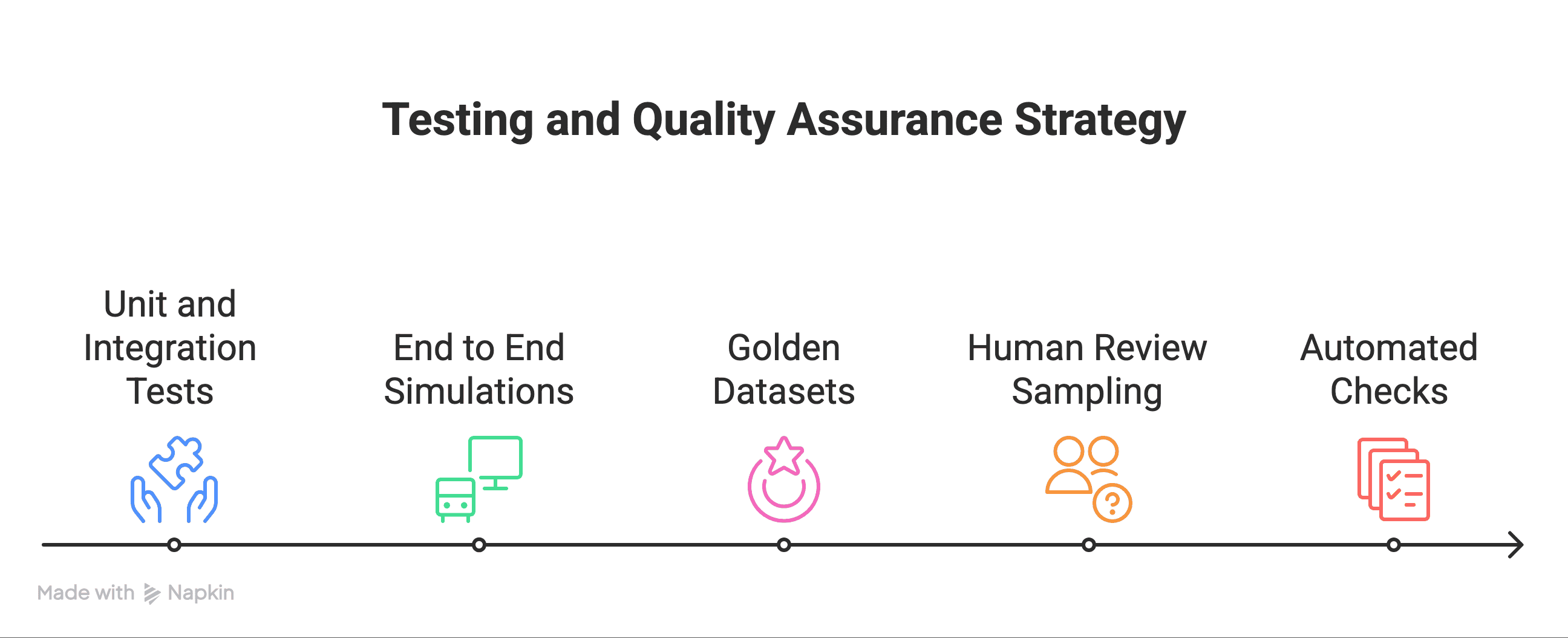

Testing, Quality Assurance, And Regression Strategy

This flow of tests prevents small code or data changes from introducing regressions at scale.

Operational Playbook: Monitoring, Alerts, And Incident Response

A clear runbook prevents small problems from becoming outages. You can do simple things like:

- Define alerts for high error rates, sudden spikes in escalations, or safety filter activations.

- Create a priority matrix that maps alert severity to response SLAs and owners.

- Prepare a rollback checklist that includes how to disable the agent on a channel, how to route traffic back to human agents, and how to preserve logs for postmortem.

- Hold regular postmortems on incidents and feed the findings back into prompts, guardrails, and tool contracts.
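
A priority matrix can be kept as plain data so that it is easy to review and change. In the sketch below, every threshold, owner, and response time is a made-up placeholder; substitute the values your team agrees on.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Policy:
    owner: str
    respond_within_minutes: int


# Placeholder owners and SLAs for each severity level.
PRIORITY_MATRIX = {
    "critical": Policy("on-call engineer", 15),
    "high": Policy("support ops lead", 60),
}

# Placeholder thresholds per metric: (high, critical).
THRESHOLDS = {
    "tool_error_rate": (0.05, 0.20),
    "escalation_rate": (0.60, 0.85),
    "safety_filter_rate": (0.02, 0.10),
}


def severity(metric: str, value: float) -> Optional[str]:
    """Classify a metric reading, or return None if it is within normal range."""
    high, critical = THRESHOLDS[metric]
    if value >= critical:
        return "critical"
    if value >= high:
        return "high"
    return None


def route_alert(metric: str, value: float) -> Optional[Policy]:
    """Return who should respond and how quickly, or None if no alert is needed."""
    level = severity(metric, value)
    return PRIORITY_MATRIX[level] if level else None
```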

A tested operational playbook keeps customer impact small and fixes fast.

KPIs, Targets, And Acceptance Criteria

Use measurable targets to judge success and to gate rollouts:

- Initial containment target: 30% to 50% for order status in the pilot

- Target AHT reduction for human agents: Measurable improvement vs baseline

- CSAT: Equal or better than human-only baseline

- Groundedness error rate: Zero critical incidents and a very low acceptable rate for minor issues

- Escalation quality: Human agents should be able to resolve 90 percent of escalations without extra follow-up.
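
These targets can double as an automated gate before each traffic increase. The sketch below encodes the thresholds listed above; the KPI key names are hypothetical and the 30% floor is the lower end of the pilot containment range.

```python
def failed_gates(kpis: dict[str, float], baseline_csat: float) -> list[str]:
    """Return the checks that failed; an empty list means the rollout may proceed."""
    failures = []
    if kpis["containment_rate"] < 0.30:
        failures.append("containment below 30%")
    if kpis["csat"] < baseline_csat:
        failures.append("CSAT below human-only baseline")
    if kpis["critical_groundedness_incidents"] > 0:
        failures.append("critical groundedness incident")
    if kpis["escalations_resolved_without_followup"] < 0.90:
        failures.append("escalation quality below 90%")
    return failures
```

Returning the list of failures, rather than a single pass or fail flag, tells the team exactly what to fix before the next ramp step.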

These metrics should appear in dashboards and be reviewed in regular business reviews.

Final Quick Checklist To Start Today

If you want a checklist you can act on now, here it is in one place:

- Define scope, success metrics, and acceptance gates.

- Document function contracts for order retrieval and tracking.

- Provision test API keys for OMS/Shopify and a tracking aggregator.

- Implement minimal guardrails that prevent unverified claims.

- Build a simple web chat and a WhatsApp sandbox for pilot testing.

- Prepare human escalation templates and ticket creation rules.

- Create dashboards for containment, CSAT, AHT, escalation rate, and groundedness errors.

- Plan phased ramping with canary releases and rollback steps.

When each box is checked, you are ready to move from foundation to pilot and then to scale.

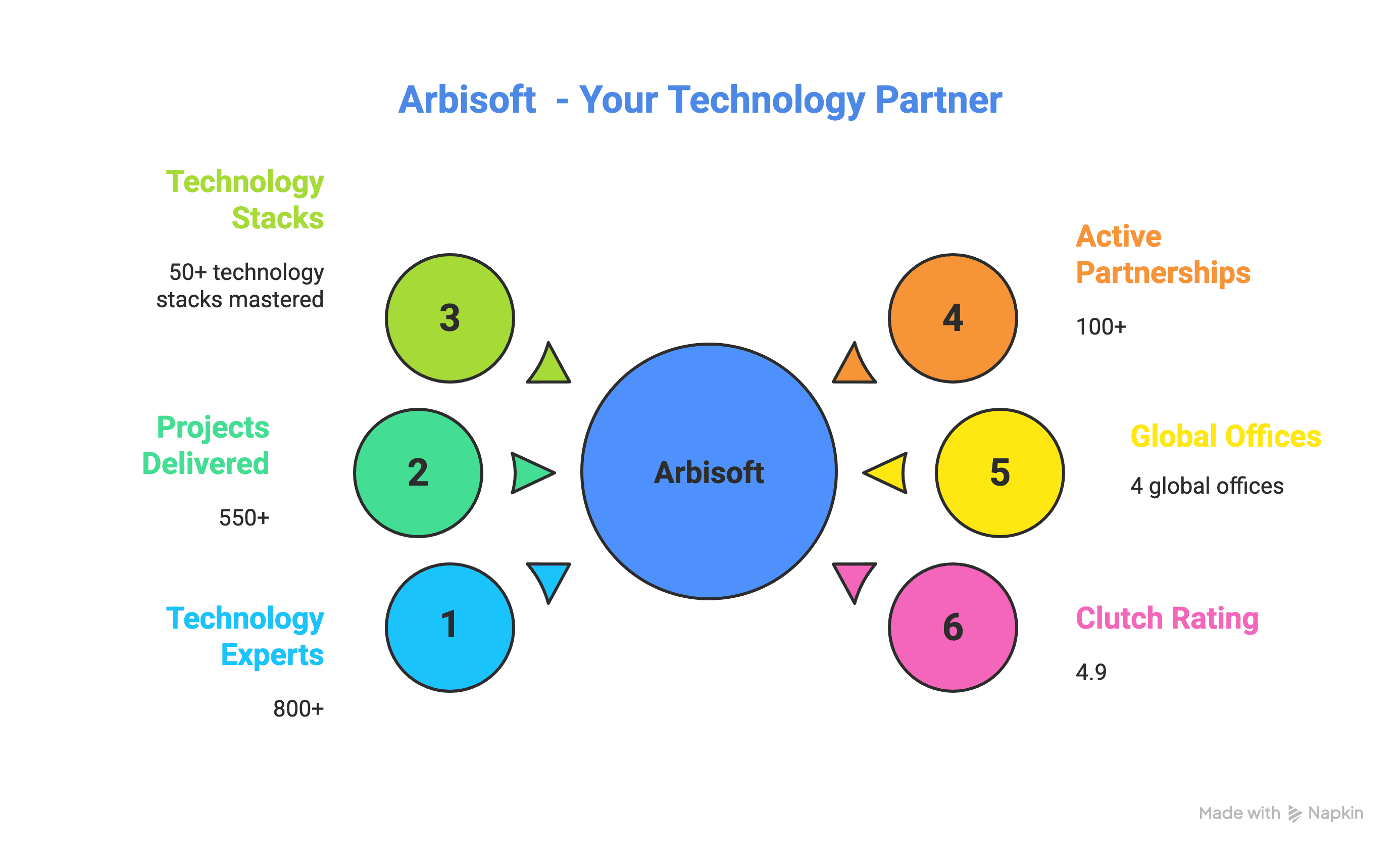

Why Choose Arbisoft as Your Technology Partner?

Deploying AI agents is not just about integrating tools; it requires a partner who understands how to design systems that are safe, scalable, and enterprise-ready. Arbisoft brings this expertise with a proven track record of delivering e-commerce AI customer service solutions across industries.

Generative AI, Done Responsibly

Arbisoft helps organizations incorporate generative AI into their workflows with a focus on control, privacy, and fairness. From fine-tuning models to handling large-scale datasets, our approach ensures AI is implemented thoughtfully and delivers measurable business outcomes.

FAQs

1. What is an AI agent for customer support?

An AI agent is a software program that can understand and respond to customer inquiries automatically. It can provide order updates, handle basic requests, and escalate complex issues to human agents.

2. How can AI agents help with order tracking?

AI agents connect to order management systems and shipping APIs to give customers instant updates on order status, delivery times, and shipment tracking without waiting for a human agent.

3. Can AI handle order cancellations, returns, or refunds?

Yes. AI agents can check eligibility, initiate returns, process cancellations, and provide guidance on refunds, all while following safety rules to avoid mistakes.

4. What channels can AI agents support?

AI agents can operate across multiple channels including website chat, WhatsApp, SMS, email, and voice calls. They maintain context even if the customer switches channels.

5. How do AI agents in e-commerce stay accurate and safe?

AI agents use guardrails such as content filtering, groundedness rules (never guessing), and privacy safeguards (masking sensitive data) to ensure responses are accurate and secure.

6. What metrics measure the success of AI agents?

Key metrics include automated containment rate, first-contact resolution, average handle time (AHT), customer satisfaction (CSAT), and escalation quality.

7. How do businesses deploy AI agents safely?

Deployment involves four stages:

- Foundation: Set up systems, APIs, and minimal safety rules.

- Build: Implement AI logic, tool integrations, and human handoff processes.

- Pilot: Test with real users on a limited scale and measure performance.

- Scale: Expand traffic and functions while monitoring safety and KPIs.

8. Do AI agents in e-commerce replace human customer support?

No. AI agents handle repetitive and predictable tasks, freeing human agents to focus on complex or sensitive cases. Humans still handle escalations and final approvals.

9. How do AI agents integrate with existing business systems?

They connect through APIs to order management systems, shipping platforms, and messaging tools to retrieve live order data and provide real-time responses.

10. What are the benefits of using AI agents for e-commerce businesses?

Benefits include faster customer response, reduced support workload, higher customer satisfaction, fewer errors, and the ability to handle multiple customer interactions simultaneously.

11. How can I monitor and improve AI agent performance?

Use observability tools to log conversations, track errors, measure metrics, and refine AI behavior continuously through pilot testing, dashboards, and feedback loops.

12. Why should I choose Arbisoft for AI agent deployment?

Arbisoft provides expertise in designing safe, scalable AI systems, integrating them with enterprise workflows, and ensuring measurable results while maintaining privacy and compliance standards.