We put excellence, value and quality above all - and it shows

A Technology Partnership That Goes Beyond Code

“Arbisoft has been my most trusted technology partner for now over 15 years. Arbisoft has very unique methods of recruiting and training, and the results demonstrate that. They have great teams, great positive attitudes and great communication.”

How To Use Deep Learning For Credit Scoring And Risk Prediction

Every credit decision affects lives. A student waiting for an education loan, a family preparing to buy their first home, or a small business owner planning to expand all depend on accurate and fair credit scoring. If risk is underestimated, lenders lose money. If risk is overestimated, borrowers are denied opportunities they deserve.

Lending has always involved balancing risk and opportunity. For many years, decisions were guided by statistical models. Today, the financial environment is more demanding. Borrowers create continuous streams of digital data, and their financial behavior is influenced by global trends, technology use, and changing habits. Traditional methods often cannot capture this complexity, which is why ai risk prediction fintech has become essential for modern lenders.

Deep learning provides a new approach to credit scoring and risk prediction. It can find complex patterns in large datasets, giving more accurate and fair predictions. Using deep learning responsibly is essential. This includes being transparent, monitoring models continuously, and making fairness a key part of the process.

In this blog, we explain how deep learning is changing credit scoring. We cover its history, the models that make it work, and best practices for building models responsibly. Let’s get started.

The Old Way of Scoring Credit

To understand deep learning’s role, we first need to look at traditional credit scoring. Early models used logistic regression, a type of statistical model. Logistic regression predicts the probability that a borrower will fail to repay a loan. It looks at a few key numbers, such as:

- Income

- Credit usage, which is the percentage of available credit being used

- Number of open accounts

- Past repayment history

Logistic regression is transparent. It clearly shows how each factor affects the final score. For example, if a borrower uses most of their available credit, the model shows that this increases the chance of default. Loan officers can see exactly which inputs matter.

This simplicity is useful but has limits. Logistic regression assumes relationships between variables are straight-forward and additive. Financial behavior is more complex. Two borrowers with the same income can act very differently. One may have steady work and manage debt carefully. Another may have irregular work and struggle with payments. Simple models cannot see these differences.

To improve predictions, lenders started using tree-based models like random forests and gradient boosting machines. These models handle nonlinear relationships, meaning the effect of one factor can depend on the value of another. They also handle interactions between variables, where multiple factors together influence risk. Tree-based models work well with structured data, like numbers in a table.

Over time, lenders began collecting richer data, such as:

- Detailed transaction histories from bank accounts and credit cards

- Free-text applications where borrowers describe their jobs or businesses

- Connections between borrowers, including co-signers and shared accounts

Traditional models cannot fully use these data types. As finance became more digital and interconnected, lenders needed methods that could analyze large and complex datasets. Deep learning became the solution.

The Rise of Deep Learning in Risk Prediction

Deep learning is a type of artificial intelligence that can learn patterns directly from raw data. It works best when data is large, sequential, relational, or unstructured, which is common in modern financial systems. Let’s discuss different data types.

Example: Transaction Data

Every purchase, card swipe, or online payment contributes to a borrower’s transaction history. These histories show patterns such as:

- Consistency of income deposits

- Spending habits

- Ability to manage debt responsibly

Deep learning models can detect subtle changes in these sequences. For example, if a borrower starts using credit lines more heavily or misses certain payments, it may indicate financial stress. Traditional models often reduce these histories to averages or totals, which can hide early warning signs.

Example: Textual Data

Loan applications and financial disclosures often contain unstructured text. These texts include explanations that numeric fields cannot show, for example:

- "My income decreased temporarily due to health reasons"

- "My small business had unexpected growth last quarter"

Deep learning models, especially natural language processing models, can turn this text into numerical features. This lets the model consider important borrower details when predicting risk.

Example: Relational and Network Data

Borrowers are connected through shared accounts, co-signers, or common employers. Defaults can affect people in the same network. Graph neural networks can map these connections and find groups of borrowers at similar risk. This uncovers risks traditional models cannot see.

Deep learning became important in this area because financial data became more complex. Lenders needed models that could process and understand this complexity.

This is where partners like Arbisoft make a difference, building deep learning pipelines that handle complexity while remaining explainable and fair.

How to Build a Deep Learning Credit Scoring System

Building and deploying credit scoring models responsibly requires a structured process. Teams can follow a clear sequence to reduce risks and improve reliability:

Step 1: Define the Problem Clearly

Ask: What exactly should the model predict?

- Default prediction: Will this borrower fail to pay within 12 months?

- Delinquency: Will this borrower be late on payments?

- Risk ranking: How does this borrower compare to others?

This matters because if your label (the outcome you want to predict) is unclear, the model will be unreliable.

Step 2: Build the Data Pipeline

Credit scoring is only as good as its data. A strong pipeline collects, cleans, and organizes data so it can feed a model.

Data sources:

- Structured data: salary, debt-to-income ratio, number of open accounts

- Transactional data: every credit card swipe, ATM withdrawal, or loan repayment

- Text data: loan applications, call center notes

- Relational data: co-signers, shared employers, guarantors

Pipeline steps:

- Ingest data from banks, credit bureaus, and internal systems.

- Clean errors like missing ages or negative loan amounts.

- Align timelines so you don’t use “future” data (e.g., only use data available at the loan approval date).

- Store securely in a warehouse with encryption and access control.

Think of the pipeline as the plumbing: if it leaks, the whole system fails.

The practical tip here is to use ETL frameworks like Apache Airflow or Apache Spark to automate ingestion and cleaning. Always apply encryption at rest and in transit.

Step 3: Turn Raw Data into Features

Deep learning models do not understand raw tables or text. You must represent data in ways the model can learn from.

- Normalization: Scale income or balances so big numbers do not drown out smaller ones.

- Sequences: Keep order in transactions. Missing two payments in a row is worse than two random misses.

- Embeddings: Convert words into numerical vectors. Example: “temporary job” and “freelance work” should appear close to each other.

- Graphs: Represent borrowers as connected nodes. If one person defaults, the model can see risk spreading through their network.

Example: Instead of just “12 late payments,” create a sequence like [on-time, late, on-time, late, late]. This shows patterns.

Step 4: Choose a Model Architecture

Pick the right tool for each data type:

- Structured/tabular: Deep tabular networks (e.g., TabNet).

- Sequences: Transformers or recurrent networks for transaction histories.

- Text: Pretrained language models (e.g., BERT).

- Graphs: Graph neural networks for borrower connections.

- Fusion models: Combine everything for a 360° view of risk.

Each model type specializes in one data form. Fusion models bring them together.

Step 5: Train the Model

Training teaches the model to recognize risk patterns.

- Split by time: Train on older applications, test on newer ones. Random splits leak future info.

- Class imbalance: Defaults are rare (<10%). Balance data with oversampling or weighted losses.

- Batch training: Train in small batches (e.g., 128 samples at a time) to stabilize learning.

- Loss function: Use binary cross-entropy for classification, adjusted for imbalance.

- Regularization: Use dropout and weight decay to stop overfitting.

- Calibration: Adjust outputs so a 0.7 default probability really means 70 out of 100 borrowers default.

Frameworks like TensorFlow/Keras and PyTorch Lightning provide utilities for handling imbalance, calibration, and dropout efficiently.

Step 6: Evaluate in Depth

Accuracy alone is not enough. Use multiple checks:

- ROC-AUC: Measures ranking quality.

- Precision/recall: Captures trade-off between catching defaulters vs approving good borrowers.

- Fairness tests: Compare approval rates and error rates across groups.

- Stress tests: Simulate a recession by increasing unemployment in your data to see if the model holds up.

Fairness metrics to use in practice: Equal opportunity, demographic parity, and adverse impact ratio. Libraries like AIF360 and Fairlearn can automate these checks.)

Step 7: Deploy in Production

Once tested, the model must work in real systems.

- API integration: Loan officers or apps send data, get back a risk score instantly.

- Thresholds: Scores above X are approved, below Y are denied, in-between go to human review.

- Fallback: Keep a baseline model (e.g., logistic regression) ready if the deep model fails.

- Audit logging: Record every decision for compliance.

Common tools: FastAPI or Flask for serving models, Docker/Kubernetes for scaling, MLflow for model versioning.

Step 8: Monitor and Retrain

Borrower behavior changes with the economy. A model that works today may drift tomorrow.

- Drift detection: Check if new applicants look statistically different from training data.

- Performance monitoring: Compare predicted defaults to actual defaults every month.

- Fairness auditing: Re-run fairness checks regularly.

- Retraining: Update with new data quarterly or yearly.

- Rollback: Keep old models ready to restore if the new one underperforms.

Continuous monitoring is critical for any AI risk prediction fintech system to maintain accuracy and fairness.

These practices keep the system accurate, stable, and accountable after launch, allowing you to use it confidently.

When Deep Learning Truly Adds Value

Deep learning may not always be needed. If a lender has only basic features, such as credit history, income, and account balances, traditional models like gradient boosting may work well. However, deep learning fintech credit scoring provides significant advantages when data is complex and varied.

- Transaction Histories: Recurrent neural networks or transformers can read sequences of transactions to detect patterns over time. This includes spotting income stability, spending changes, and shifts in debt repayment.

- Unstructured Text: Language models turn text from applications or call center notes into features, capturing important borrower information.

- Graph and Network Data: Graph neural networks analyze borrower connections to find shared risk factors. For example, borrowers sharing accounts or working at the same employer may have similar risks.

- Multimodal Data: Fusion models combine several data types, such as tabular data, transactions, text, and network information. This gives a complete picture of borrower risk.

Lenders should use deep learning where complexity requires it. The goal is not to replace all models, but to apply it where it adds most value.

These deep learning techniques can be extended to other enterprise deep learning applications, helping organizations turn complex data into actionable insights.

Modern Architectures That Work

Different types of data need different deep learning architectures. Financial institutions implementing deep learning fintech credit scoring solutions typically choose from these proven models:

- Tabular Models: For structured data like credit bureau information, models like TabNet and TabPFN are effective. TabNet uses attention to focus on important features while keeping some interpretability. TabPFN uses pretraining on millions of synthetic datasets, allowing it to adapt quickly.

- Sequence Models: For transaction histories, transformers and temporal convolutional networks work well. Transformers can detect patterns over long periods, and temporal convolutional networks pick up seasonal or repeating trends in income and spending.

- Graph Neural Networks: For relational data, these networks detect clusters where risk is shared among connected borrowers.

- Fusion Models: When multiple data types exist, fusion models combine tabular, sequential, textual, and relational inputs. These models are more complex but provide a full view of borrower risk.

Choosing the right architecture ensures models capture borrowers’ financial behavior accurately.

Explainability and Fairness Are Not Optional

Credit scoring models must be explainable and fair. Borrowers need to understand why a decision was made, and regulators require transparency.

- Explainability: Tools like SHAP values show how much each input influenced a decision. Surrogate models simplify complex networks for easier understanding. Counterfactual explanations show what small changes a borrower could make to improve their score.

- Fairness: Bias can occur even without explicit gender or race data because other variables act as proxies. Fairness testing looks at performance across subgroups and measures disparities. Common metrics include equal opportunity (ensuring similar true positive rates across groups), demographic parity (ensuring approval rates are not skewed), and adverse impact ratios (used in regulation to detect discrimination in lending). If bias is found, adjustments can be made during preprocessing, training, or calibration.

Embedding fairness and explainability protects borrowers and the institution’s reputation.

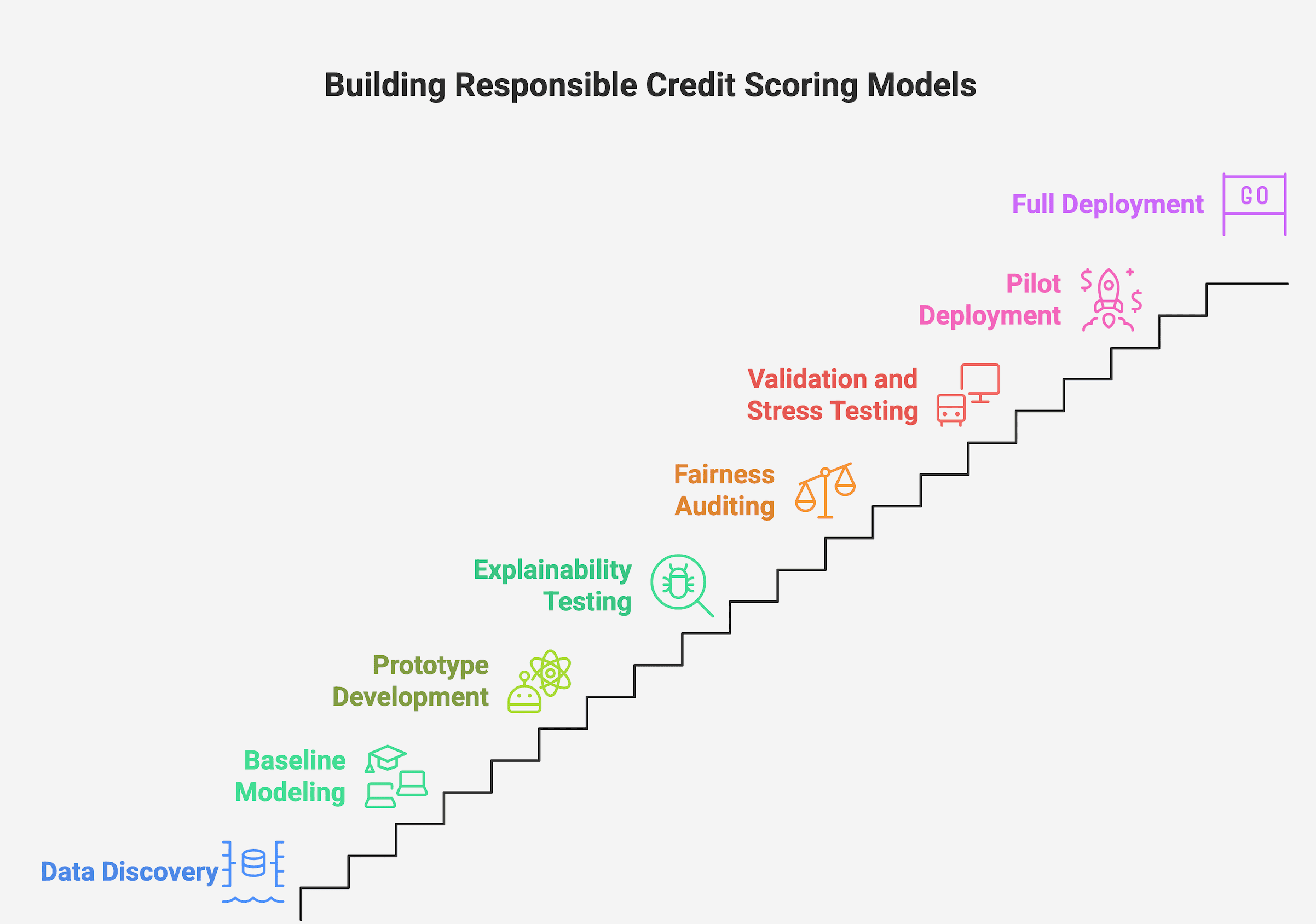

Quick Step-by-Step Roadmap for Teams

Building and deploying credit scoring models responsibly requires a structured process. Teams can follow a clear sequence to reduce risks and improve reliability:

- Data discovery: Gather all relevant datasets while ensuring privacy and compliance.

- Baseline modeling: Build simple models like logistic regression and gradient boosting to establish benchmarks.

- Prototype development: Experiment with deep learning models tailored to different data types.

- Explainability testing: Apply interpretability methods to confirm that model reasoning is understandable.

- Fairness auditing: Test how the model performs across borrower subgroups to detect and mitigate bias.

- Validation and stress testing: Use time-based splits and simulate economic downturns to test resilience.

- Pilot deployment: Release the model in a controlled environment with close monitoring.

- Full deployment with monitoring: Scale the system only after confirming fairness, stability, and accuracy.

Following this roadmap helps teams balance innovation with responsibility, avoiding shortcuts that could harm borrowers or institutions.

What Success Really Looks Like

A successful credit scoring model is not judged only by technical accuracy. True success is measured by the real-world impact it creates:

- Approving more borrowers without increasing defaults, helping more people access credit safely.

- Allocating credit fairly, minimizing hidden biases and ensuring equal opportunities.

- Making decisions transparent and compliant, so borrowers and regulators can understand the reasoning.

- Adapting to change, staying reliable even as economies and borrower behavior evolve.

When models achieve these outcomes, lenders build trust while borrowers gain fair access to opportunity. That balance of innovation, fairness, and accountability is what success in deep learning for credit scoring really means.

Building Models That Protect People and Institutions

Deep learning can transform credit scoring and risk prediction. It analyzes sequences, text, and networks that traditional methods cannot. It can detect early warning signs and improve lending accuracy.

The potential of deep learning is realized only when models are built responsibly. Training must be thorough, monitoring must be ongoing, and fairness must be included at every step. Human oversight must remain part of the process.

Credit is more than a number. It is the foundation for families, businesses, and communities. Responsible use of deep learning allows lenders to protect themselves while giving borrowers the opportunities they deserve. The future of deep learning fintech credit scoring will be shaped by institutions that prioritize innovation alongside accountability and fairness.

P.S. You can also explore how deep learning is making a difference in medical imaging and helping doctors deliver faster, more accurate diagnoses.

FAQs

1. What is credit scoring?

Credit scoring is a system lenders use to evaluate a borrower’s likelihood of repaying a loan. Scores are calculated based on financial history, income, and other relevant data.

2. How does deep learning improve credit scoring?

Deep learning can analyze complex and large datasets, including transaction histories, text data, and borrower networks, detecting patterns that traditional models may miss. This improves prediction accuracy and fairness.

3. What types of data do deep learning models use for credit risk?

- Structured/tabular data: Income, credit usage, number of accounts

- Transaction histories: Spending patterns and debt repayment behavior

- Textual data: Loan applications, call center notes

- Relational/network data: Connections between borrowers through co-signers or shared accounts

4. Are traditional models still useful?

Yes. Logistic regression and tree-based models like random forests or gradient boosting are still effective for simpler datasets. Deep learning is most valuable when data is complex and multi-dimensional.

5. What deep learning models are commonly used in credit scoring?

- Tabular models: TabNet, TabPFN

- Sequence models: Transformers, temporal convolutional networks

- Graph neural networks: For borrower networks and relational data

- Fusion models: Combine multiple data types for a complete risk view

6. How do lenders ensure fairness in deep learning models?

Fairness is checked using metrics like:

- Equal opportunity: True positive rates are similar across groups

- Demographic parity: Approval rates are not biased

- Adverse impact ratios: Identify discrimination patterns

Adjustments are made during data preprocessing, training, or model calibration.

7. How do lenders explain deep learning decisions?

Explainability tools like SHAP values, surrogate models, and counterfactual explanations show which factors influenced a score and how small changes could improve it.

8. What best practices ensure reliable deep learning models?

- Handle class imbalance in defaults

- Use temporal validation

- Apply regularization and calibration

- Monitor model drift over time

- Conduct independent validation and human oversight

9. When should deep learning be used over traditional models?

Use deep learning when datasets are large, complex, and multi-modal (combining text, transactions, and network data). For simple datasets, traditional models often perform well.

10. What does success look like for a deep learning credit model?

- Approving more borrowers safely

- Allocating credit fairly

- Transparent and compliant decisions

- Models adapt to economic or behavioral changes