Future-Proofing Fraud Detection: Governance, Ethics, and the Next Generation of AI Agents

Last year, a CEO transferred $25 million after hearing what he thought was his boss’s voice on the phone. It wasn’t. It was an AI deepfake.

Fraud no longer looks like suspicious emails or sketchy logins. It looks and sounds like the people you trust most. And while money now moves at the speed of light through instant transfers and global payments, fraudsters are moving even faster.

The only way to keep up? AI agents that think, act, and fight back in real time.

If you want to understand the foundation of these systems, see our blog on How Do AI Agents Work? A Technical Breakdown of Their Architecture.

Governance and Ethical Guidelines

An overwhelming 89% of banks now prioritize explainability and transparency as non-negotiable features in their AI systems. This isn't just about ethics; it's about trust, compliance, and brand reputation.

Implementing a clear framework, like Feedzai's TRUST Framework, provides a practical, five-pillar roadmap to ensure your AI is built responsibly from the ground up.

For practical advice on building task-specific AI agents that are safe and scalable, check our post on Best Practices for Scalable, Safe, and Reliable Development.

- Transparent: Decisions must be explainable to everyone, from regulators to customers.

- Robust: Systems must perform reliably under changing, real-world conditions.

- Unbiased: Proactive measures must be in place to identify and mitigate discriminatory outcomes.

- Secure: Data integrity and user privacy must be protected at all times.

- Tested: Continuous validation is needed to ensure performance, safety, and efficiency.

Putting Governance into Practice

This means moving from theory to action by establishing concrete operational rules.

- Define exactly what each AI agent can and cannot do autonomously. For instance, an agent can investigate an alert, but only a human can approve the filing of a Suspicious Activity Report (SAR).

- Create seamless escalation paths. When an AI agent encounters a high-complexity case or hits a confidence threshold, it must know exactly how to flag it for human review.

- Use secure systems that act as a single source of truth for both AI agents and human investigators. This way, everyone works with the same validated data and policies.

- Integrate tools for real-time monitoring, bias detection (like IBM’s AI Fairness 360 or Google's What-If Tool), and automated auditing to continuously check for drift, bias, or security threats.
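To make the first two rules concrete, here is a minimal sketch of an escalation policy. The names (`AgentDecision`, `route`, `HUMAN_ONLY_ACTIONS`) and the 0.90 confidence floor are illustrative assumptions, not part of any specific product; the point is simply that autonomy boundaries are checked before confidence.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_RESOLVE = "auto_resolve"
    HUMAN_REVIEW = "human_review"


# Hypothetical policy values; tune these to your own risk appetite.
CONFIDENCE_FLOOR = 0.90
HUMAN_ONLY_ACTIONS = {"file_sar"}  # e.g. filing a SAR always needs a person


@dataclass
class AgentDecision:
    alert_id: str
    proposed_action: str  # e.g. "close_alert" or "file_sar"
    confidence: float     # agent's self-reported confidence, 0.0 to 1.0


def route(decision: AgentDecision) -> Route:
    """Apply the autonomy boundary first, then the confidence threshold."""
    if decision.proposed_action in HUMAN_ONLY_ACTIONS:
        return Route.HUMAN_REVIEW
    if decision.confidence < CONFIDENCE_FLOOR:
        return Route.HUMAN_REVIEW
    return Route.AUTO_RESOLVE
```

With this ordering, even a highly confident agent cannot file a SAR on its own: `route(AgentDecision("a1", "file_sar", 0.99))` still goes to human review.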

So how are defenses keeping pace with threats like deepfakes? Let’s dig in!

The Trends to Look Out For

Financial fraud is accelerating, and 2025 is the year defense pulls ahead. The old, reactive models are obsolete. Today's winning strategies are built on AI that predicts, adapts, and acts in real time, creating a seamless shield that protects customers without interrupting their experience.

Here are the key innovations leading the charge.

Real-Time Monitoring and Behavioral Analytics

Money now moves in real-time, and so must your defenses. The rise of instant payment systems like FedNow and Zelle has made real-time monitoring not just an advantage but a necessity.

Why are traditional fraud detection methods failing, and why is real-time monitoring a game-changer?

Modern AI systems can analyze a transaction and assess its risk in milliseconds. That is faster than a customer can click "confirm purchase." This allows institutions to block fraudulent transactions before they are even finalized, preventing the financial loss before it happens.

But the real magic isn't just speed; it's context. The latest innovation goes beyond asking "Is this transaction valid?" to asking "Is this behavior normal for this user?".

This is where Behavioral Analytics excels by creating a unique "digital fingerprint" of how each user typically behaves. The algorithm learns their typing rhythm, typical login times, and common transaction amounts. Subtle deviations from that baseline scream "account takeover!".
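As a rough illustration of the idea, the sketch below scores a new transaction amount by how far it sits from a user's own history. It is deliberately simplistic: a single feature, a plain z-score, and an assumed cutoff of 3.0. Production systems combine many more signals, and the function and variable names here are hypothetical.

```python
from statistics import mean, stdev


def deviation_score(history: list[float], new_value: float) -> float:
    """Return how many standard deviations new_value sits from the user's norm."""
    if len(history) < 2:
        return 0.0  # not enough history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return 0.0 if new_value == mu else float("inf")
    return abs(new_value - mu) / sigma


# Hypothetical past transaction amounts for one user
past_amounts = [42.0, 38.5, 51.0, 45.0, 40.0]
Z_THRESHOLD = 3.0  # assumed cutoff, not an industry standard

# A sudden 2,500 payment is far outside this user's usual range
is_unusual = deviation_score(past_amounts, 2500.0) > Z_THRESHOLD
```

The same pattern extends to login hours or typing cadence: build a per-user baseline, then measure distance from it rather than from a global rule.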

Network Analysis and Graph Technology

So, what is graph analytics in fraud detection?

Simply put, the traditional fraud detection approach looks at transactions one by one. Graph analytics works differently: it maps the hidden connections between users, devices, phone numbers, and merchants to reveal the complex web of a fraud ring that would otherwise stay invisible.

For instance, ten people might look unrelated. But graph analytics acts like a master investigator, uncovering that they all used the same device to log in. This is a huge red flag for a money mule operation, where criminals use multiple accounts to move stolen money.

This is especially crucial for fighting synthetic identity fraud, which has jumped 30%. These are fake identities built from stolen and made-up data. They're nearly impossible to spot alone, but graph technology exposes their secret links to other known fraudulent accounts, unraveling the entire scheme.
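The shared-device example can be sketched in a few lines. The snippet below is an assumption-laden toy, not a graph database: it treats accounts and devices as nodes, each login as an edge, and uses a union-find structure to group accounts that are connected through any chain of shared devices. The function name and the `min_size` cutoff are hypothetical.

```python
from collections import defaultdict


def find_linked_accounts(
    logins: list[tuple[str, str]], min_size: int = 3
) -> list[set[str]]:
    """Group accounts connected through shared devices.

    logins is a list of (account_id, device_id) pairs.
    """
    parent: dict[str, str] = {}

    def find(x: str) -> str:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        parent[find(a)] = find(b)

    # Accounts and devices are nodes in one graph; a login is an edge.
    for account, device in logins:
        union(f"acct:{account}", f"dev:{device}")

    clusters: dict[str, set[str]] = defaultdict(set)
    for account, _ in logins:
        clusters[find(f"acct:{account}")].add(account)

    # Only clusters of min_size or more accounts are worth a closer look.
    return [c for c in clusters.values() if len(c) >= min_size]
```

Note that accounts do not need to share the same device directly: if A and B share one device and B and C share another, all three land in one cluster.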

Privacy-Enhancing Technologies (PETs)

Here's the modern dilemma: How do you analyze vast amounts of customer data to stop fraud without violating their privacy or breaking strict regulations like GDPR?

The answer lies in Privacy-Enhancing Technologies (PETs). These are a suite of cryptographic and distributed-learning techniques that allow you to analyze data without ever actually seeing the raw, sensitive information.

Data privacy is one of the biggest challenges in AI. If you’re exploring this further, our blog on How LLM-Powered AI is Revolutionizing Software Quality Assurance explains how secure AI testing practices ensure systems remain both effective and compliant.

The most exciting PETs for fraud include:

- Homomorphic Encryption: Run calculations on data while it's still encrypted. The bank can analyze your transaction patterns without ever decrypting your personal details.

- Zero-Knowledge Proofs (ZKPs): Prove a statement is true without revealing the data behind it. For example, a user can prove they are over 18 or that their account is in good standing without revealing their exact age or account balance.

- Federated Learning: Train AI models across multiple institutions without any of them sharing their raw customer data. Only the lessons learned (model updates) are shared, not the data itself.
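Of the three, federated learning is the easiest to sketch in plain code. The example below is a toy version of federated averaging under stated assumptions: a linear model, one local pass per round, and two made-up "banks". The names `local_update` and `federated_average` are illustrative, and a real deployment would add secure aggregation and much more.

```python
def local_update(
    weights: list[float], data: list[tuple[list[float], float]], lr: float = 0.1
) -> list[float]:
    """One pass of gradient descent for a linear model on one institution's data."""
    w = list(weights)
    for x, y in data:
        pred = sum(wi * xi for wi, xi in zip(w, x))
        err = pred - y
        w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


def federated_average(updates: list[list[float]], sizes: list[int]) -> list[float]:
    """Combine local models, weighting each by its number of local examples."""
    total = sum(sizes)
    dim = len(updates[0])
    return [
        sum(u[i] * n for u, n in zip(updates, sizes)) / total for i in range(dim)
    ]


# Hypothetical local datasets: (features, label) pairs that never leave each bank
bank_a = [([1.0, 0.0], 1.0), ([0.0, 1.0], 0.0)]
bank_b = [([1.0, 1.0], 1.0)]

global_w = [0.0, 0.0]
updates = [local_update(global_w, data) for data in (bank_a, bank_b)]
global_w = federated_average(updates, [len(bank_a), len(bank_b)])
```

Only the weight vectors in `updates` cross institutional boundaries; the raw records in `bank_a` and `bank_b` stay where they are.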

Best Practices for Implementation and Operation

Building a powerful AI system is one thing; running it responsibly and effectively is another. This is where the real work begins. The following best practices will ensure your AI fraud detection is not only intelligent but also trustworthy, resilient, and constantly evolving.

Ethical AI Governance and Transparency

You can't deploy AI and just hope for the best. With great power comes great responsibility, and 89% of banks now prioritize explainability and transparency as non-negotiable requirements in their AI systems. This is about risk management, compliance, and maintaining your customers' hard-earned trust.

This is where the next question comes in: how do you prevent AI bias in fraud detection systems?

Your governance practices should include:

- Every AI decision must be explainable. If your AI denies a transaction or flags an account, your team must be able to understand why and explain it in simple terms to a customer or regulator.

- Proactively and continuously test your AI models for unfair biases across different customer demographics (e.g., age, race, location). This is critical as AI bias lawsuits are on the rise, with courts ruling that companies are liable for discriminatory outcomes from their AI systems.

- Be open with regulators about your AI methodologies. Building a collaborative relationship is far better than waiting for a punitive audit.

- Always provide a clear and easy path for a human to override an AI decision. This is a crucial safety valve.
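A bias audit can start with something as simple as comparing flag rates across groups. The sketch below is a minimal starting point, not a full fairness toolkit: the function names are hypothetical, the group labels are placeholders, and what ratio counts as acceptable is a policy decision for your compliance team.

```python
from collections import Counter


def flag_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """records holds (group_label, was_flagged) pairs; returns flag rate per group."""
    totals = Counter()
    flagged = Counter()
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {g: flagged[g] / totals[g] for g in totals}


def disparity_ratio(rates: dict[str, float]) -> float:
    """Lowest group flag rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


# Hypothetical audit sample: group "B" is flagged twice as often as group "A"
sample = [("A", True), ("A", False), ("A", False), ("A", False),
          ("B", True), ("B", True), ("B", False), ("B", False)]
rates = flag_rates(sample)
ratio = disparity_ratio(rates)
```

A low ratio does not prove discrimination on its own, since fraud rates can genuinely differ, but it tells your team where to look first.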

The Human-AI Collaboration

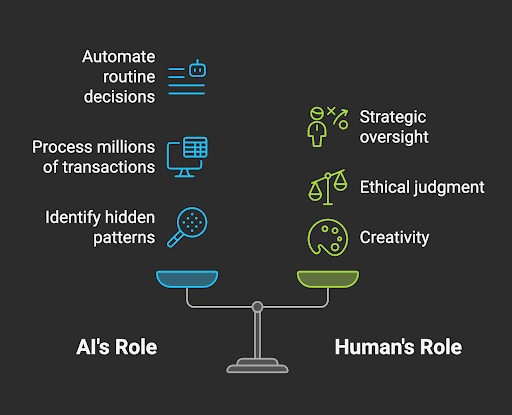

Can AI completely replace human fraud investigators? No. The goal of AI is not to replace humans, but to augment them. The most successful implementations create a powerful partnership where humans and AI each do what they do best.

In practice, this means your human experts should focus on the following:

- Tackling the high-complexity, high-risk cases that AI can't confidently resolve (typically 15-20% of all alerts).

- Continuously monitoring AI agent performance, providing feedback, and "coaching" the models to improve.

- Developing new fraud-fighting strategies and refining existing AI approaches, which means freeing your best analysts from mundane tasks.

- Regularly sampling and validating the AI's work through manual reviews to ensure quality and accuracy.
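The manual-review step is easy to automate at the selection stage. Here is a minimal sketch, assuming a 5% sampling rate and a hypothetical `sample_for_review` helper; the right rate depends on your alert volume and reviewer capacity.

```python
import random
from typing import Optional


def sample_for_review(
    alert_ids: list[str], rate: float = 0.05, seed: Optional[int] = None
) -> list[str]:
    """Pick a random subset of auto-resolved alerts for manual quality review."""
    if not alert_ids:
        return []
    rng = random.Random(seed)  # pass a seed to make the audit sample reproducible
    k = max(1, round(len(alert_ids) * rate))
    return rng.sample(alert_ids, k)


# Hypothetical batch of alerts the AI closed on its own today
closed_today = [f"alert-{i}" for i in range(200)]
to_review = sample_for_review(closed_today, rate=0.05, seed=7)
```

Random selection matters here: if reviewers only check the cases the AI was unsure about, errors in its confident decisions go unnoticed.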

Continuous Improvement and Adaptation

A static AI model is a vulnerable one. Your system must be built to learn and adapt constantly.

- Implement closed-loop systems where investigator feedback on false positives and confirmed fraud is automatically fed back into the AI models. This allows them to learn from mistakes and successes in real time, a process known as continual learning. This approach reduces false positives over time while enhancing detection accuracy.

- Don't wait for a real fraudster to find your weaknesses. Regularly conduct simulated attacks and penetration testing to proactively identify and patch vulnerabilities in your defenses. Many organizations engage external "white hat" security experts for independent assessments, as they often find blind spots that internal teams miss.

- The threat is evolving. Deepfake technology is advancing rapidly, and generative AI is being used to create sophisticated phishing campaigns and synthetic identities. Your team and your systems must be prepared for these new tactics.
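To show what a closed feedback loop looks like in its simplest form, here is a toy online learner. It is a sketch under assumptions: a tiny logistic-regression scorer, one gradient step per investigator verdict, and hypothetical names throughout. Real pipelines would validate, batch, and gate such updates before they touch production models.

```python
import math


class OnlineFraudScorer:
    """Tiny logistic-regression scorer updated one labeled alert at a time."""

    def __init__(self, n_features: int, lr: float = 0.1):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def score(self, x: list[float]) -> float:
        """Estimated fraud probability for feature vector x."""
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        return 1.0 / (1.0 + math.exp(-z))

    def feedback(self, x: list[float], confirmed_fraud: bool) -> None:
        """Nudge the weights toward the investigator's verdict (one SGD step)."""
        error = self.score(x) - (1.0 if confirmed_fraud else 0.0)
        self.w = [wi - self.lr * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * error


scorer = OnlineFraudScorer(n_features=2)
pattern = [1.0, 0.5]  # hypothetical features of a recurring false positive
before = scorer.score(pattern)
for _ in range(20):
    scorer.feedback(pattern, confirmed_fraud=False)
after = scorer.score(pattern)
```

After repeated "not fraud" verdicts on the same pattern, `after` is lower than `before`, which is the false-positive reduction the feedback loop is meant to deliver.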

Key Takeaways for Implementation:

| Best Practice | Core Principle | Key Action |
| --- | --- | --- |
| Ethical Governance | Trust is mandatory, not optional. | Implement a framework like TRUST, conduct bias audits, and ensure full explainability. |
| Human-AI Collaboration | Augment, don't replace. | Design roles where humans handle exceptions, strategy, and coaching for AI agents. |
| Continuous Learning | A static system is a vulnerable system. | Integrate feedback loops, run attack simulations, and constantly update against new threats. |

The Future Outlook

The AI in fraud management market is projected to grow from $14.72 billion in 2025 to $65.35 billion by 2034, representing a compound annual growth rate of 18.06%. This growth will be driven by several key developments:

- Advanced behavioral biometrics that analyze subtle patterns in user interaction

- Cross-institutional collaboration through federated learning models that improve detection without sharing sensitive data

- Quantum computing applications for detecting previously invisible patterns

- Self-healing systems that automatically adapt to new fraud techniques

As AI agents in Fintech become more sophisticated, we can expect them to take on increasingly complex responsibilities in financial crime prevention while maintaining the necessary human oversight to ensure ethical and compliant operations.

In the End

We are at a definitive inflection point in financial security. Generative AI-powered threats increased fourfold in 2024, ranging from deepfakes to AI-generated phishing emails that now comprise 82% of attacks. Their convergence with aggressive new regulations holding financial institutions accountable has created a burning platform for action. The time for incremental upgrades is over. The projected growth of the AI fraud detection and prevention market to over $246 billion by 2032 signals an industry investing heavily in its own survival and the protection of its customers.

Financial institutions that are successfully deploying agentic AI aren't just buying a new tool; they are building their most resilient and scalable line of defense. They are future-proofing their operations, protecting their customers, and preserving the trust that is the foundation of the entire financial system.

The transition won't always be easy, but the cost of inaction is far greater. The question is no longer if you should implement AI agents, but how fast you can do it responsibly.

Start with a pilot, assemble your cross-functional team, and build your digital workforce. AI agents are no longer a luxury; they are the shield and the sentinel for the current times.