How Transformers Redefined Natural Language Processing

Training an AI to understand language used to feel like teaching a toddler every single word, grammar rule, and nuance from scratch. Possible? Sure. Efficient? Not even close.

Then Transformers arrived, and everything changed.

Instead of forcing AI to read in a strict sequence like a typewriter, Transformers taught models to pay attention to what actually matters in a sentence, all at once. Suddenly, machines could grasp meaning faster, handle longer contexts, and scale in ways we had only imagined in science fiction.

No more crawling. NLP started sprinting.

Ready to see how this architecture turned AI into a language powerhouse and why 2025 is its most exciting year yet? Let us unpack it.

1. Introduction: The Dawn of the Transformer Era

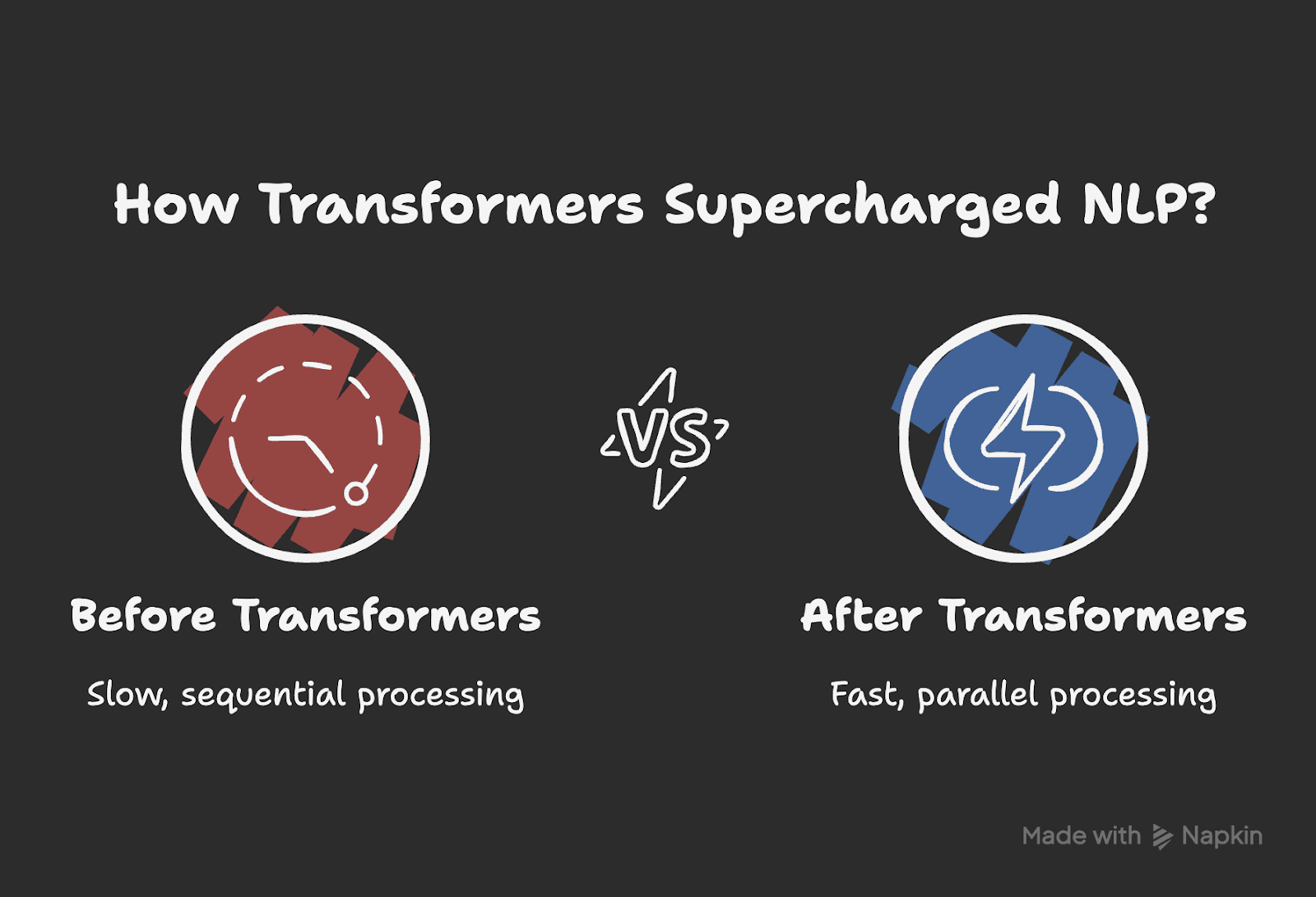

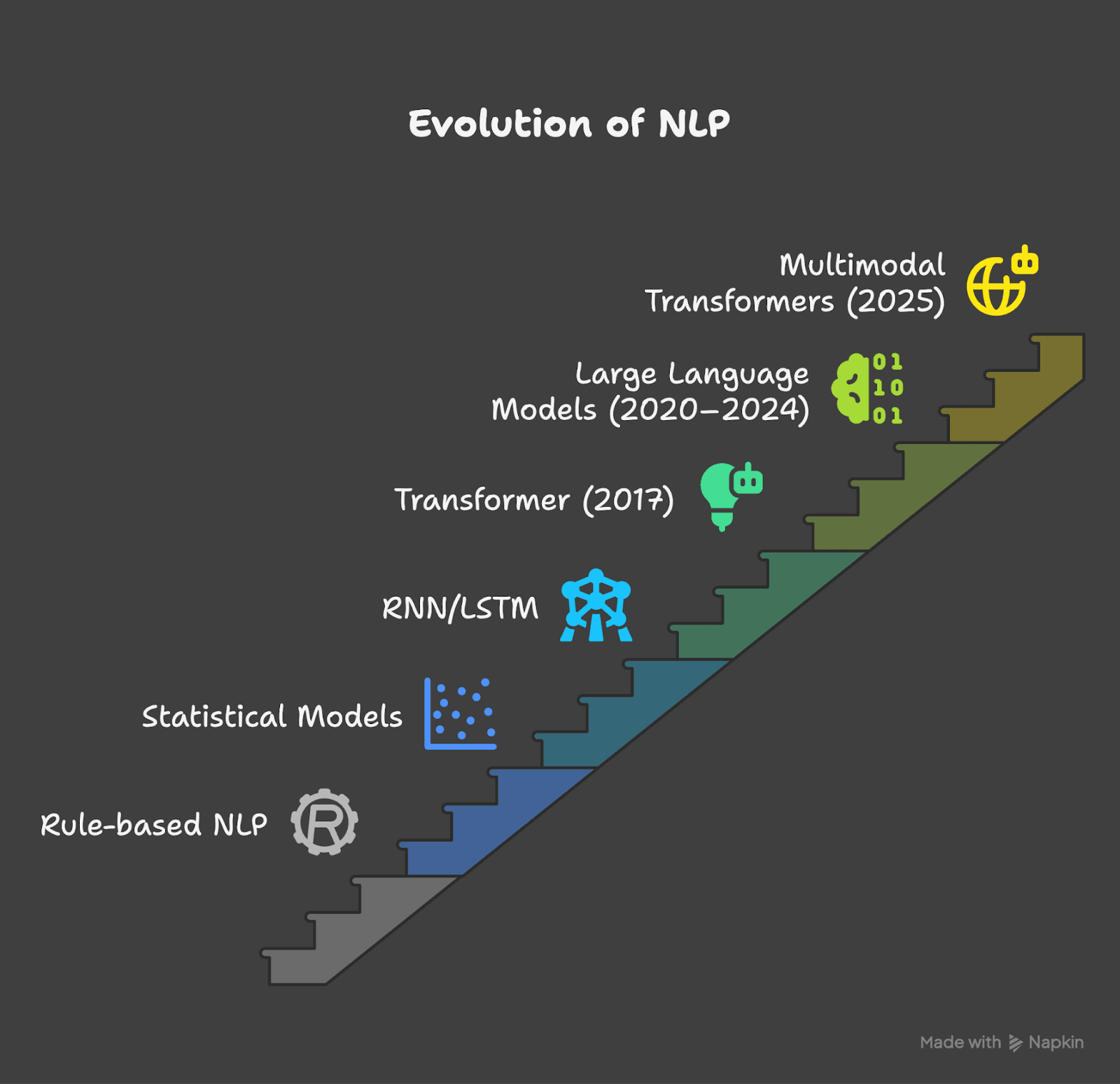

Just over a decade ago, teaching machines to understand language was slow, clunky, and full of compromises. Early systems were built on rules that had to be painstakingly crafted by humans. Then came statistical models, which could crunch numbers to find patterns but still struggled with nuance. Recurrent neural networks (RNNs) and long short-term memory networks (LSTMs) marked real progress, giving AI a way to remember context across sentences. Even so, they still read text one step at a time, which limited how much they could handle and how fast they could process it.

Everything changed in 2017 with the release of the paper "Attention Is All You Need." The Transformer architecture it introduced lets a model focus on the most important parts of a sentence, no matter where those words appear. Instead of reading text in a strict order, Transformers process every position at once, which makes them dramatically faster to train and far more capable of understanding long passages.

In the years since, Transformers have powered a wave of large language models that write, translate, summarize, and answer questions with uncanny fluency. By 2025, they are no longer just tools for processing text. Scaling up model size, data, and compute, guided by empirical scaling laws, has pushed their performance to new heights. Multimodal models can handle images, audio, and video in the same conversation, and on-device Transformers are running on smartphones and wearables. What began as a clever architecture has become the backbone of modern AI.

2. How Do Transformers Work?

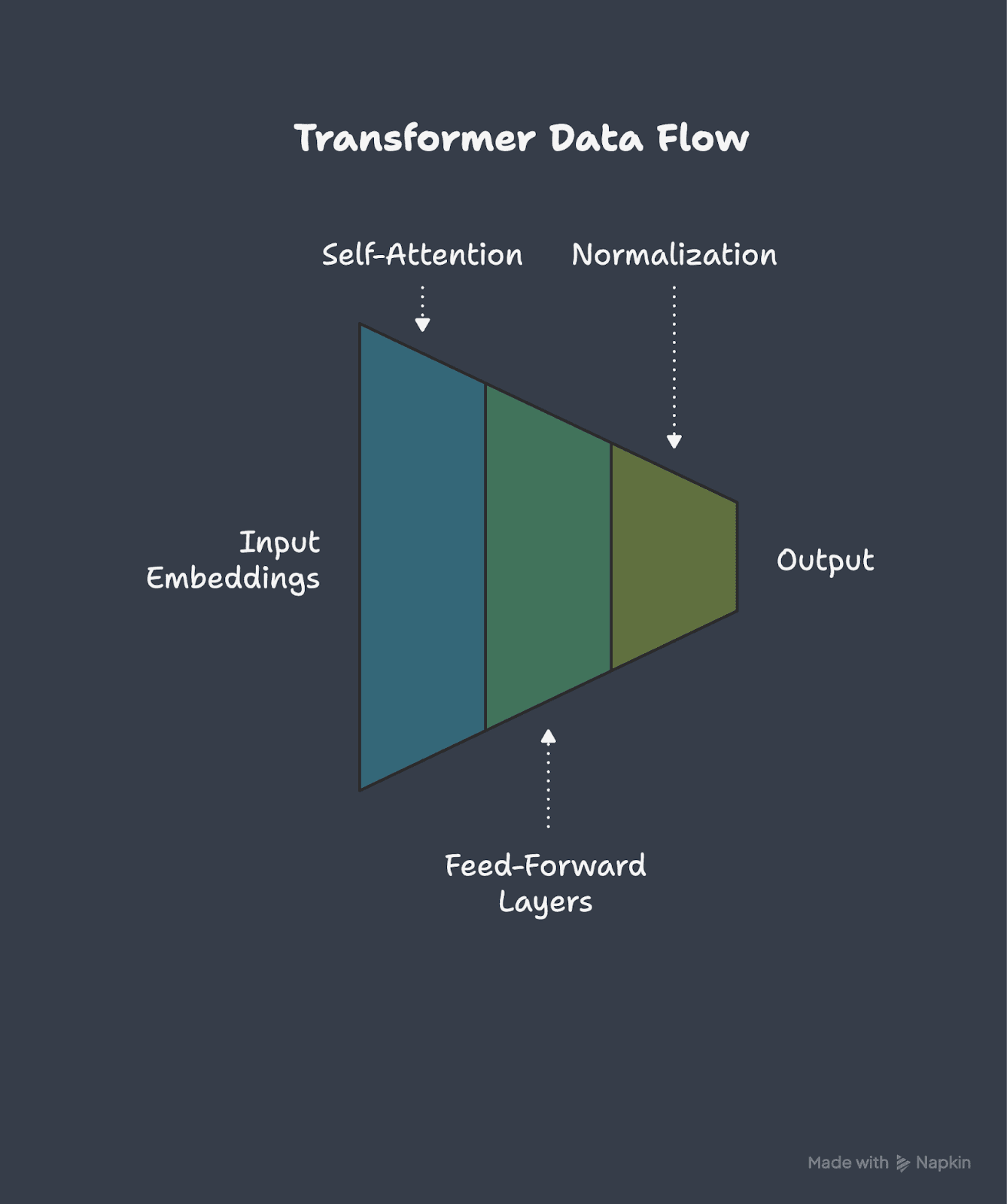

Imagine reading a story. You remember the important parts and use them to understand what happens next. Transformers do something similar through self-attention. This allows the model to look at all the words in a sentence and figure out which ones matter most for understanding the meaning.
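To make the idea of self-attention concrete, here is a minimal sketch in plain Python. It assumes toy two-dimensional word vectors invented for illustration, and it lets each vector act as its own query, key, and value. Real models learn separate projections for each role and use many attention heads, so treat this as a simplified picture rather than a production implementation.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Assumption: each vector is used directly as query, key, and value.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # Score this word against every word in the sentence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in vectors]
        weights = softmax(scores)
        # Blend all word vectors according to the attention weights.
        mixed = [sum(w * v[j] for w, v in zip(weights, vectors))
                 for j in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical toy embeddings: "cat" and "mouse" point in similar directions.
words = ["cat", "chases", "mouse"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]

outputs, weights = self_attention(embeddings)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how much one word attends to every word in the sentence. With these toy vectors, "cat" gives more weight to "mouse" than to "chases" because their vectors are more alike.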

Older models like RNNs read one word at a time, which was slow. Transformers process all words in parallel, like a group of people reading different sections of a chapter at the same time and then sharing notes instantly. This parallel approach makes them much faster and better at handling large amounts of text.
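The difference between sequential and parallel processing comes down to data dependencies. The sketch below is a toy illustration with scalar inputs and made-up weights (`w`, `u`), not a real RNN or Transformer layer. The recurrent loop needs the previous hidden state before it can take the next step, while each attention output depends only on the raw inputs, so the positions can be computed in any order or all at once.

```python
import math

def rnn_states(inputs, w=0.5, u=1.0):
    """Sequential: every hidden state depends on the one before it."""
    h, states = 0.0, []
    for x in inputs:
        h = math.tanh(w * h + u * x)  # cannot start until the previous h exists
        states.append(h)
    return states

def attend(position, inputs):
    """Attention output for one position, computed from the inputs alone."""
    scores = [inputs[position] * x for x in inputs]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return sum(e / total * x for e, x in zip(exps, inputs))

inputs = [0.2, -1.0, 0.7, 1.5]

# The recurrent model has to walk the sequence left to right.
hidden = rnn_states(inputs)

# Attention positions are independent of each other: any order gives the
# same answers, which is what lets hardware compute them in parallel.
in_order = [attend(i, inputs) for i in range(len(inputs))]
out_of_order = {i: attend(i, inputs) for i in [2, 0, 3, 1]}
print(all(out_of_order[i] == in_order[i] for i in range(len(inputs))))
```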

Order still matters in language. Transformers use positional encoding to know where each word belongs. This is like giving every word a small tag with its position so the model knows the difference between “cat chases mouse” and “mouse chases cat.”
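One way to build those position tags is the sinusoidal scheme from the original Transformer paper, sketched below. The four-dimensional word vectors are hypothetical, chosen only to keep the example small, and many newer models use learned or rotary position embeddings instead.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal position tag: sine on even dimensions, cosine on odd ones."""
    enc = []
    for i in range(0, d_model, 2):
        angle = position / (10000 ** (i / d_model))
        enc.append(math.sin(angle))
        enc.append(math.cos(angle))
    return enc[:d_model]

# Hypothetical toy embeddings; a real model learns these.
embedding = {
    "cat":    [1.0, 0.0, 0.0, 0.0],
    "chases": [0.0, 1.0, 0.0, 0.0],
    "mouse":  [0.0, 0.0, 1.0, 0.0],
}

def encode(sentence):
    """Add each word's position tag to its embedding."""
    return [
        [e + p for e, p in zip(embedding[word], positional_encoding(pos, 4))]
        for pos, word in enumerate(sentence.split())
    ]

a = encode("cat chases mouse")
b = encode("mouse chases cat")
print(a != b)        # same words, different order, different model input
print(a[1] == b[1])  # "chases" sits at position 1 in both sentences
```

Without the position tags, both sentences would hand the model the same set of word vectors. With them, "cat" at position 0 and "cat" at position 2 look different to the model.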

There are two main setups. Encoder-decoder models read the input with the encoder and produce the output with the decoder, which works well for translation. Decoder-only models predict the next word (more precisely, the next token) from everything they have seen so far, which is ideal for chat and content creation.
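Decoder-only models are typically kept from peeking at future words by a causal mask on the attention scores. The sketch below shows that idea in plain Python with made-up uniform scores. The function name `causal_attention_weights` is illustrative and does not come from any particular library.

```python
import math

def causal_attention_weights(scores):
    """Softmax over each row, using only the current and earlier positions.

    `scores` is a square matrix of raw attention scores. Future positions
    receive a weight of exactly zero.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        visible = scores[i][: i + 1]  # positions 0..i only
        m = max(visible)
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        weights.append([e / total for e in exps] + [0.0] * (n - i - 1))
    return weights

# Assumed uniform scores for a three-word sequence.
scores = [[0.0] * 3 for _ in range(3)]
weights = causal_attention_weights(scores)
for row in weights:
    print([round(w, 2) for w in row])
```

The first word can only attend to itself, the second splits its attention across the first two words, and so on. An encoder has no such mask, so every word can attend to the whole input.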

Because Transformers can process words in parallel, remember long contexts, and adapt to many tasks, they scale better than older architectures. This is why they have become the backbone of modern NLP systems.

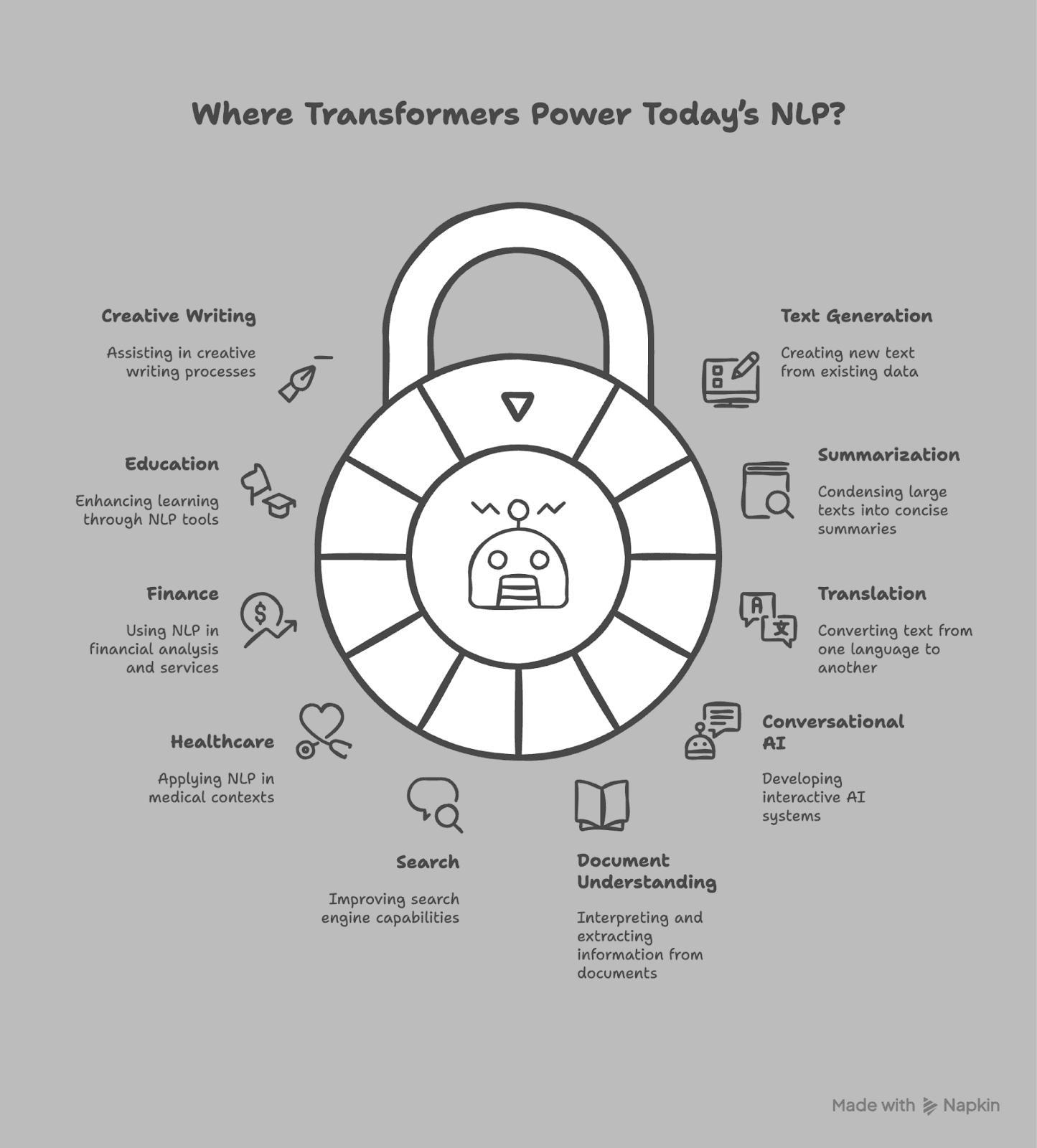

3. Breakthrough Applications in NLP

Transformers have moved NLP far beyond basic text processing. Today, they power applications that feel natural, fast, and often surprisingly human-like.

One of the most visible breakthroughs is text generation and summarization. Tools built on models like GPT-4 and GPT-5 can draft articles, write stories, or condense long documents into clear and concise summaries within seconds.

Machine translation has also advanced significantly. Language pairs that once yielded awkward, word-for-word translations now benefit from context-aware output that captures tone and meaning more accurately.

In conversational AI, Transformers drive chatbots and virtual assistants that can remember context, handle follow-up questions, and respond in a natural flow. This makes them valuable in customer service, technical support, and personal productivity tools.

There is also progress in real-time document understanding, where models can read contracts, reports, or forms and instantly extract key information. Search engines have improved as well by using transformer-based ranking models to return results that better match user intent rather than just keywords.

These capabilities are no longer limited to large tech companies. Industries including healthcare, finance, and education are using transformer-powered tools to work faster, reduce errors, and make complex information easier to understand.

4. Transformers in the Era of Personal AI

In 2025, Transformers are no longer confined to large data centers. Many are now compact and efficient enough to run on smartphones, tablets, and even smart glasses. This edge deployment allows AI to respond instantly without sending every request to the cloud.

Local processing also boosts privacy. With federated learning, each device trains on its owner's personal data locally and shares only model updates, so the raw data never leaves the device.
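A minimal sketch of how this can work is federated averaging: each device refines the shared model on its own data, and a server combines the resulting weights without ever seeing the data itself. The example below assumes a one-parameter model and invented per-device data. Real systems train full neural networks and usually add protections such as secure aggregation.

```python
def local_update(weight, private_data, lr=0.1, steps=20):
    """Runs on the device: gradient descent on squared error to local data.

    Only the updated weight and the example count are returned;
    the private data itself is never sent anywhere.
    """
    for _ in range(steps):
        grad = sum(2 * (weight - x) for x in private_data) / len(private_data)
        weight -= lr * grad
    return weight, len(private_data)

def federated_average(updates):
    """Runs on the server: average the weights, weighted by example count."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total

# Hypothetical private data held on three separate devices.
device_data = [[1.0, 1.2, 0.8], [3.0, 3.1], [2.0]]

global_weight = 0.0
for _ in range(5):  # five communication rounds
    updates = [local_update(global_weight, data) for data in device_data]
    global_weight = federated_average(updates)

print(round(global_weight, 3))
```

In this toy setup the shared weight settles near the average of all six data points, even though the server only ever receives weights and counts.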

Personalized assistants are becoming common as well. They adapt to how a person speaks, remember preferences, and deliver more relevant answers over time.

Together, local processing, privacy safeguards, and personalization are making AI feel less like a distant service and more like a trusted companion.

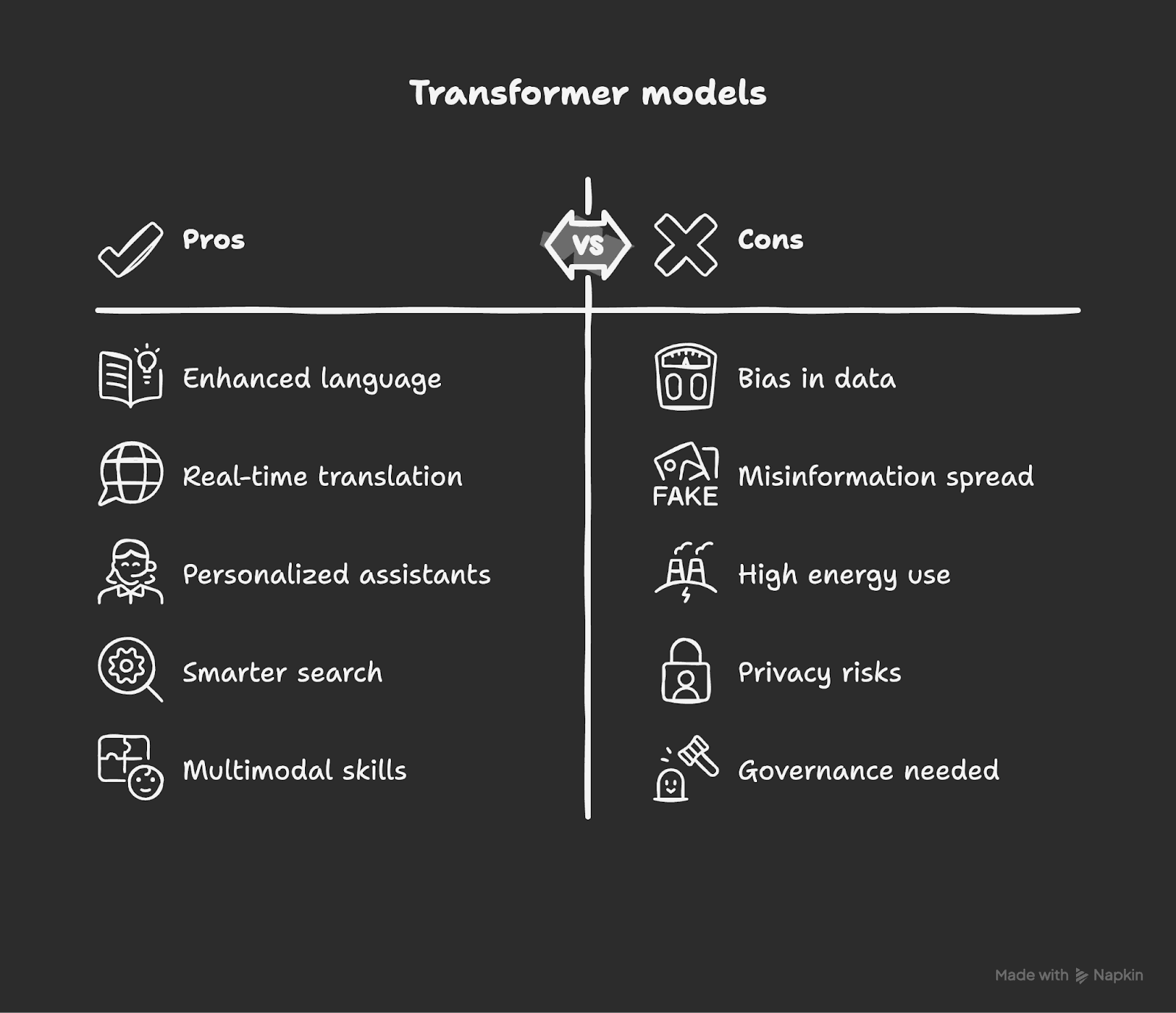

5. Opportunities and Risks of Transformers

Transformers bring unmatched potential but also real risks. Their future depends on how well we balance progress with responsibility.

Overview

Transformers have redefined natural language processing and the way we interact with digital tools. We have moved from slow, narrowly focused systems to ones that can understand text, generate content, and connect ideas. With these advances comes a responsibility to use these tools wisely while prioritizing privacy and fairness.