Generative AI in Enterprise LMS: Hype vs Reality

More than 60,000 employees at LTI Mindtree participated in a company‑wide Generative AI training program, yet that success story coexists with a sobering reality. According to an MIT study, roughly 95% of enterprise GenAI pilots fail to deliver a measurable profit & loss impact.

Generative AI’s promise in the learning space is being sold as a fast track to content creation, personalization, and scaled instructional design. The pitch makes it sound simple: type, train, scale. But as adoption accelerates, a fundamental truth is becoming clear: what enterprises think they are buying and what they actually get are rarely the same thing.

Put simply, GenAI is less about churning out more content and more about re‑engineering the flow of knowledge across the organization. That is where most LMS strategies go off track.

As talent‑strategy luminary Josh Bersin has said,

“Generative AI is about to change all this (what we know) forever … This AI‑driven approach to learning is not only more efficient but also more effective, enabling learning in the flow of work.”

This blog cuts through the noise. It unpacks where GenAI actually delivers inside enterprise LMS environments, where it falls short, and how leaders can harness it with intention, rigour, and long-term value in mind.

Roadmap: Generative AI in Enterprise LMS

This roadmap highlights the capability clusters that determine whether a GenAI-enabled LMS can scale beyond experimentation and deliver enterprise value. Each cluster reflects a specific area where leaders must assess maturity, investment readiness, and operational gaps before moving toward full adoption.

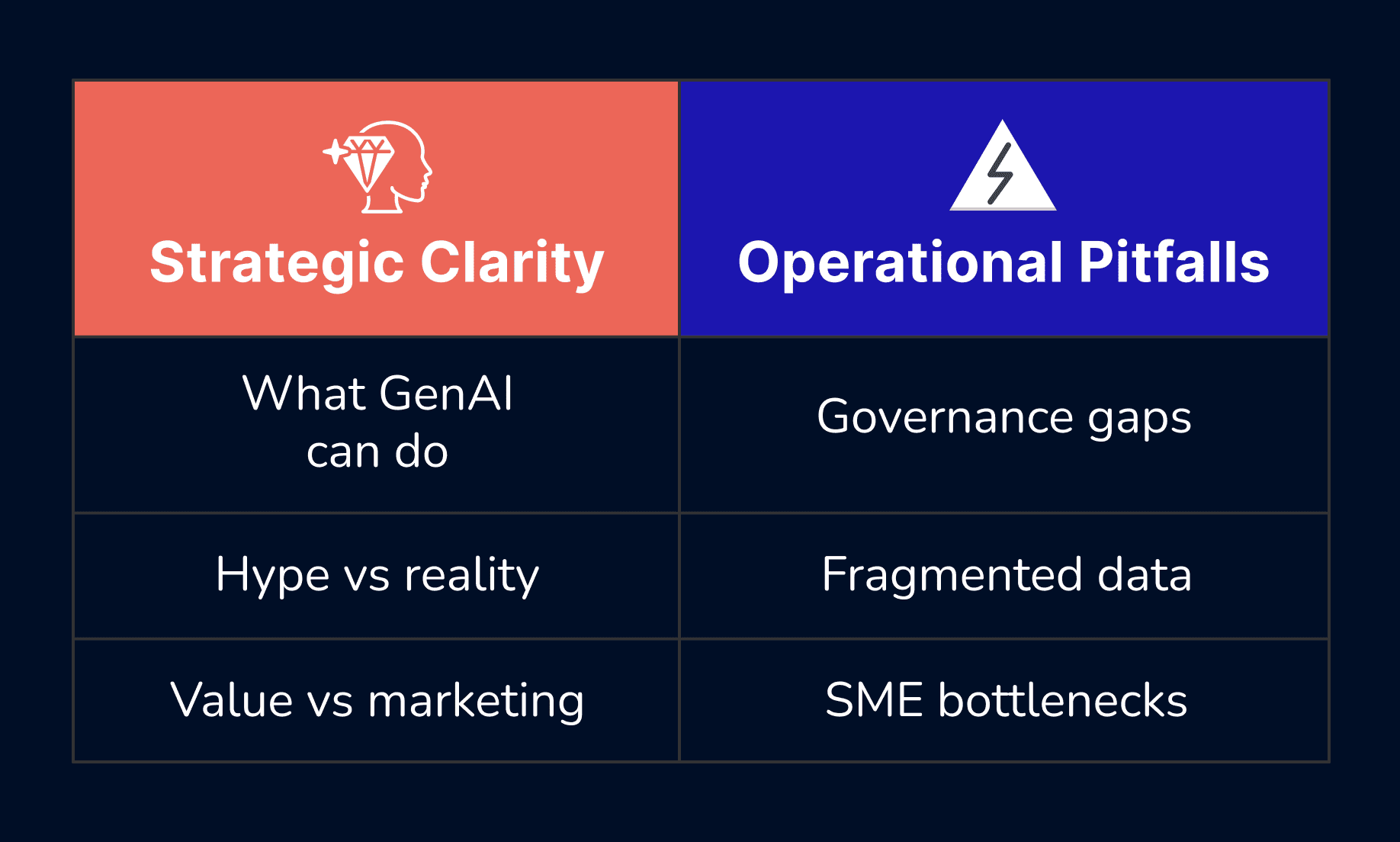

The Core Misconception: Generative AI Is Not a Learning Strategy

Generative AI (GenAI) has captivated enterprise leaders, but too often it is mistaken for a learning strategy when in fact it is a capability layer. This misunderstanding lies at the heart of many failed or underwhelming GenAI-LMS initiatives.

Market Reality: GenAI Adoption Is Rising, But Is Not Yet Common

While AI is increasingly part of learning and development toolkits, only a minority of learning teams currently use GenAI deeply. The market growth of LMS generally is strong, but GenAI is not yet the default. Of the organizations not yet using AI in learning, 46% plan to start within the next year.

- According to Continu’s corporate e-learning research, 30% of L&D teams report that they are already using AI-powered tools in their learning programs.

- At the same time, the broader LMS market is growing rapidly: Grand View Research projects the global LMS market will reach USD 70.83 billion by 2030, with AI cited as a key growth driver.

- According to Future Market Insights, the LMS market is estimated to reach USD 172.4 billion by 2035, at a CAGR of 18.1%.
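A CAGR figure is easier to sanity-check once it is turned back into arithmetic. The sketch below uses the relationship end = start × (1 + CAGR)^years to back out the starting market size implied by the Future Market Insights projection. The 10-year window (2025 to 2035) is an assumption made for illustration, since the forecast's base year is not stated here, and the function name is hypothetical.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Back out the starting value implied by an end value and a CAGR.

    Uses end = start * (1 + cagr) ** years, solved for start.
    """
    if years <= 0:
        raise ValueError("years must be positive")
    return end_value / (1.0 + cagr) ** years


# Figures from the text: USD 172.4 billion by 2035 at an 18.1% CAGR.
# ASSUMPTION: a 10-year forecast window (2025 to 2035); the base year is not given here.
start = implied_start_value(172.4, 0.181, years=10)
print(f"Implied starting market size: USD {start:.1f} billion")
```

Running the same calculation with a different window shows how sensitive long-range projections are to the base year, which is one reason figures from different research firms are hard to compare directly.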

Misaligned Expectations

Many experts report that, across multiple organizations, the core issue was not content generation speed but content curation quality and relevance. GenAI accelerates work, but it does not strategize.

- GenAI ≠ Instructional Design

Many executives believe that GenAI can replace the role of instructional designers (IDs). In practice, GenAI works best when paired with human design expertise. Without pedagogical oversight, auto-generated content can be generic, misaligned with business goals, or simply unfit for regulation-heavy environments.

- GenAI ≠ Learning Culture

Technology alone does not create a culture of continuous learning. Leaders who imagine GenAI will fix broken learning cultures are overlooking deeper structural issues: lack of governance, fragmented content, and disjointed learning pathways.

- GenAI ≠ Complete Learning Automation

Real-world enterprise deployments consistently show that validation, review, and governance remain necessary. Even with GenAI, subject-matter experts (SMEs) are often required to review output, especially in regulated or technical domains.

Risk of Overinvestment and Under-Delivering

According to a recent MIT analysis, as many as 95% of generative AI projects are failing to produce meaningful transformation.

In one survey reported by Investopedia, 42% of CFOs said their organizations are exploring GenAI, but 63% plan to commit less than 1% of next year’s budget to it. Talent remains a constraint: 63% of CFOs point to limited talent resources as a barrier, and 45% cite risk and governance concerns.

These numbers tell a deeper story: while exploration is widespread, commitment is measured. Many initiatives stall not for lack of enthusiasm, but because companies lack the internal readiness or governance to scale GenAI effectively.

The Governance & Ethical Blind Spots

For learning leaders, failing to define governance structures before deploying GenAI is like handing a power tool to an amateur. The risk of misuse is high, and the outcomes are unpredictable.

Research in education and LLMs shows substantial challenges. A systematic review of 118 studies identified practical and ethical risks around transparency, replicability, privacy, and fairness.

In corporate settings, meanwhile, governance can become the bottleneck: enterprises must enforce guardrails around content validation, data use, and versioning.

Strategic Reframing: GenAI as Capability, Not Strategy

To succeed, enterprises must reframe how they think about GenAI:

- View GenAI as an accelerant, not a replacement. Use it to speed up drafting, translation, and ideation, not to eliminate human roles.

- Build your infrastructure first. Establish content governance, SME review pipelines, and version control before scaling GenAI content generation.

- Align GenAI deployment with business metrics. Focus not on volume of content, but on impact: knowledge reuse, SME efficiency, time-to-competency, and compliance adherence.

- Iterate in low-risk domains. Start with pilot areas such as internal job aids, scenario-based microlearning, or knowledge codification—not high-risk compliance training.
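The "build your infrastructure first" point can be made concrete with a small review gate. The following is a minimal sketch, not a prescribed implementation: it assumes a four-stage workflow (draft, SME review, approved, published) and hypothetical names such as `ContentItem` and `move_to`. The idea it illustrates is that AI-generated drafts cannot reach learners without passing through SME review, and that every transition is recorded.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    DRAFT = "draft"
    IN_SME_REVIEW = "in_sme_review"
    APPROVED = "approved"
    PUBLISHED = "published"


# Allowed transitions: a draft can never jump straight to PUBLISHED.
ALLOWED = {
    Status.DRAFT: {Status.IN_SME_REVIEW},
    Status.IN_SME_REVIEW: {Status.APPROVED, Status.DRAFT},
    Status.APPROVED: {Status.PUBLISHED},
    Status.PUBLISHED: set(),
}


@dataclass
class ContentItem:
    title: str
    ai_generated: bool  # kept as metadata so audits can filter AI drafts
    status: Status = Status.DRAFT
    version: int = 1
    history: list = field(default_factory=list)

    def move_to(self, new_status: Status, actor: str) -> None:
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"{self.status.value} -> {new_status.value} is not allowed")
        # Sending a draft back from review counts as a new version.
        if self.status is Status.IN_SME_REVIEW and new_status is Status.DRAFT:
            self.version += 1
        self.history.append((self.status.value, new_status.value, actor))
        self.status = new_status


# Hypothetical usage: a low-risk job aid moving through the gate.
item = ContentItem("Onboarding job aid", ai_generated=True)
item.move_to(Status.IN_SME_REVIEW, actor="id_team")
item.move_to(Status.APPROVED, actor="sme_reviewer")
item.move_to(Status.PUBLISHED, actor="lms_admin")
```

Encoding the transitions as data rather than scattered conditionals keeps the policy easy to audit and to tighten later, for example by adding a compliance stage for regulated content.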

What Enterprises Actually Want (But Do Not Say)

Despite the noise surrounding Generative AI in learning, enterprise leaders often avoid expressing their true motivations directly. This is not because they are unclear, but because the language of corporate learning has spent a decade orbiting around “engagement”, “innovation”, and “modernization”, all while avoiding the operational pressures that actually drive decisions.

Let’s move past the surface-level narratives and address the underlying expectations that consistently shape GenAI adoption.

1. Shrinking the Learning Production Burden

Most organizations do not say this openly, but what they truly want is relief from the content production bottleneck that has weighed down L&D teams for years. Traditional instructional design workflows can take 3 to 12 weeks for a single mid-complexity course, and even minor revisions often require multi-step approvals involving SMEs, compliance teams, and functional leads.

Executives want GenAI to reduce content creation timelines drastically, without compromising compliance or accuracy. This expectation becomes even more pronounced when content volume grows faster than budgets, a reality most learning leaders navigate quietly.

2. Making Learning Personal

Personalized learning has been a strategic aspiration for nearly a decade. What prevented its full realization was its operational cost. The idea of producing individualized content paths for thousands of employees sounds ideal, but doing so manually is neither feasible nor financially practical.

GenAI is now seen as a potential equalizer: a way to deliver personalization at scale without expanding instructional design headcount. Enterprises want cost-neutral personalization that aligns learning to roles, skills, and performance expectations without introducing administrative overhead.

This reveals an underlying shift: leaders are no longer chasing engagement for its own sake but are looking for learning experiences that are measurably aligned to business performance.

3. Capturing Tacit Knowledge

Every large organization carries a silent risk: knowledge loss. Retirements, attrition, and restructuring can erase years of undocumented expertise. While leadership teams may not articulate this as a GenAI use case, their urgency around knowledge retention is unmistakable.

GenAI’s ability to turn SME conversations, playbooks, and informal insights into structured learning material addresses a fear many leaders hesitate to express openly. They need a way to preserve institutional knowledge before it disappears. This need becomes even more pressing in industries where compliance, safety, or operational continuity relies heavily on expert judgment.

4. Actionable Insights, Not More Data

LMS platforms have spent years accumulating dashboards filled with completion rates, time spent, and similar metrics that offer little strategic value. Leaders no longer want more data.

They want decision-ready insights. The quiet expectation is that GenAI should help interpret learning signals, not simply collect them. Executives want to identify skill gaps, anticipate training needs, and recommend interventions with clarity without requiring dedicated analytics teams.

This expectation reframes GenAI from a content tool into an intelligence layer, one that connects learning activity to business outcomes.

5. Reducing Dependency on External Vendors

For many enterprises, vendor costs account for 30% to 50% of their total L&D budget. Although this is rarely stated publicly, leaders recognize that overreliance on vendors creates financial rigidity and slows down content iteration cycles.

GenAI creates a viable path to shift from vendor-heavy ecosystems to hybrid models, where internal teams can produce more and outsource less.

But this shift is not simply about cost. It is also about ownership, controlling the pace, quality, and relevance of learning content.

6. The Pressure to Modernize, Without Raising Risk Exposure

Executives feel the organizational pressure to modernize learning, especially as talent strategies lean more heavily toward skills-based approaches. Yet at the same time, they must minimize operational risk.

GenAI is perceived as a way to modernize without dramatic process disruption, but the expectation is nuanced. Leaders want innovation that slots cleanly into existing workflows and compliance frameworks. They want modernization without jeopardizing governance and without demanding massive cultural change.

This dual pressure to innovate quickly, but safely, shapes nearly every GenAI-LMS adoption timeline, even when not stated explicitly.

7. What These Expectations Reveal

Taken together, these unspoken motivations point to a deeper truth. Enterprises are not looking for flashy GenAI capabilities.

- They are looking for operational relief.

- They want to unblock learning bottlenecks that have compounded for years.

- They want a better flow of knowledge, not just better tools.

- They want efficiency without disorder, personalization without overhead, modernization without upheaval.

The Hype Cycle

The conversation around Generative AI in enterprise learning has been shaped less by operational truth and more by aggressive marketing. Vendors have positioned GenAI as a rapid, transformative engine that can overhaul instructional design, automate personalization, and eliminate production backlogs. The narrative is polished and confident, but often disconnected from what actually happens after implementation.

The Promise of Instant Content Creation

The most common claim is that GenAI can produce complete, instructionally sound content within minutes. The pitch usually includes phrases like "auto-generate full courses" or "replace weeks of SME consultation."

The reality is more restrained, as many of us have experienced in one way or another.

GenAI can create initial drafts and provide structure, but the responsibility for accuracy, contextual relevance, regulatory alignment, and cultural nuance still rests with human teams. The content produced by AI often lacks domain depth and requires validation from SMEs. This means production time may shrink, but it does not disappear. The gap between expectation and reality becomes obvious when enterprises discover that fast content does not equal ready content.

Donald H. Taylor, the L&D researcher behind the Global Sentiment Survey, adds:

“If we're only using AI to create more content, we are probably dooming ourselves to irrelevance … We can use it … for far greater impact.”

Taylor is pointing to a broader and more common problem: using AI only to produce more content misses the real opportunity. The real value comes when AI is used to solve bigger learning problems, not just to increase volume.

Personalization

Vendors frequently claim that GenAI can produce personalized learning paths at scale with little or no additional overhead. Each employee receives a tailored experience based on skills, performance, or role requirements.

In practice, personalization requires clean data, standardized taxonomies, and a governance framework that ensures recommendations are accurate. Many enterprises do not have these foundations in place. When the underlying data is inconsistent, personalization becomes unreliable and sometimes counterproductive.

What is marketed as a plug-and-play capability usually requires months of foundational work behind the scenes.
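One way to gauge that foundational work is to measure how much of the existing skill data already maps onto a standardized taxonomy. The sketch below is illustrative only: the function name, the sample taxonomy, and the learner records are all hypothetical, and matching is done by simple case and whitespace normalization rather than any real skills-matching service.

```python
def taxonomy_coverage(learner_skills: dict[str, list[str]], taxonomy: set[str]) -> dict:
    """Report how many learner skill tags map onto a standardized taxonomy."""
    canonical = {t.strip().lower() for t in taxonomy}
    total = matched = 0
    unmapped: set[str] = set()
    for tags in learner_skills.values():
        for tag in tags:
            total += 1
            norm = tag.strip().lower()
            if norm in canonical:
                matched += 1
            else:
                unmapped.add(norm)
    coverage = matched / total if total else 0.0
    return {"coverage": coverage, "unmapped": sorted(unmapped)}


# Hypothetical data for illustration only.
taxonomy = {"python", "data analysis", "project management"}
learners = {
    "emp_001": ["Python", "Data Analysis "],
    "emp_002": ["proj mgmt", "python"],
}
report = taxonomy_coverage(learners, taxonomy)
print(report)
```

A low coverage figure, or a long list of unmapped tags such as free-text abbreviations, is a signal that personalization recommendations built on this data would be unreliable until the taxonomy work is done.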

The Overstatement of Instructional Intelligence

Another claim is that GenAI can replicate the decision-making of experienced instructional designers. Vendors often showcase flawless multi-step scenarios, assessments, and learning journeys during demos.

In real deployments, GenAI can recommend structures and outline content, but it cannot independently determine which instructional approach best supports a specific performance objective. Learning design requires judgment, context, and an understanding of organizational skill needs that AI cannot fully replicate.

The Oversimplification of LMS Integration

A significant portion of vendor messaging implies that GenAI can integrate smoothly with any LMS and instantly enhance existing workflows. The demos usually show clean interfaces, seamless content generation, and automatic publishing.

In reality, integration depends on API maturity, data consistency, content formats, and internal review processes. Most organizations require custom configuration, alignment with compliance protocols, and content verification layers. These steps add time and resource demands that vendors rarely emphasize upfront.

Full Automation

Some vendors promote GenAI as a way to automate entire learning cycles from content creation to assessment and impact measurement. The pitch is that AI will run the lifecycle end-to-end.

Enterprises still need humans at key decision points, especially in regulated sectors. Content review, compliance approval, risk assessment, and business alignment all remain human-driven. GenAI automates fragments of the workflow, not the entire system. Automation improves efficiency, but it does not eliminate accountability.

Where the Hype Meets Resistance

Eventually, the marketing narrative collides with operational constraints. Issues such as data readiness, SME involvement, version control, and learning governance cannot be avoided. This is where enterprises begin to recalibrate expectations and shift from a hype-driven conversation to a capability-driven one.

The moment an organization sees the workload associated with validation, integration, and governance, it becomes clear that GenAI is not a disruptor on its own. It is a multiplier for teams that already have strong processes. It is not a repair tool for teams that do not. The hype cycle ends not because GenAI fails, but because the real work of enterprise transformation is still required.

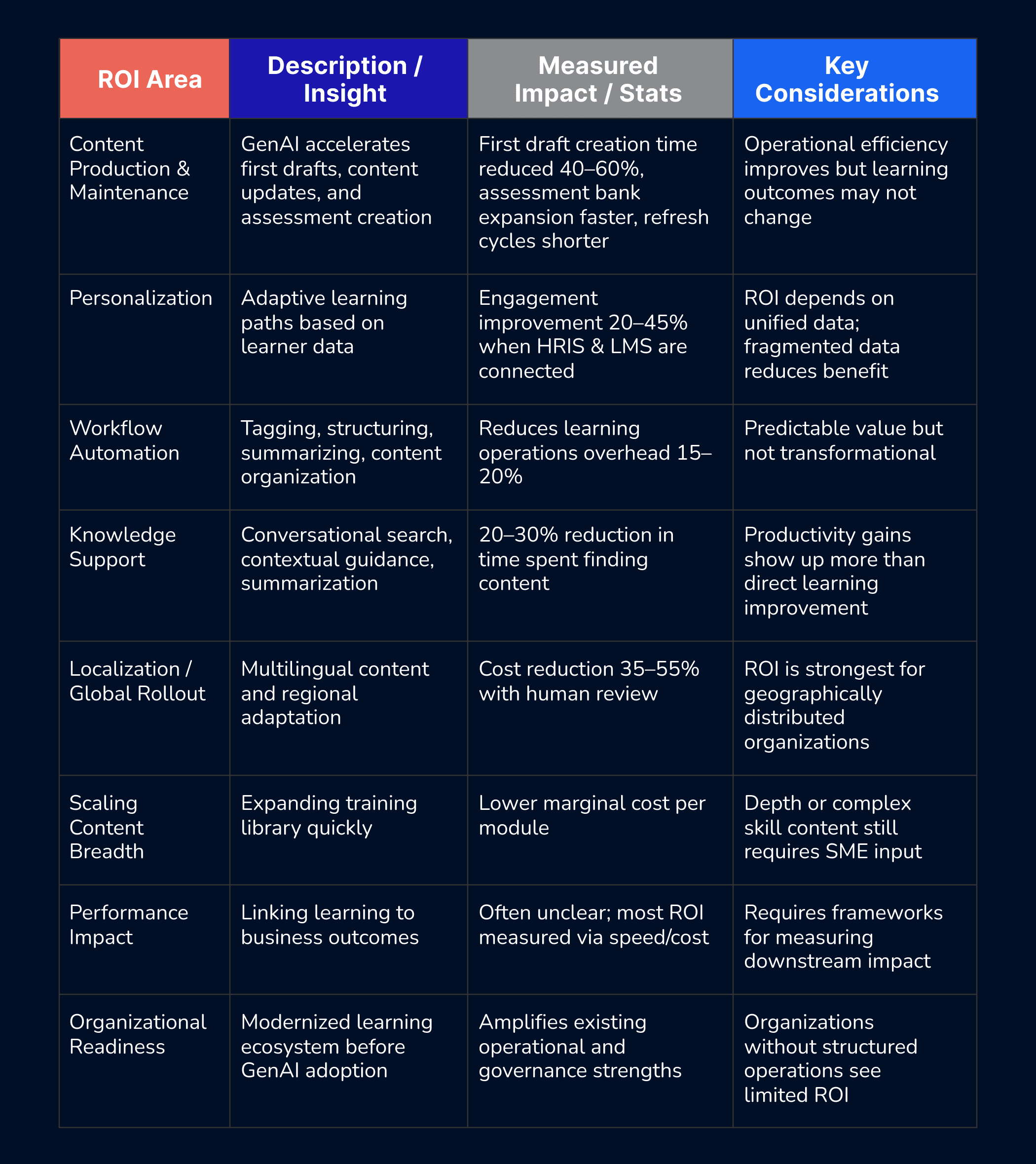

What GenAI Actually Does Well Inside an LMS

A clear view of GenAI strengths helps cut through the marketing noise and focus on the capabilities that genuinely move the needle for enterprise learning teams.

- Accelerates content drafting without compromising SME control

GenAI can reduce first draft creation time for modules, scripts, scenario outlines, and knowledge checks by 40 to 60 percent. It speeds up production but does not replace the need for domain validation.

- Improves content variation and refresh cycles

Enterprises often struggle with annual or quarterly updates. GenAI can generate updated versions of compliance modules, product knowledge sessions, or skill content in minutes, which lowers refresh overheads by 25 to 35 percent.

- Supports adaptive learning paths when fed with clean, unified data

When an LMS is integrated with an HRIS or skill graph, GenAI can orchestrate personalized learning paths that increase learner engagement by 20 to 45 percent. Without unified data, these benefits do not materialize.

- Streamlines assessment creation and calibrates difficulty levels

Automated question generation, distractor creation, and rubric drafting help reduce assessment development time by 30 to 50 percent. Human review remains essential, but the workload becomes significantly lighter.

- Summarizes large content repositories for faster navigation

Enterprises with years of legacy training material often lack visibility into existing assets. GenAI can create searchable summaries, knowledge cards, and concept maps that improve discoverability and reduce redundancy.

- Enhances training operations rather than replacing them

The real efficiency gains appear in workflow automation such as tagging, metadata generation, content classification, and transcript structuring. These tasks typically consume 15 to 20 percent of instructional design time and are easily automated.

- Drives multilingual expansion without full re-authoring

GenAI-powered translation, paired with human linguistic review, can reduce localization costs by 35 to 55 percent. This makes the global rollout of training more feasible and consistent.

- Improves knowledge support inside the LMS

Conversational search, contextual assistance, and chat-based navigation help employees find content faster. Large enterprises report a 20 to 30 percent reduction in time spent locating relevant modules or documentation.

- Boosts learner productivity in self-paced programs

Learners benefit from instant clarifications, content breakdowns, and examples tailored to their role. Early deployments show measurable improvements in course completion rates and learning satisfaction.

- Strengthens analytics quality when paired with clean behavioral data

GenAI can detect patterns in learner progression, skill gaps, and content performance more quickly. This enables faster iteration cycles for L&D teams and more accurate forecasting of training needs.

Where GenAI Consistently Fails or Underperforms in an LMS

Even with strong momentum and rapid experimentation across enterprises, GenAI has clear structural limitations inside learning ecosystems. These gaps are not temporary. They stem from data constraints, organizational realities, and model limitations that vendors often gloss over. Understanding these failure points is essential for making responsible investment decisions.

Josh Bersin adds a point about the nature of the human-AI relationship:

“AI doesn’t have ingenuity … what makes a business successful … is new ideas, it’s innovation … empathy … AI doesn’t have the genetic characteristics of humans.”

Bersin is reminding leaders that AI can help us work faster, but it cannot think creatively or understand people the way humans do. Real innovation still depends on human judgment, empathy, and original ideas.

1. Weak Performance in Highly Regulated or Precision-Heavy Domains

- GenAI underperforms in domains that require strict regulatory, compliance, medical, or audit-ready language.

- Hallucination risk rises when models attempt specialized terminology or policy-aligned statements.

- SME review cycles often increase, negating expected efficiency gains.

2. Inability to Personalize Without Deep, Connected Enterprise Data

- True personalization is impossible without unified, connected enterprise data; the limitation is architectural, not model-driven.

- Most organizations operate with fragmented learner records, shallow skill profiles, and siloed HR and talent systems.

- Without this foundation, an LMS cannot generate meaningful learning paths, adaptive sequences, or role-specific recommendations.

- The result is surface-level personalization that often misrepresents job needs and learner capability.

3. Overreliance on Generic Models That Cannot Understand Context

- Many vendors rely on generic foundation models that cannot interpret domain nuance or organizational context.

- These models produce content that looks polished but lacks functional accuracy, especially for technical, engineering, or healthcare teams.

- Fine-tuning can solve this gap, but it demands clean data, governance maturity, and organizational bandwidth that most enterprises do not yet have.

4. Difficulty Handling Dynamic, Real-Time Operational Knowledge

- GenAI performs well with stable content but struggles in environments where operational knowledge changes frequently.

- Product updates, compliance shifts, and rapid feature releases create knowledge drift that the model cannot track on its own.

- Without disciplined update pipelines, the LMS generates content that is outdated, inconsistent, or misaligned with current workflows.
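A disciplined update pipeline can start with something as simple as a staleness check. The sketch below assumes each learning module records which source document it was built from and when it was last reviewed; the field names (`source_id`, `last_reviewed`) and the sample records are hypothetical. It flags modules whose source changed after the last review so they can be queued for regeneration and SME validation.

```python
from datetime import date


def find_stale_modules(modules: list[dict], source_updates: dict[str, date]) -> list[str]:
    """Return IDs of modules whose source changed after their last review."""
    stale = []
    for module in modules:
        updated = source_updates.get(module["source_id"])
        # Unknown sources are skipped here; a stricter policy could flag them.
        if updated is not None and updated > module["last_reviewed"]:
            stale.append(module["id"])
    return stale


# Hypothetical records for illustration only.
modules = [
    {"id": "mod-101", "source_id": "policy-a", "last_reviewed": date(2024, 3, 1)},
    {"id": "mod-102", "source_id": "policy-b", "last_reviewed": date(2024, 6, 15)},
]
source_updates = {"policy-a": date(2024, 5, 20), "policy-b": date(2024, 4, 2)}
print(find_stale_modules(modules, source_updates))
```

The model itself has no way to know that a policy changed last month; a check like this is what feeds that signal into the content workflow.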

5. Limited Ability to Evaluate True Skill Mastery

- GenAI can rapidly produce assessments, but most fail to measure real competence or on-the-job capability.

- Models can generate questions, yet they cannot ensure psychometric validity, cognitive progression, or bias control.

- Auto-generated tests often default to recall-level items instead of application, reasoning, or problem-solving.

- This leads to misleading proficiency signals and weak data foundations for skills mapping and career pathways.
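The recall-level bias can be spot-checked before an auto-generated test reaches learners. The following is a deliberately crude sketch: it labels each question by its first word against two small, hand-picked verb lists loosely inspired by Bloom's taxonomy. The verb lists, function names, and sample questions are assumptions for illustration, and a heuristic like this is no substitute for psychometric review.

```python
from collections import Counter

# ASSUMPTION: a tiny, hand-picked verb list; real item analysis needs expert review.
RECALL_VERBS = {"define", "list", "name", "identify", "recall", "state"}
APPLICATION_VERBS = {"apply", "analyze", "evaluate", "design", "troubleshoot", "compare"}


def classify_item(question: str) -> str:
    """Label a question stem by its first word only (deliberately crude)."""
    words = question.strip().split()
    first = words[0].lower().strip(",.:;") if words else ""
    if first in RECALL_VERBS:
        return "recall"
    if first in APPLICATION_VERBS:
        return "application"
    return "unclassified"


def recall_share(questions: list[str]) -> float:
    """Fraction of questions labelled as recall-level."""
    counts = Counter(classify_item(q) for q in questions)
    return counts["recall"] / len(questions) if questions else 0.0


# Hypothetical auto-generated items for illustration only.
questions = [
    "Define the term 'data residency'.",
    "List the three approval stages.",
    "Troubleshoot the failed export described in the scenario.",
    "What is the escalation path?",
]
share = recall_share(questions)
print(f"Recall-level share: {share:.0%}")
```

A high recall share is a prompt for an instructional designer to rework the item set toward application and reasoning, not a verdict on the assessment's validity.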

6. Poor Integration With Legacy LMS Architectures

- The primary constraint is often the LMS architecture, not the GenAI model.

- Most legacy platforms were never built for real-time inference, dynamic content generation, or granular personalization.

- This results in latency, performance instability, and inconsistent learner experiences.

- Enterprises frequently experience a gap between polished vendor demos and what their LMS can realistically support at scale.

7. Lack of Clear Governance for Accuracy, Bias, and Risk

GenAI in learning requires higher oversight than traditional training tools.

- Without governance, content can introduce inaccuracies, biased explanations, or inappropriate references.

- Enterprises often underestimate how much internal review and red teaming are required to maintain quality, leading to hidden operational costs that offset expected savings.

8. Weakness in Measuring True ROI Beyond Speed and Volume

Efficiency gains are easy to capture, but the strategic impact is not.

- Many organizations track content production speed but do not measure learning transfer, behavior change, or downstream business impact.

- This leads to inflated expectations and frustration when leadership cannot see the promised performance outcomes.

9. Misalignment With How Adults Actually Learn

Most GenAI learning features optimize for speed, not cognitive effectiveness.

- Adults learn through repetition, contextual stimulation, reflection, and application.

- GenAI-generated content can feel surface-level or overly compressed, which impacts retention and motivation.

- Without instructional design oversight, AI-assisted learning becomes more about volume than value.

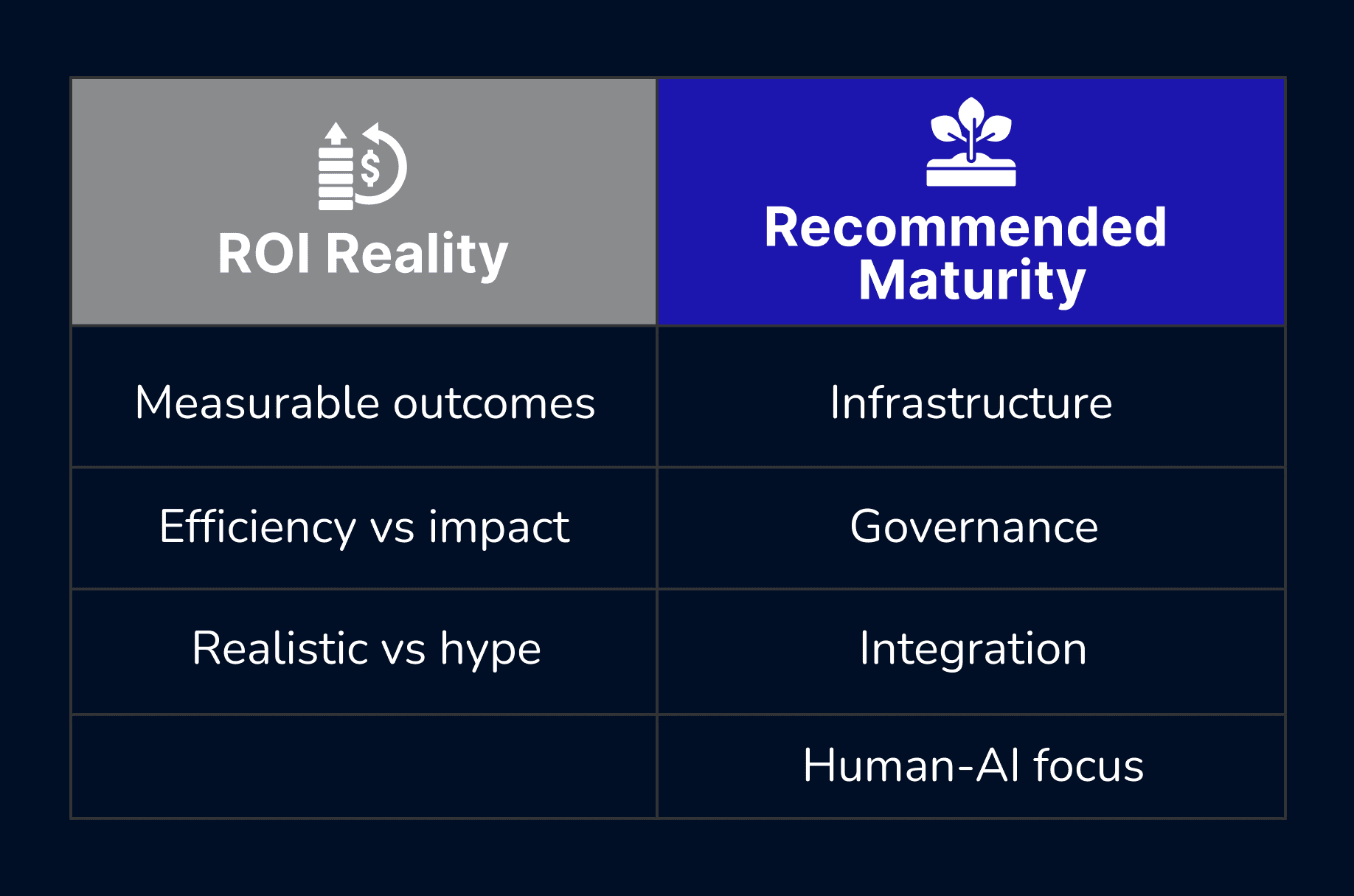

The Hard Truth About Enterprise Readiness for Learning

A realistic assessment of GenAI adoption shows that most enterprises are not structurally prepared to extract meaningful value from AI inside their LMS. The readiness gap is not technical. It is operational, organizational, and cultural. The following points capture the issues that consistently determine whether GenAI delivers impact or stalls after pilots.

- Foundations are fragmented and inconsistent

Many enterprises attempt to deploy GenAI on top of messy learning ecosystems. Content libraries lack structure, metadata is incomplete, and instructional materials follow no unified design standards. When GenAI interacts with this environment, it produces inconsistent outputs and amplifies existing flaws instead of improving them.

- Data maturity is far below what personalization requires

Enterprises often expect adaptive learning without having reliable learner profiles, updated skill maps, or integrated HR systems. Without these underpinnings, GenAI personalization becomes shallow and misaligned, creating recommendations that do not reflect role requirements or performance gaps.

- Most teams treat GenAI as a feature, not as a workflow transformation

L&D teams frequently add AI content creation tools or automated assessments without rethinking end-to-end content operations. This results in isolated pockets of efficiency but no systemic improvement. GenAI only creates value when workflows, collaboration models, and quality controls evolve alongside the technology.

- Executive enthusiasm rarely converts into operational support

Leadership often approves GenAI initiatives for strategic positioning, but does not fund the underlying changes required, such as data integration, governance frameworks, or new operational roles. Without this support, pilots succeed, but enterprise rollout fails.

- Instructional designers and SMEs are not prepared for AI-assisted workflows

Many practitioners lack training in AI evaluation, risk awareness, and quality assurance. SMEs struggle to validate AI-generated outputs because they do not have clear guidelines on what constitutes acceptable AI performance. This slows adoption and increases review cycles.

- Cultural mindsets still prioritize efficiency over learning effectiveness

Organizations often celebrate faster content production while ignoring whether learning actually improves performance outcomes. GenAI accelerates tasks, but it cannot compensate for unclear learning goals or poorly defined measurement practices.

- Legacy LMS architectures limit real-time AI performance

The majority of LMS platforms were built for static content delivery, not dynamic generation or adaptive sequencing. Infrastructure limitations create performance bottlenecks that constrain what GenAI can realistically achieve, regardless of vendor promises.

- Only a small segment of enterprises has true readiness

The organizations that succeed with GenAI in learning share specific traits:

- Learning is treated as a strategic workforce lever, not a support function.

- Content operations are standardized and governed.

- Data flows cleanly across HR, performance, and skills systems.

- GenAI is positioned as infrastructure, not as a marketing feature.

The ROI Patterns That Actually Hold Up Across Enterprises

The following table summarizes the ROI patterns of GenAI in enterprise LMS environments, highlighting where measurable value consistently emerges, the scale of impact, and the conditions that determine success.

The Future State of GenAI in Enterprise LMS

Generative AI in enterprise LMS is entering a phase of measured maturity. The initial hype has waned, and organizations are beginning to understand both its limitations and its strategic potential. The next wave of adoption will be defined less by novelty and more by integration, governance, and measurable business impact.

1. AI as a Learning Operations Multiplier

Rather than acting as a replacement for instructional designers or SMEs, GenAI will increasingly serve as a multiplier for existing capabilities.

- Enterprises will use AI to reduce repetitive manual tasks while human teams focus on strategy, design, and learner outcomes.

- This will lead to faster content iterations, more frequent updates, and improved agility in responding to business changes.

2. Deep Integration with Enterprise Systems

The future will favor GenAI systems that are tightly integrated with HR, performance management, and skills frameworks.

- AI will recommend learning paths that are directly tied to competency frameworks and business objectives.

- Organizations that unify data across LMS, HRIS, and operational systems will see the most measurable gains in engagement, retention, and performance.

3. Responsible AI and Governance as a Competitive Advantage

Enterprises that implement robust AI governance frameworks will gain a competitive edge.

- Monitoring for accuracy, bias, and compliance will become standard practice.

- Clear policies will allow organizations to scale AI responsibly without sacrificing content integrity or regulatory compliance.

4. Predictive Learning Analytics and Skill Forecasting

GenAI will increasingly contribute to forward-looking insights, predicting skill gaps, and recommending targeted interventions.

- By analyzing learning patterns, role requirements, and organizational goals, AI will help identify where training will have the highest impact.

- L&D teams will shift from reactive content creation to proactive skill development strategies.

5. Microlearning and Just-in-Time Support

AI will enable the delivery of highly contextualized, bite-sized learning experiences at the point of need.

- Employees will access short, relevant modules triggered by workflow or performance signals.

- This reduces time away from work and improves knowledge retention, creating measurable improvements in productivity.

6. Continuous Feedback Loops for Learning Effectiveness

Next-generation LMS platforms will use AI to continuously analyze learner interactions, content effectiveness, and engagement metrics.

- Feedback loops will automatically adjust content sequencing, difficulty levels, and delivery formats.

- Organizations will gain actionable insights, moving from periodic course evaluations to real-time learning optimization.

7. The Human-AI Partnership

The most significant evolution will be cultural. GenAI will not replace humans but enhance their decision-making and creative capabilities.

- SMEs, instructional designers, and L&D leaders will become curators, validators, and strategists rather than solely content creators.

- The partnership between human expertise and AI efficiency will define the future of enterprise learning.

Conclusion

Generative AI in enterprise LMS has moved past hype into a phase of practical opportunity. It excels in accelerating content creation, supporting knowledge discovery, improving operational efficiency, and enabling targeted personalization, but only when organizations are prepared to manage the underlying complexity.

The future of enterprise learning will be defined by human-AI partnerships. SMEs, instructional designers, and L&D leaders will focus on strategic curation and quality assurance, while GenAI handles repetitive, time-consuming tasks and enables faster iteration.

Amy Farner, EVP of Product at The Josh Bersin Company, has highlighted what is at stake:

“The old ways of working will not survive the AI revolution. If organisations are not willing to radically transform the ways they create content, they risk becoming irrelevant.”

Enterprises that succeed will share common characteristics: strong data foundations, integrated workflows, governance structures, and a clear vision of how learning drives business outcomes. Organizations that ignore readiness or chase novelty without preparation will face fragmented pilots, inconsistent adoption, and inflated expectations.

The promise of GenAI is real, but it rewards discipline, foresight, and operational maturity. When applied strategically, it becomes a multiplier of human expertise. It works as a catalyst for scalable learning and a measurable driver of enterprise performance.

To successfully implement Generative AI at scale, organizations need an efficient LMS foundation that supports integration, personalization, and governance. Explore our Enterprise GenAI solutions for LMS to see how Arbisoft helps companies build scalable, AI-ready learning platforms that accelerate content creation, streamline workflows, and ensure measurable business impact.