Moving from Legacy Data Platforms to Databricks: A Step-by-Step Guide

If you’re still running your analytics on some ancient data warehouse, it probably feels like you’re driving a ’92 Corolla in a Tesla world. Back in the day, that old DW did its job. But now it’s clunky, eats up way too much cash, and, honestly, just gets in the way whenever you want to do something cool like build real-time dashboards or develop an ML model without jumping through a million hoops.

Why Legacy Data Warehouses Are Basically Dinosaurs

What’s actually wrong with these old data warehouses? Let’s break it down.

Rigid Schema Design

First thing, schemas. Star, snowflake, whatever. They’re fine if you know exactly what you need, forever. But the minute someone wants to add a new data source or tweak a business rule? Suddenly, you’re stuck in schema-change purgatory for weeks.

Storage and Compute Coupling

Then there’s the whole storage vs. compute mess. In these legacy setups, the two are bolted together, so you end up paying for compute even when all you want is somewhere to chuck more data. Cloud systems? Yeah, you can scale each one separately. Way smarter.

Limited Analytics Capabilities

Ever tried running machine learning on Teradata or Oracle? Good luck. You’ll see why every self-respecting data scientist runs screaming to the cloud.

So yeah, legacy data warehouses had their moment. But unless you’re nostalgic for slow queries and sky-high bills, it’s time to move on.

The Starting Line: Assessing Your Current Environment

Before you even dream about shoving a single byte across the wire, you’ve got to know what mess you’re sitting in. This isn’t just checking boxes on some IT checklist. You’re basically digging through the bones of your organization’s data life, like the weird family tree with all the secrets.

Business Assessment: It’s Not Just Code and Numbers

The first move is to actually talk to people. Grab your analysts, a few business folks, IT nerds, even that exec who thinks “data lake” is an actual body of water. You’d be amazed how many migrations happen in total isolation, like everyone’s got noise-canceling headphones on. What’s actually running in this warehouse? Old-school reports? Some random dashboards? Are you ticking boxes for compliance, or is there something more mission-critical hiding in there? Nail this down or your migration’s basically doomed.

Quick story time: I once assessed a finance business that was properly old-school, lots of spreadsheets. Their whole risk model ran on overnight jobs from a creaky warehouse. The migration wasn’t just about “moving data.” They wanted to run risk calcs during the day, not wait for the moon to rise. Suddenly, latency and data freshness were everything. If you just looked at the SQL, you’d totally miss that. You only find out if you ask the right people.

And don’t skip the “what can your system actually do” bit. Real-time analytics? ML? Self-serve BI? Nine times out of ten, the answer’s “yeah, not really.” Which is kinda the point of moving in the first place.

Architecture Evaluation: Mapping the Maze

Here’s where the nerd gloves come off. You need to map your data world, warts and all. Scribble out the models: are you living in star schema land, tangled in snowflakes, or knee-deep in transactional spaghetti? This shapes your whole migration strategy.

Next up: storage. Where is this stuff actually living? Cloud? On-premises? A secret folder called “Bob’s Backup” on someone’s desktop? Get eyes on every database, every flat file, every weird S3 bucket.

Integration’s a beast, too. How’s data moving in and out? Old-school ETL tools? Bash scripts someone wrote in 2011? Maybe something “innovative”? Then there are the dependencies. Sometimes the tiniest data mart is propping up some ancient, business-critical dashboard nobody’s owned up to in years. Miss that, and you’ll have folks with pitchforks at your desk. Talk to the old-timers. They know where the skeletons are buried.
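If you can get those dependencies written down as simple “this feeds that” pairs, a few lines of Python will tell you what breaks when a table moves. This is just a sketch: the table, mart, and dashboard names are made up, and it assumes you’ve already collected the pairs from ETL configs, scheduler jobs, or interviews.

```python
from collections import defaultdict, deque

# Hypothetical lineage pairs: (upstream object, downstream consumer).
EDGES = [
    ("raw.orders", "mart.sales_daily"),
    ("raw.customers", "mart.sales_daily"),
    ("mart.sales_daily", "dashboard.exec_revenue"),
    ("mart.sales_daily", "report.finance_month_end"),
    ("raw.inventory", "mart.stock_levels"),
]


def downstream_of(obj, edges):
    """Return every object that directly or indirectly consumes `obj`."""
    children = defaultdict(list)
    for upstream, consumer in edges:
        children[upstream].append(consumer)

    # Breadth-first walk so each consumer is visited once, even in tangled graphs.
    seen, queue = set(), deque([obj])
    while queue:
        current = queue.popleft()
        for consumer in children[current]:
            if consumer not in seen:
                seen.add(consumer)
                queue.append(consumer)
    return sorted(seen)


if __name__ == "__main__":
    # Everything that would be affected if raw.orders moved or changed.
    print(downstream_of("raw.orders", EDGES))
```

Run it for each object you plan to move first; anything with a long downstream list deserves a conversation before it gets touched.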

Technical Assessment: Under the Hood

Now for the fun part: performance. How fast are your queries, really? Is your data refresh rate measured in minutes, hours, or “whenever the job decides not to crash”? These numbers are your baseline, so write them down, and don’t kid yourself that “it’s fine.”
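A baseline doesn’t need fancy tooling. Here’s a rough Python sketch that turns a handful of recorded runtimes into a median and a 95th percentile per query. The query names and timings are invented, and it assumes you’ve pulled real runtimes from your warehouse’s query log.

```python
import math
import statistics


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def baseline(samples_by_query):
    """Summarize runtimes (in seconds) per query as median and p95."""
    return {
        name: {
            "median_s": statistics.median(runs),
            "p95_s": percentile(runs, 95),
        }
        for name, runs in samples_by_query.items()
    }


# Hypothetical runtimes in seconds, e.g. copied out of a query history export.
SAMPLES = {
    "daily_sales_report": [42.0, 45.5, 41.2, 120.3, 44.1],
    "risk_rollup": [610.0, 598.4, 640.9, 605.2],
}

if __name__ == "__main__":
    for name, stats in baseline(SAMPLES).items():
        print(name, stats)
```

The median tells you what a normal day looks like; the p95 tells you about the bad days, which are usually what people are actually complaining about.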

ETL is where technical debt comes home to roost. Some of these pipelines are held together with duct tape and hope. You want to untangle, modernize, and maybe even sleep at night.

You can’t ignore security. What risks are you running now? Are you dealing with GDPR, HIPAA, some terrifying industry-specific rulebook? How locked down is your access? The new shiny (like Databricks with Unity Catalog) can help, but you can’t fix what you haven’t bothered to document.

All in all, it’s part scavenger hunt, part therapy session, and part crime scene investigation. Say what you like, but data migrations aren’t boring.

Defining Your Target Platform

You’ve got your direction, you sort of know where you stand, and now it’s time to pick where you want to land. Databricks sounds fancy with the whole Lakehouse thing, but don’t just jump in headfirst; think it through.

Business Alignment: The North Star

If your new setup isn’t actually helping you hit business goals, then what’s the point? Is your boss breathing down your neck about slow reports? Or are you bleeding money on clunky old systems? Maybe you want to roll out some shiny new data products? Nail down what you’re chasing, whether it’s faster reporting, slashed costs, whatever, and figure out some real KPIs. Like, if faster reporting is the dream, make it specific: “Cut report times in half.”

Modernization: Lift-and-Shift, Burn It Down, or Play It Safe?

This bit’s interesting. You’ve got choices:

Lift-and-shift

Basically, you’re boxing up all your old junk, hauling it to a new house, and calling it a day. Fast? Absolutely. But you’re still stuck with grandma’s floral couch, i.e., technical debt. There are tools to help, sure, but don’t expect miracles.

Full Modernization

You’re tearing it all down, redesigning your data models, rebuilding your pipelines, adding some AI magic if you’re feeling extra. It’s a beast, takes time, eats up resources, but it's shiny and future-proof.

Hybrid

Honestly, this one’s the sweet spot for most folks. Do a quick lift-and-shift so you’re not trapped in legacy hell, then start modernizing piece by piece. You get quick wins, but you’re not stuck forever with old baggage. Nine times out of ten, this is what I’d do.

Tech Stack: Welcome to the Lakehouse Party

Moving to Databricks? Congrats, you’re officially in cloud country. Here’s what you’re dealing with:

Serverless Computing: No more babysitting servers. Databricks auto-scales clusters, so you can stop sweating the infrastructure details.

Automated Data Pipelines: Think Delta Live Tables and their ilk. Declarative ETL, fewer headaches. Set it up, let it run.

Storage: Delta Lake’s the big cheese here. You get ACID transactions, schema enforcement, and time travel (not the sci-fi kind, but still cool). Stuff like Delta Lake UniForm helps with compatibility by letting readers built for other table formats, such as Iceberg clients, read your Delta tables. If you’re not using Delta, are you even Databricks-ing?

Distributed Computing: Spark is your engine. Learn how to partition, shuffle, and cache. Make Spark work for you, not the other way around. Performance is everything, so don’t sleep on tuning.

Partner Ecosystem: Getting by With a Little Help

Nobody’s out here building a data empire solo, unless you’ve got a clone army or something. Databricks? Yeah, they’ve got a pretty wild partner scene that’ll speed things up if you play it smart.

Ingestion Partners

Think of Fivetran or Qlik Replicate as your personal robot assistants, grabbing data from everywhere and shoving it into your Lakehouse. You don’t have to hand-code all that mess, huge time-saver, honestly.

ETL Partners

Okay, Databricks is pretty slick at ETL already, but I get it, you might have old-school stuff like Informatica lying around, or maybe you’re playing with newer players like Prophecy. You can hook those up for specific workflows and not reinvent the wheel.

Reporting Partners

Good news, your BI tools aren’t about to be tossed in the bin. Power BI, Tableau, AtScale, yeah, all of them can plug right into Databricks. Thanks to Lakehouse Federation, you can even have ’em querying your old-school data warehouse through Databricks while you’re moving over. Makes the whole BI migration way less of a headache.

The Migration Playbook: Pick Your Poison

There’s more than one way to move your data house, and honestly, each has its own drama.

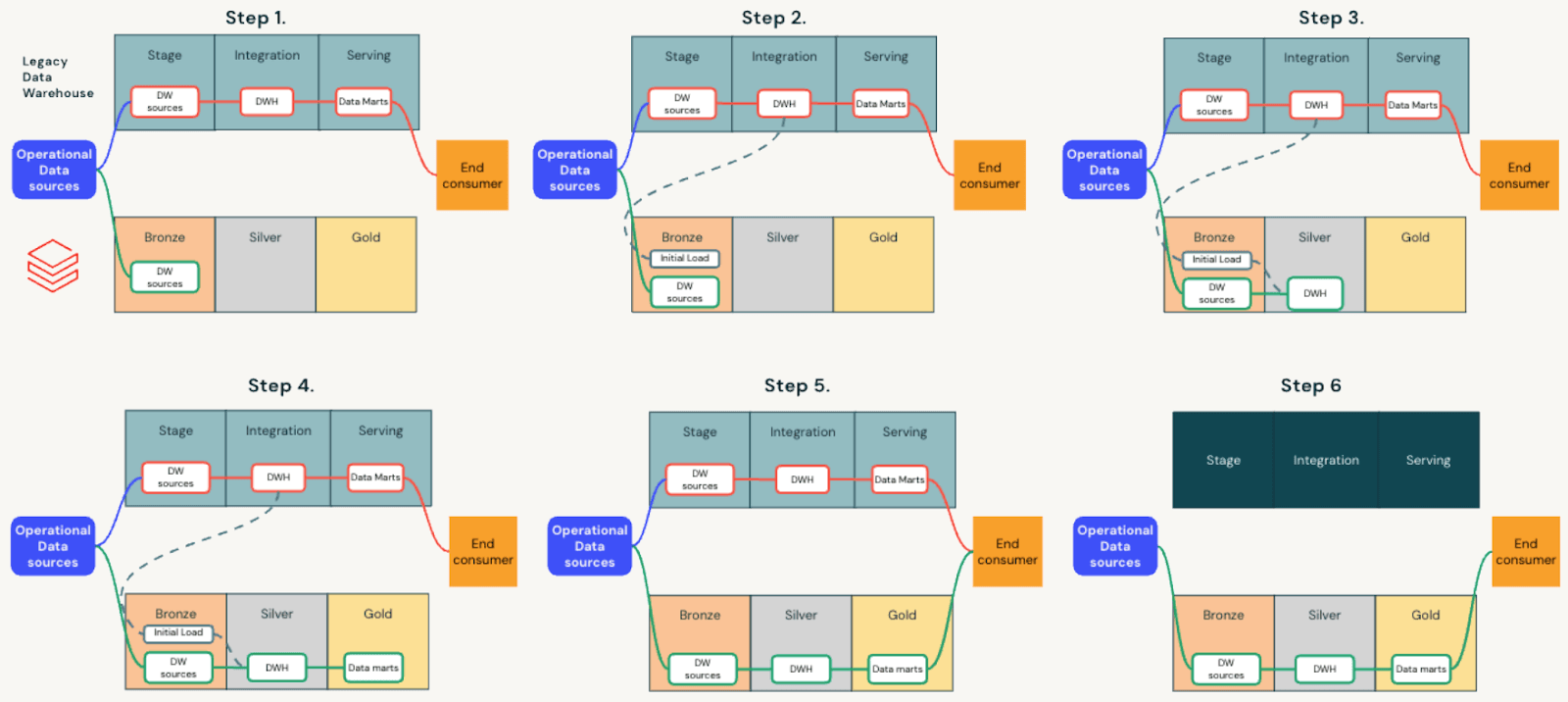

Ingestion & ETL First (Back-to-Front)

You start with the boring (but crucial) stuff, get your data and pipelines right, then worry about making things pretty for the users.

- First, just get the raw data into the Lakehouse. Set up those pipelines so it keeps flowing in.

- Next, roll up your sleeves and rebuild your ETL magic in Databricks. Take advantage of Delta Lake and the fancy new toys.

- Optionally, throw in an operational data store for quick decision support.

- Finally, build your data marts/serving layers, and swap your reports and dashboards to point to these new spots.

What’s cool here? You end up with a rock-solid data foundation, everything’s optimized, and you can tweak pipelines as you go. Downside? The business folks aren’t seeing any shiny new dashboards right away, so they might start panicking. Planning’s gotta be tight or you’ll get chaos. Oh, and managing expectations? It’s practically an Olympic sport at this stage.

Image Source: Transforming Legacy Data Warehouses: A Strategic Migration Blueprint (e-book)

BI-First (Front-to-Back)

On the flip side, you can go flashy: give the business users something to look at, fast.

- Copy over your data marts or whatever people are actually using, so the business teams get their datasets quickly.

- Use Lakehouse Federation to let BI tools pull from both the old system and whatever’s already moved. Magic trick: Users barely notice the transition.

- Once everyone’s happy with their dashboards, go back and migrate ingestion and ETL behind them, now that the serving layer is settled.

The upside? People see value right away, adoption goes up, and you can start rolling out new stuff like semantic layers or natural language queries (shoutout to Databricks Genie and AtScale). But, big but, you’re juggling two systems for a while, and keeping them in sync is not for the faint of heart. Coordination is everything, or you’re toast.

So yeah, pick your strategy. Just don’t try to do it all at once unless you’re secretly a wizard.

Proving the Concept: Validation Before Full Migration

Diving headfirst into a full-on migration without checking your footing? That’s basically asking for chaos, like building IKEA furniture without glancing at the instructions. You’ve gotta see if your grand plan actually stands up in the real world before you bet the farm on it.

Proof of Concept (POC): So... Can We Even Pull This Off?

A POC is your little test-drive. It’s where you poke at the tech and see if it’ll do what you want. Not rocket science, but it’s a lifesaver. You try out some gnarly queries, see if costs spiral out of control, and make sure your data can walk and chew gum at the same time on Databricks. Can you actually transform that complicated ETL pipeline? Will the data even show up where it’s supposed to? Basically, you’re checking if the engine even starts.

MVP: Is This Worth the Trouble?

Now, the MVP, Minimum Viable Product, that’s your “let’s see if this matters” stage. You take a slice of your data warehouse, move it over, and let some early users mess around. If they love it? Awesome. If they hate it? At least you didn’t waste months moving everything. Feedback here is gold; it’s not just about the tech, it’s about proving this move will actually help the business.

Success Criteria: How Do You Know You Nailed It?

Look, you need to know what “winning” looks like. Spell it out. Are you actually making reports faster? Did you unlock some shiny new analytics trick? Is it as cheap and zippy as you promised the boss? Set some benchmarks, lock down your SLAs, and make sure you’re measuring stuff that matters, not just vanity metrics.
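One way to keep yourself honest is to write the criteria down as data and let a script say pass or fail. Below is a minimal Python sketch of that idea, assuming each criterion is a “new number must be at most X times the old number” rule (so “cut report times in half” becomes a ratio of 0.5). The metric names and figures are purely illustrative.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    baseline: float   # measured on the legacy warehouse
    measured: float   # measured on the new platform
    max_ratio: float  # measured / baseline must be at or below this

    def passed(self) -> bool:
        return self.measured <= self.baseline * self.max_ratio


def evaluate(criteria):
    """Map each criterion name to True (met) or False (missed)."""
    return {c.name: c.passed() for c in criteria}


# Illustrative numbers only: halve the report runtime, cut monthly cost by 20%.
CRITERIA = [
    Criterion("month_end_report_runtime_s", baseline=600.0, measured=270.0, max_ratio=0.5),
    Criterion("monthly_platform_cost_usd", baseline=40000.0, measured=36000.0, max_ratio=0.8),
]

if __name__ == "__main__":
    for name, ok in evaluate(CRITERIA).items():
        print(f"{name}: {'PASS' if ok else 'FAIL'}")
```

With these made-up numbers the report target is met and the cost target is not, which is exactly the kind of thing you want to find out before the boss does.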

Implement, Optimize, and Monitor

If your POC and MVP hold up, then it’s go-time. Now the real work kicks in. Most of the migration effort happens here, and honestly, you can’t cut corners on testing. Gotta make sure your data still makes sense, nothing gets scrambled, and everything works as well as, or better than, before.

Unit Tests: Break everything down. Each little bit of transformation logic gets poked and prodded until it behaves.

Compliance Tests: Because, you know, lawyers and auditors. You want your stuff to play nice with the rules.

End-to-End Testing: Feed data in one end, see what comes out the other. Pray it matches what you expect.
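For the “does it match” part, a common trick is to fingerprint rows on both sides and compare by key. Here’s a small Python sketch of that, assuming you can pull the same slice of data from the legacy warehouse and from the migrated table as lists of dicts. The column names and sample rows are hypothetical.

```python
import hashlib


def row_fingerprint(row, columns):
    """Hash the chosen columns of a row into a stable hex digest."""
    # Values are compared as strings, so normalize types and formats upstream.
    joined = "|".join(str(row[col]) for col in columns)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile(legacy_rows, migrated_rows, key, columns):
    """Compare two row sets by key and report missing, unexpected, and changed keys."""
    legacy = {r[key]: row_fingerprint(r, columns) for r in legacy_rows}
    migrated = {r[key]: row_fingerprint(r, columns) for r in migrated_rows}
    shared = legacy.keys() & migrated.keys()
    return {
        "missing": sorted(legacy.keys() - migrated.keys()),
        "unexpected": sorted(migrated.keys() - legacy.keys()),
        "changed": sorted(k for k in shared if legacy[k] != migrated[k]),
    }


# Hypothetical sample rows from each side.
LEGACY = [
    {"id": 1, "amount": "10.50"},
    {"id": 2, "amount": "20.00"},
    {"id": 3, "amount": "7.25"},
]
MIGRATED = [
    {"id": 1, "amount": "10.50"},
    {"id": 2, "amount": "20.01"},
    {"id": 4, "amount": "3.00"},
]

if __name__ == "__main__":
    print(reconcile(LEGACY, MIGRATED, key="id", columns=["amount"]))
```

On real volumes you’d push the hashing down into SQL or Spark rather than pulling rows into Python, but the shape of the check stays the same: missing keys, unexpected keys, and keys whose values drifted.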

Don’t try to go it alone. You’ll need your own team, maybe a couple of Databricks folks, and probably some consultants who’ll charge too much. Tools like Databricks Brickbuilder Solutions can help you not reinvent the wheel every time.

Keeping Your Data on Lock: Governance & Security

So now you’ve got data flooding into your Lakehouse, awesome, but also terrifying. Security and governance need to be tight.

Unity Catalog: This thing’s a lifesaver. One-stop shop for data governance, access controls, and all that jazz.

Data Lineage: You want receipts. Where did that number come from? Can you trust it? Track everything.

Compliance: Don’t get fined. Make sure the platform meets whatever alphabet soup of regulations applies to you.

Performance: Make it Actually Work Fast

Look, you didn’t migrate just to have everything slow to a crawl. Time to tune things up.

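Indexing: Delta Lake doesn’t do traditional indexes; it leans on file-level statistics to skip data it doesn’t need. That covers a lot, but don’t get lazy; learn when Z-ordering on the columns you filter by most, or other tricks, actually matter.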

Caching: Spark caching is your best friend for hot data.

Partitioning: Chop your data up in ways that make queries zip along instead of crawl.

Auto-Scaling: Databricks will scale clusters for you, but experiment with the settings. Tweak the min/max cluster sizes and auto-termination times to save some cash.

Query Optimization: Don’t just blame the tools, work with your devs to write smarter queries. The Catalyst Optimizer is cool, but not magic.
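To make the auto-scaling knobs a bit more concrete, here’s a hedged Python sketch of a cluster spec plus a tiny sanity check. The field names (`autoscale`, `min_workers`, `max_workers`, `autotermination_minutes`) are, as far as I know, the ones the Databricks Clusters API uses, but every value is a placeholder and `check_spec` is a made-up helper, not part of any Databricks SDK.

```python
# Placeholder values throughout; check the names against your workspace's API docs.
CLUSTER_SPEC = {
    "cluster_name": "migration-etl",       # hypothetical name
    "spark_version": "15.4.x-scala2.12",   # example runtime string, pick one your workspace offers
    "node_type_id": "i3.xlarge",           # example AWS node type
    "autoscale": {"min_workers": 2, "max_workers": 8},
    "autotermination_minutes": 30,
}


def check_spec(spec):
    """Return a list of warnings for settings that tend to waste money."""
    warnings = []
    scale = spec.get("autoscale")
    if scale is None:
        warnings.append("no autoscale block: cluster size is fixed")
    elif scale["min_workers"] > scale["max_workers"]:
        warnings.append("min_workers exceeds max_workers")
    # A missing or zero value is treated here as auto-termination being off.
    if not spec.get("autotermination_minutes"):
        warnings.append("auto-termination is off: idle clusters keep billing")
    return warnings


if __name__ == "__main__":
    print(check_spec(CLUSTER_SPEC) or "spec looks sane")
```

The point isn’t the helper itself; it’s that min, max, and idle timeout are the three numbers worth revisiting once you have real usage data.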

Monitoring & Maintenance: You’re Never Really Done

Sorry, but migration isn’t a “set it and forget it” deal. You gotta keep an eye on things.

Performance Tracking: Remember those KPIs? Actually track ‘em.

Cost Optimization: Watch your spending. Tweak clusters, use spot instances, whatever it takes to keep finance off your back.

Troubleshooting: Stuff breaks. Have a plan to fix it fast.

Keep Evolving: Data never sits still. New sources, new features, keep modernizing or get left behind.

Honestly, the journey never really ends. But, at least it’s not boring.

Conclusion: A New Horizon for Data

Moving your ancient data warehouse over to Databricks? You finally get to ditch all that clunky tech debt, the stuff that’s been slowing you down forever, and step into the world of legit analytics. Fast, flexible, less hair-pulling.

It’s not just about shiny new tech, though. It means your team actually gets to make decisions that matter, fast, instead of wading through data swamps. Sure, there’ll be some headaches along the way (I mean, when is tech ever smooth?), but as long as you map out where you’re starting, where you wanna end up, and don’t just wing it, you’ll come out the other side with a data platform that actually works for you.

So, what’s the nightmare from your old warehouse you’re dying to leave in the dust?